Author: JAMIUL ISLAM - Page 7

How to Use Large Language Models for Literature Review and Research Synthesis

Learn how to use large language models like GPT-4 and LitLLM to cut literature review time by up to 92%. Discover practical workflows, tools, costs, and why human verification still matters.

AI Ethics Frameworks for Generative AI: Principles, Policies, and Practice

AI ethics frameworks for generative AI must move beyond vague principles to enforceable policies. Learn how top organizations are reducing bias, ensuring transparency, and holding teams accountable before regulation forces their hand.

Reasoning in Large Language Models: Chain-of-Thought, Self-Consistency, and Debate Explained

Chain-of-Thought, Self-Consistency, and Debate are three key methods that help large language models reason through problems step by step. Learn how they work, where they shine, and why they’re transforming AI in healthcare, finance, and science.

Self-Attention and Positional Encoding: How Transformers Power Generative AI

Self-attention and positional encoding are the core innovations behind Transformer models that power modern generative AI. They enable models to understand context, maintain word order, and generate coherent text at scale.

Vibe Coding vs AI Pair Programming: When to Use Each Approach

Vibe coding speeds up simple tasks with AI-generated code, while AI pair programming offers real-time collaboration for complex problems. Learn when to use each to boost productivity without sacrificing security or quality.

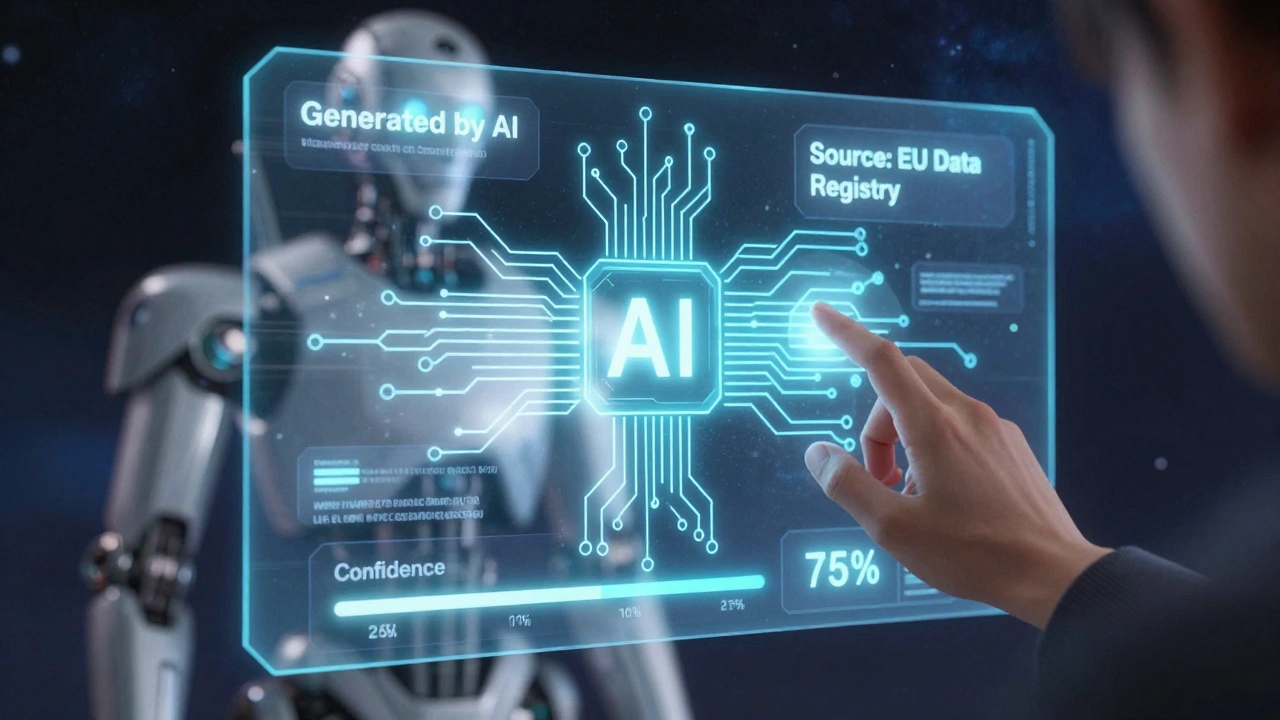

Designing Trustworthy Generative AI UX: Transparency, Feedback, and Control

Trust in generative AI comes from transparency, feedback, and control, not flashy interfaces. Learn how leading platforms like Microsoft Copilot and Salesforce Einstein build user trust with proven design principles.

Prompt Compression: Cut Token Costs Without Losing LLM Accuracy

Prompt compression cuts LLM input costs by up to 80% without sacrificing answer quality. Learn how to reduce tokens using hard and soft methods, what savings to expect in practice, and when to avoid it.

Knowledge Sharing for Vibe-Coded Projects: Internal Wikis and Demos That Actually Work

Learn how vibe-coded internal wikis and short video demos preserve team culture, cut onboarding time by 70%, and reduce burnout without adding more work. Real tools, real results.

Can Smaller LLMs Learn to Reason Like Big Ones? The Truth About Chain-of-Thought Distillation

Smaller LLMs can learn to reason like big ones through chain-of-thought distillation, cutting costs by 90% while keeping 90%+ accuracy. Here's how it works, what fails, and why it's changing AI deployment.

Top Enterprise Use Cases for Large Language Models in 2025

In 2025, enterprises are using large language models to automate customer service, detect fraud, review contracts, and train employees. Success comes from focusing on accuracy, security, and data quality, not model size.

Checkpoint Averaging and EMA: How to Stabilize Large Language Model Training

Checkpoint averaging and EMA stabilize large language model training by combining multiple model states to reduce noise and improve generalization. Learn how to implement them, when to use them, and why they're now essential for models over 1B parameters.

Data Residency Considerations for Global LLM Deployments

Data residency for global LLM deployments means keeping personal data within legal borders. Learn how GDPR, PIPL, and other laws force companies to choose between cloud AI, hybrid systems, or local small models, and the real costs of each.