Large Language Models: How They Work, Where They're Used, and How to Use Them Right

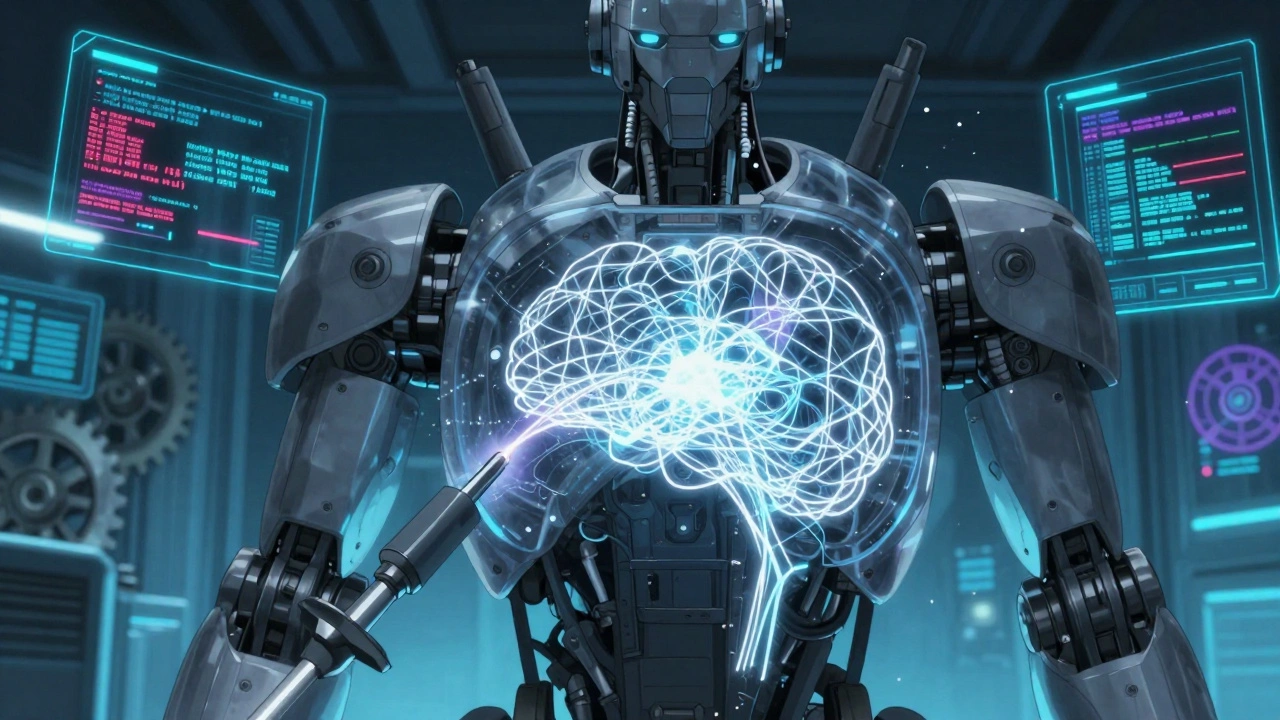

When you interact with a chatbot that answers your questions, writes code, or summarizes a contract, you're likely using a large language model, a type of AI system trained on massive amounts of text to predict and generate human-like language. Also known as LLMs, these models power everything from customer service bots to internal tools—but they’re not magic. They need careful handling to be accurate, secure, and cost-effective.

Behind every large language model are self-attention, the mechanism that lets the model weigh which words matter most in a sentence and positional encoding, how the model keeps track of word order so "I love cats" doesn’t become "cats love I". These aren’t just technical details—they’re why LLMs understand context instead of just recycling phrases. But as models grow, they also grow expensive. Memory and compute costs are now dominated not by the model weights, but by the KV cache, the temporary memory storing past interactions during inference. That’s why teams are turning to optimizations like FlashAttention and quantization to cut costs without losing quality.

Using LLMs in business isn’t just about picking the biggest model. It’s about matching the tool to the task. Enterprises are using them to review contracts, detect fraud, and train employees—but success comes from focusing on accuracy, not size. If your model remembers personal data from training, you risk violating data residency, laws that require personal data to stay within certain countries. If your AI generates code or UI, you need to check if it supports keyboard navigation and screen readers—or you’re excluding users. And if you’re fine-tuning it, you’re not just teaching answers—you’re teaching reasoning, through methods like chain-of-thought distillation, a way to shrink big models into smaller ones that still think step-by-step.

Security is another layer. Prompt injection, data leaks, and hallucinations aren’t theoretical—they happen daily. That’s why teams are moving to continuous security testing, automated checks that run after every model update, catching threats before they’re exploited. And if you’re measuring success, you’re not just tracking accuracy—you’re measuring latency, token costs, and whether your team can actually explain what the AI did. ROI isn’t about flashy demos. It’s about knowing if your AI saved time, cut inventory, or reduced legal risk—and proving it.

What follows is a curated collection of real-world guides on how to build, deploy, and secure LLMs without falling into common traps. You’ll find practical fixes for memory leaks, clear frameworks for ethics and governance, and case studies from companies cutting costs by 90% while keeping results sharp. No fluff. No hype. Just what works.

Chain-of-Thought Prompts for Reasoning Tasks in Large Language Models

Chain-of-thought prompting helps large language models solve complex reasoning tasks by breaking problems into steps. It works best on models over 100 billion parameters and requires no fine-tuning-just well-structured prompts.

Encoder-Decoder vs Decoder-Only Transformers: Which Architecture Powers Today’s Large Language Models?

Encoder-decoder and decoder-only transformers power today's large language models in different ways. Decoder-only models dominate chatbots and general AI due to speed and scalability, while encoder-decoder models still lead in translation and summarization where precision matters.

Prompt Length vs Output Quality: The Hidden Cost of Too Much Context in LLMs

Longer prompts don't improve LLM output-they hurt it. Discover why 2,000 tokens is the sweet spot for accuracy, speed, and cost-efficiency, and how to fix bloated prompts today.

Red Teaming for Privacy: How to Test Large Language Models for Data Leakage

Learn how red teaming exposes data leaks in large language models, why it's now legally required, and how to test your AI safely using free tools and real-world methods.

Autonomous Agents Built on Large Language Models: What They Can Do and Where They Still Fail

Autonomous agents built on large language models can plan, act, and adapt without constant human input-but they still make mistakes, lack true self-improvement, and struggle with edge cases. Here’s what they can do today, and where they fall short.

Structured vs Unstructured Pruning for Efficient Large Language Models

Structured and unstructured pruning help shrink large language models for real-world use. Structured pruning keeps hardware compatibility; unstructured gives higher compression but needs special chips. Learn which one fits your needs.

How Vocabulary Size in Large Language Models Affects Accuracy and Performance

Vocabulary size in large language models directly impacts accuracy, efficiency, and multilingual performance. Learn how tokenization choices affect real-world AI behavior and what size works best for your use case.

How to Use Large Language Models for Literature Review and Research Synthesis

Learn how to use large language models like GPT-4 and LitLLM to cut literature review time by up to 92%. Discover practical workflows, tools, costs, and why human verification still matters.

Reasoning in Large Language Models: Chain-of-Thought, Self-Consistency, and Debate Explained

Chain-of-Thought, Self-Consistency, and Debate are three key methods that help large language models reason through problems step by step. Learn how they work, where they shine, and why they’re transforming AI in healthcare, finance, and science.

Citations and Sources in Large Language Models: What They Can and Cannot Do

LLMs can generate convincing citations, but most are fake. Learn why AI hallucinates sources, how to spot them, and what you must do to avoid being misled by AI-generated references in research.