Ever typed a super long prompt into ChatGPT, thinking more details would mean better answers, only to get a messy, off-track response? You’re not alone. Many developers and businesses assume that stuffing more context into a prompt will improve results. But the truth is, prompt length often hurts performance rather than helping it.

More Tokens Don’t Mean Better Answers

It’s counterintuitive, but research shows that after about 2,000 tokens, most large language models start performing worse. GPT-4, Claude 3, and Gemini 1.5 Pro all show this pattern, even though they technically support inputs of 100,000+ tokens. At 500 tokens, accuracy hovers around 95%. At 1,000, it drops to 90%. By 2,000 tokens, it’s down to 80%. Beyond that, it keeps sliding: each extra 500 tokens cuts accuracy by roughly 5 percentage points. This isn’t a bug. It’s how attention mechanisms work. Transformers weigh every token against every other token, so the attention cost grows with the square of the prompt length. Double the prompt and that cost doesn’t double; it quadruples. At 4,000 tokens, processing time can spike to over five times what it is at 1,000. And the model doesn’t use all that extra data wisely.

The Recency Bias Problem

LLMs don’t treat all parts of a prompt equally. They focus heavily on what comes last. This is called recency bias. In a 10,000-token prompt, the first 20% of information gets only 12-18% of the model’s attention. So if you put your core instruction at the start (“Summarize this report accurately”) and then add 9,000 tokens of background data, the model might forget your original request entirely. A Microsoft and Stanford study found that hallucinations (made-up facts) jump by 34% when prompts exceed 2,500 tokens. Bias also grows: outputs become 28% more likely to reflect stereotypes or flawed assumptions buried in the long context. One developer on GitHub reported their math solver’s accuracy dropped from 92% to 64% just by extending a prompt from 1,800 to 3,500 tokens. The longer prompt didn’t make the model smarter; it confused it.

What Works Better Than Long Prompts

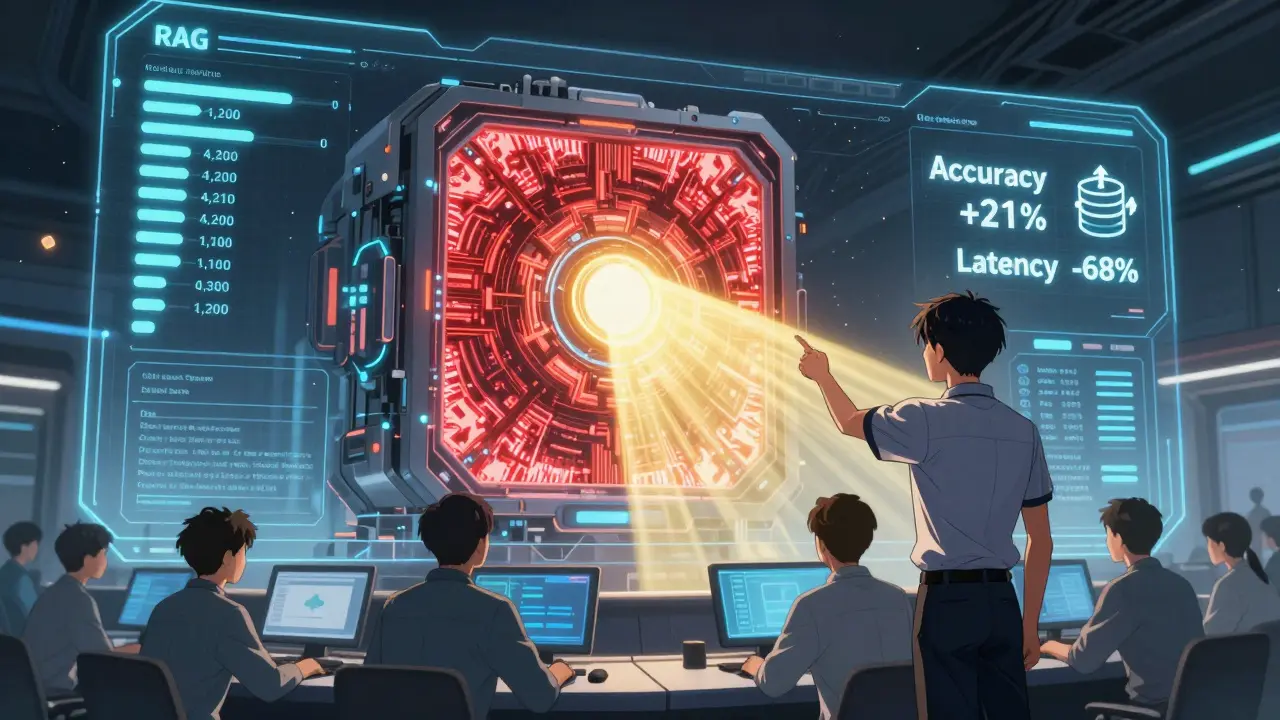

Instead of dumping everything into one prompt, smart teams use retrieval-augmented generation (RAG). RAG keeps the prompt short, usually under 1,000 tokens, and pulls in only the relevant context when needed. A PromptLayer case study showed a 16K-token RAG system outperformed a 128K-token monolithic prompt by 31% in accuracy while cutting latency by 68%. Even advanced techniques like Chain-of-Thought (CoT) prompting help, but only up to a point. At 1,000 tokens, CoT boosts accuracy by 19%. At 2,500 tokens, that benefit shrinks to just 6%. The model can’t hold the logic together if the input is too bloated.

Model Differences: Open vs Proprietary

Not all models behave the same. Llama 3 70B shows less degradation between 1,000 and 2,000 tokens than GPT-4-turbo. Open-weight models may handle longer prompts slightly better due to training methods. But even they hit a wall. Google’s Gemini 1.5 Pro holds an edge at 2,000 tokens (88% accuracy vs GPT-4’s 82%), but both decline past that point. Anthropic’s internal testing found 1,800 tokens is the “sweet spot” for Claude 3’s reasoning tasks. Beyond that, performance drops 2.3% per additional 100 tokens. Stanford’s Dr. Percy Liang puts it bluntly: “Beyond 2,000 tokens, we’re not giving models more context; we’re giving them more noise to filter through.”

Real-World Impact: Cost, Speed, and Customer Satisfaction

This isn’t just academic. Enterprises are losing money on bad prompt design. Altexsoft’s 2023 analysis found that optimizing prompt length cut cloud costs by 37% and improved accuracy by 22% in customer service bots. On G2, companies reported response times dropping from 4.2 seconds to 1.7 seconds after trimming prompts. Customer satisfaction scores jumped 27 points. One Reddit user reduced their prompt from 4,200 to 1,100 tokens and saw financial report accuracy climb from 68% to 89%. HackerNews surveys show 73% of developers have experienced the recency bias trap, where critical instructions at the top of a long prompt get ignored.

What’s the Right Length?

There’s no universal number, but there are solid guidelines:

- Simple tasks (classification, sentiment analysis): 500-700 tokens

- Complex reasoning (analysis, planning, multi-step logic): 800-1,200 tokens

- Avoid going over 2,000 tokens unless you’ve tested it
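The guidelines above can be turned into a quick pre-flight check. The sketch below is illustrative only: the function names are hypothetical, and `estimate_tokens` uses a rough "about 4 characters per token" heuristic as an assumption. For real measurements, swap in the tokenizer of the model you actually call.

```python
# Upper and lower guideline bounds from the list above, in tokens.
GUIDELINES = {
    "simple": (500, 700),     # classification, sentiment analysis
    "complex": (800, 1200),   # analysis, planning, multi-step logic
}
HARD_CEILING = 2000  # do not exceed without testing


def estimate_tokens(text: str) -> int:
    """Rough estimate: about 4 characters per token (an assumption, not a tokenizer)."""
    return max(1, len(text) // 4)


def check_prompt(text: str, task: str) -> str:
    """Return 'ok', 'over_guideline', or 'needs_testing' for a prompt."""
    tokens = estimate_tokens(text)
    _, upper = GUIDELINES[task]
    if tokens > HARD_CEILING:
        return "needs_testing"
    if tokens > upper:
        return "over_guideline"
    return "ok"
```

A check like this fits naturally in a test suite or CI step, so a prompt that quietly grows past its budget gets flagged before it ships.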

When Longer Prompts Actually Help

There are exceptions. Legal contract analysis, medical record review, or technical documentation cross-referencing sometimes need long context. A Nature study in April 2025 found that for highly specialized tasks requiring connections between distant sections, prompts of 32,000+ tokens offered marginal gains. But these cases are rare: only 8% of real-world use cases, according to PromptLayer.

The Future: Intelligent Context, Not Just More Tokens

The industry is moving away from brute-force prompting. Google’s January 2025 “Adaptive Context Window” helps Gemini 1.5 Pro retain early-sequence info better. Anthropic’s Claude 3.5, launching in 2025, will auto-score and filter low-value tokens. Meta AI’s March 2025 research on hierarchical attention showed 29% better performance on 4,000-token prompts by focusing attention in layers. By 2027, Gartner predicts 90% of enterprise LLM systems will use automated context optimization instead of fixed-length prompts. Prompt engineering was a $287 million market in 2024 and is growing at 63% annually. Fortune 500 companies now have dedicated teams just for this.

How to Fix Your Prompts Today

Here’s what you can do right now:

- Trim ruthlessly. Delete examples, filler text, and redundant explanations.

- Repeat key instructions. Put your main goal at the start and the end to fight recency bias.

- Use RAG. Store context externally and pull in only what’s needed.

- Test with real data. Measure accuracy and speed before and after shortening.

- Avoid copying long prompts from blogs. What works for one task won’t work for another.
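As one way to apply the "repeat key instructions" tip, here is a minimal sketch that places the instruction both before and after the context. The function name `sandwich_prompt` and the "Context:" and "Reminder:" labels are illustrative choices, not a standard format.

```python
def sandwich_prompt(instruction: str, context: str) -> str:
    """Place the main instruction at the start and again at the end of the prompt."""
    instruction = instruction.strip()
    context = context.strip()
    return (
        f"{instruction}\n\n"
        f"Context:\n{context}\n\n"
        f"Reminder: {instruction}"
    )


prompt = sandwich_prompt(
    "Summarize this report accurately.",
    "Q3 revenue rose 4% while support tickets fell.",
)
print(prompt)
```

The repeated line costs only a few tokens, so it is cheap to test whether it helps on your own task.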

Why This Matters

LLMs aren’t getting smarter because they see more text. They’re getting smarter because we learn how to ask better questions. The biggest bottleneck isn’t model size; it’s how we feed the model information. Stop assuming more is better. Start optimizing for clarity, not volume.

Does a longer prompt always mean better output from LLMs?

No. After about 2,000 tokens, most models like GPT-4, Claude 3, and Gemini 1.5 Pro start performing worse. Longer prompts increase computational load, slow down responses, and reduce accuracy due to attention overload and recency bias. The model struggles to focus on critical instructions buried in extra text.
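To see why computational load grows so quickly, here is a back-of-the-envelope sketch. It counts only the token-to-token comparisons in full self-attention, which is a simplifying assumption: real latency also includes costs that scale linearly with length, plus implementation optimizations, so measured slowdowns will differ.

```python
def attention_pairs(n_tokens: int) -> int:
    """Token-to-token comparisons when every token attends to every token."""
    return n_tokens * n_tokens


def relative_cost(n_tokens: int, baseline: int = 1000) -> float:
    """Attention cost relative to a baseline prompt length."""
    return attention_pairs(n_tokens) / attention_pairs(baseline)


for n in (1000, 2000, 4000):
    print(n, attention_pairs(n), relative_cost(n))
```

Doubling from 1,000 to 2,000 tokens gives a relative cost of 4.0, and 4,000 tokens gives 16.0, for the attention term alone.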

What’s the ideal prompt length for most tasks?

For simple tasks like classification or sentiment analysis, use 500-700 tokens. For complex reasoning like analysis or planning, aim for 800-1,200 tokens. Never exceed 2,000 tokens without testing. Most optimal prompts are shorter than people assume.

Why do long prompts cause hallucinations?

Long prompts overwhelm the model’s attention mechanism, making it harder to distinguish relevant facts from noise. This leads to guessing, especially when key instructions are buried. Microsoft and Stanford found hallucination rates rise by 34% beyond 2,500 tokens because the model loses track of what’s important.

What is RAG, and how does it help with prompt length?

RAG stands for Retrieval-Augmented Generation. Instead of putting all context in the prompt, RAG stores it externally and pulls in only the most relevant pieces when needed. This keeps prompts short (under 1,000 tokens) while still giving the model access to deep information. One case study showed RAG improved accuracy by 31% and cut latency by 68% compared to a massive 128K-token prompt.
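The retrieval step can be sketched in a few lines. This toy version scores chunks by keyword overlap with the question, which is a stand-in assumption: production RAG systems typically use embedding similarity and a vector store. The names `retrieve` and `build_prompt` are hypothetical.

```python
import re


def words(text: str) -> set[str]:
    """Lowercased alphanumeric words in a text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return up to k chunks sharing the most words with the question."""
    q = words(question)
    scored = [(len(q & words(c)), i, c) for i, c in enumerate(chunks)]
    # Highest overlap first; ties keep the original chunk order.
    scored.sort(key=lambda t: (-t[0], t[1]))
    return [c for score, _, c in scored[:k] if score > 0]


def build_prompt(question: str, chunks: list[str], k: int = 2) -> str:
    """Build a short prompt containing only the retrieved chunks."""
    context = "\n".join(f"- {c}" for c in retrieve(question, chunks, k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )
```

Everything else in the knowledge base stays out of the prompt, so the prompt length depends on `k` and not on how much material you have stored.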

Can I use longer prompts for legal or medical tasks?

Yes, but only in rare cases. A Nature study found that for complex legal contract analysis or medical record review, where cross-referencing distant sections is critical, prompts of 32,000+ tokens can help. However, these scenarios make up only about 8% of real-world use cases. For 92% of applications, shorter prompts perform better.

How can I test my prompt length for optimal results?

Start with a short prompt (500-700 tokens), then gradually add context one piece at a time. Measure accuracy and response time after each change. Use tools like PromptLayer’s PromptOptimizer or PromptPanda’s Calculator to automate testing. Most users find their sweet spot in 2-3 iterations.
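If you prefer to script this loop yourself, a minimal harness might look like the sketch below. It assumes `model` is any callable that takes a prompt string and returns a string (a hypothetical wrapper around your own API client), and it scores answers by exact match, which will be too strict for free-form output.

```python
import time
from typing import Callable, List, Sequence, Tuple

Case = Tuple[str, str]  # (question, expected answer)


def evaluate(model: Callable[[str], str], prefix: str,
             cases: Sequence[Case]) -> Tuple[float, float]:
    """Return (accuracy, mean seconds per call) for one prompt prefix."""
    correct = 0
    start = time.perf_counter()
    for question, expected in cases:
        answer = model(f"{prefix}\n\n{question}")
        if answer.strip() == expected:
            correct += 1
    elapsed = time.perf_counter() - start
    return correct / len(cases), elapsed / len(cases)


def sweep(model: Callable[[str], str], base: str,
          context_pieces: Sequence[str],
          cases: Sequence[Case]) -> List[Tuple[int, float, float]]:
    """Add context one piece at a time, measuring after each addition."""
    prompt = base
    results = [(0, *evaluate(model, prompt, cases))]
    for i, piece in enumerate(context_pieces, start=1):
        prompt = f"{prompt}\n{piece}"
        results.append((i, *evaluate(model, prompt, cases)))
    return results
```

Each result row is `(pieces_added, accuracy, seconds_per_call)`, so you can stop adding context at the point where accuracy flattens or latency climbs.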

Why do some developers still use long prompts?

Many assume more context equals better results, especially when they’re new to LLMs. Others copy prompts from blogs without testing. Altexsoft found 68% of enterprise LLM implementations they audited used bloated prompts that hurt performance. It’s a common mistake, but one that’s easy to fix.

Will future models solve the prompt length problem?

Not by just scaling up. Even models with 200,000-token contexts show performance drops after 2,000-3,000 tokens. The real progress is in smarter attention systems, like Google’s Adaptive Context Window and Anthropic’s context relevance scoring. These tools help models focus better on what matters, not just handle more text. The future is intelligent context, not longer prompts.

Samuel Bennett

lol so you're telling me after all this time I've been wasting money on GPT-4 just because I thought more words = better AI? Newsflash: the algorithm's probably just trolling us. I bet they train these models to get dumber the longer your prompt is to sell us more API credits. Who even designed this? A corporate bot with a PhD in overcomplicating things?

Rob D

Oh please. This is why America’s tech leadership is crumbling. You people treat LLMs like they’re magic elves who read your mind when you scream at them in all caps. Meanwhile, China’s training models on 500K-token prompts and they’re diagnosing cancer from scratch. You wanna compete? Stop being lazy and learn how to structure a damn prompt. Or better yet-move to India and let someone who actually knows what they’re doing fix this mess.

Franklin Hooper

2000 tokens is the threshold. Not 1800. Not 2500. 2000. The data is clear. The attention mechanism degrades exponentially beyond that point. No need for fluff. No need for anecdotes. Just math. And you're still using 4000 token prompts? You're not a developer. You're a noise generator.

Jess Ciro

They don't want you to know this. Big AI is hiding the truth. Why do you think they pushed 128K token limits? To keep you dependent on their bloated systems. They profit from your confusion. Your slow responses. Your wasted cloud bills. This isn't about tech-it's about control. They want you thinking more context = better. But the truth? They're just selling you smoke and mirrors wrapped in API keys.

saravana kumar

This is very good article. But I think you underestimate the cultural difference in prompt engineering. In India, we use long prompts because we are taught to be thorough. We do not trust AI to guess our intent. We write everything. Even if it is inefficient. This is not about optimization. This is about safety. In high-stakes environments, we cannot afford to lose context. So we endure the latency. We pay the cost. Because in our world, a hallucinated medical diagnosis costs more than a cloud bill.

Tamil selvan

Thank you for sharing this insightful and deeply researched perspective-it's refreshing to see someone cut through the hype and focus on real, measurable outcomes. I’ve personally seen teams waste weeks trying to ‘fix’ models by adding more context, when all they needed was a clean, focused prompt and a solid RAG pipeline. Your guidelines are practical, grounded, and truly helpful. I encourage every engineer reading this to print this out and tape it to their monitor. You’ve saved countless hours-and possibly entire projects-for people who didn’t know they were doing it wrong. Truly appreciated.

Mark Brantner

so i just trimmed my 5k token monster prompt down to 900 tokens and my ai went from writing essays about my cat’s existential crisis to actually giving me the damn sales report i asked for?? like… wow. i feel like a genius. also i think my laptop just sighed in relief. also also i misspelled ‘prompt’ three times in this comment. forgive me. i’m still recovering from 3 years of prompt trauma