Author: JAMIUL ISLAM - Page 4

Framework-Aligned Vibe Coding with Wasp for Full-Stack Apps

Wasp is a declarative full-stack framework that generates React and Node.js code backed by PostgreSQL from a simple config file, reportedly cutting development time by 60-70%. It is ideal for MVPs and internal tools.

Real-Time Multimodal Assistants Powered by Large Language Models: What They Can Do Today

Real-time multimodal assistants powered by large language models can see, hear, and respond instantly to text, images, and audio. Learn how GPT-4o, Gemini 1.5 Pro, and Llama 3 work today, and where they still fall short.

Security for RAG: How to Protect Private Documents in Large Language Model Workflows

Learn how to protect private documents in RAG systems using multi-layered security, encryption, access controls, and real-world best practices to prevent data leaks in enterprise AI workflows.

Trustworthy AI for Code: How Verification, Provenance, and Watermarking Are Changing Software Development

AI-generated code is everywhere, but without verification, provenance, and watermarking, it’s a ticking time bomb. Learn how trustworthy AI for code is changing software development in 2026.

Prompting as Programming: How Natural Language Became the Interface for LLMs

Natural language is now the primary way humans interact with AI. Prompt engineering turns simple text into powerful programs, replacing code for many tasks. Learn how it works, why it's changing development, and how to use it effectively.

Secure Human Review Workflows for Sensitive LLM Outputs

Human review workflows are essential for securing sensitive LLM outputs in regulated industries. Learn how to build a compliant, scalable system that prevents data leaks and meets GDPR and HIPAA requirements.

Board-Level Briefing: Strategic Implications of Vibe Coding for 2026

Vibe coding lets non-engineers build software with AI, but at what cost? Boards must understand its risks: hidden tech debt, legal liability, and system failures. Here’s how to use it safely in 2026.

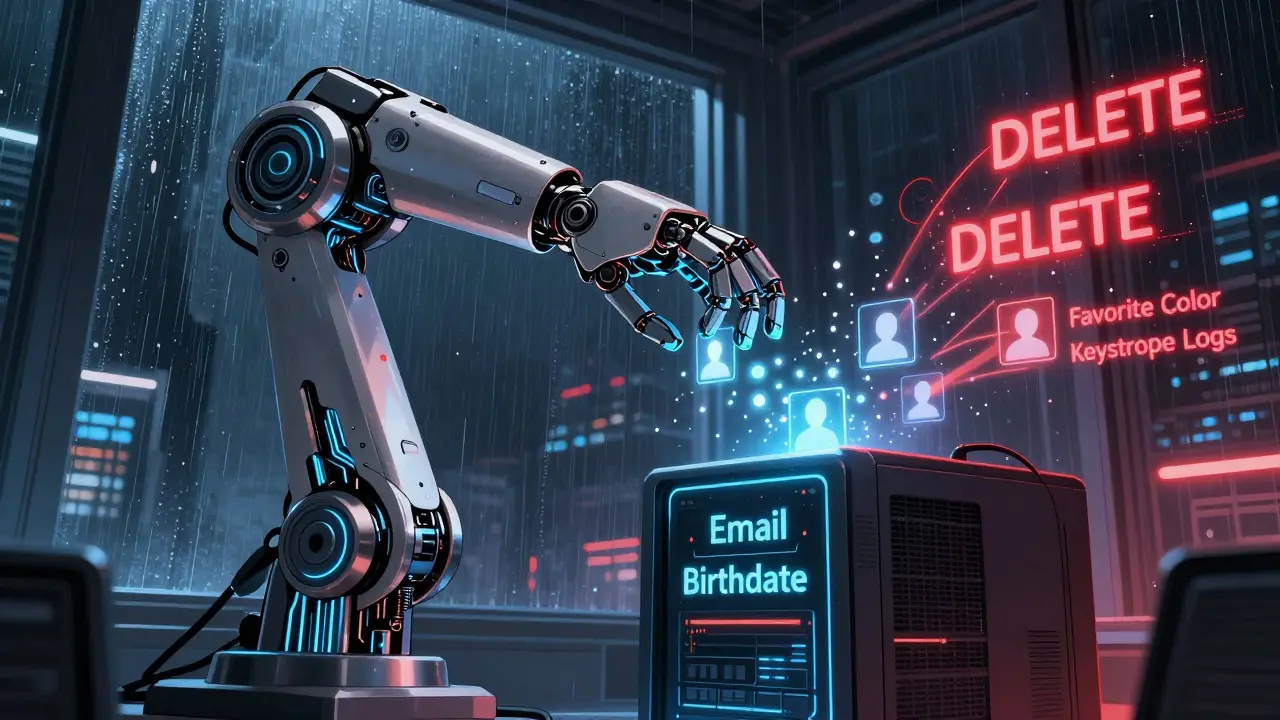

Data Retention Policies for Vibe-Coded SaaS: What to Keep and Purge

Vibe-coded SaaS apps collect too much data by default. Learn what to keep, what to purge, and how to use precise AI prompts to avoid GDPR fines and reduce storage costs.

Vibe Coding for IoT Demos: Simulate Devices and Build Cloud Dashboards in Hours

Vibe coding lets you build IoT device simulations and cloud dashboards in hours using AI, not code. Learn how to simulate sensors, connect to AWS IoT Core, and generate live dashboards with plain English prompts.

Customer Support Automation with LLMs: Routing, Answers, and Escalation

LLMs are transforming customer support by automating responses, smartly routing inquiries, and escalating only what needs human help. See how companies cut costs, boost satisfaction, and scale support without hiring more agents.

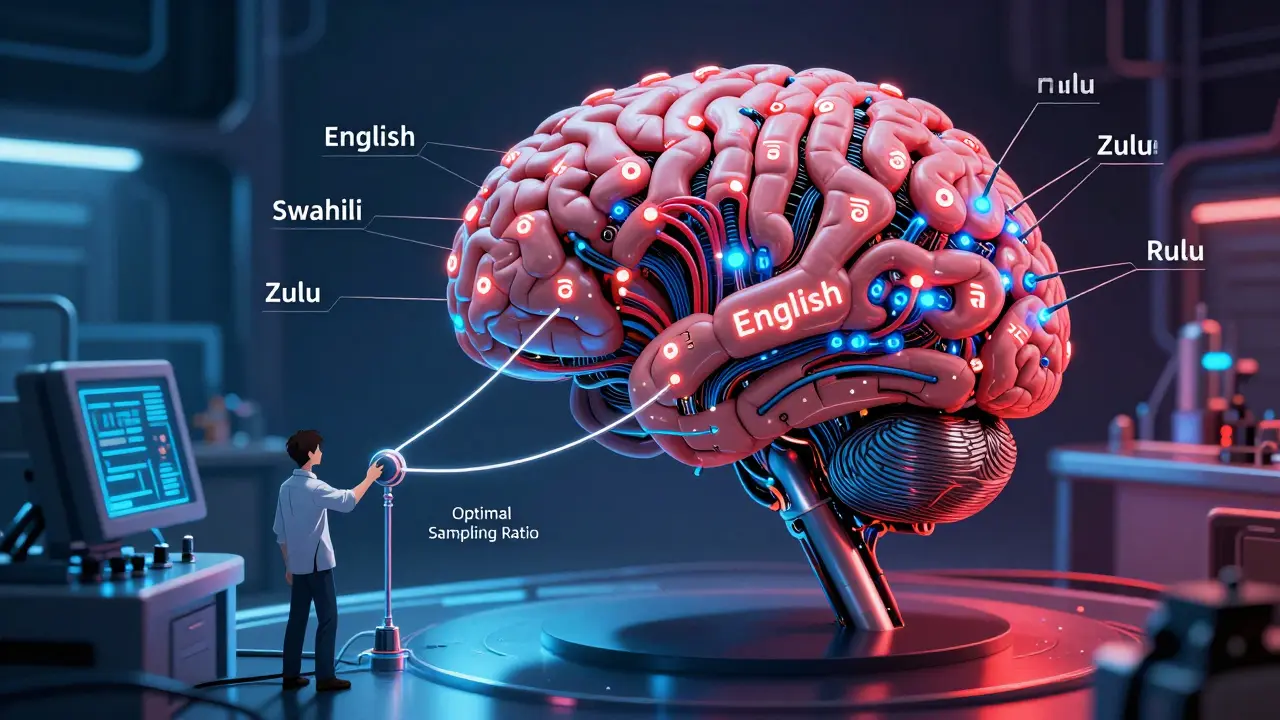

Scaling Multilingual Large Language Models: How Data Balance and Coverage Drive Performance

Discover how balancing training data across languages, rather than just adding more of it, dramatically improves multilingual LLM performance. Learn the science behind optimal sampling and why it's replacing outdated methods.

How to Choose Between API and Open-Source LLMs in 2025

In 2025, choosing between API and open-source LLMs comes down to performance, cost, and control. Open-source models like Llama 3 now match proprietary models on most tasks at 86% lower cost, but they demand technical expertise. APIs are easier to adopt but expensive at scale.