Most customer support teams are drowning in repetitive questions. One company told me their agents field the same five questions 800 times a week. That’s not service; it’s busywork. Large Language Models (LLMs) are changing that. They don’t just answer FAQs. They decide which questions need a human, route them to the right person, and handle the rest quickly, accurately, and in 20 languages. This isn’t science fiction. It’s happening right now at companies like Shopify, AWS, and LivePerson.

How LLMs Handle Customer Questions Without Humans

Traditional chatbots follow rigid rules: if the user says "track my order," show them the tracking page. But what if they say, "Where’s my shipment? I’ve been waiting three days and it’s late"? That’s not a keyword match; it’s frustration. LLMs understand context. They read the whole message, detect emotion, and respond like a human would. A customer asking about a billing error gets a clear explanation. Someone upset about a delayed delivery gets an apology and a solution, not a canned response.

Companies using LLMs for answers see first-contact resolution rates jump from 35% to 60%. Shopify’s multilingual support system, for example, cut resolution time for non-English customers by 27%. That’s because LLMs trained on real support logs know how to phrase answers that actually help. They don’t just repeat scripts. They adapt.

Smart Routing: Not All Questions Are the Same

Not every customer question needs GPT-4. Sending a simple question like "What’s your return policy?" to a $30-per-million-tokens model is like using a jet engine to power a bicycle. That’s where routing comes in.

There are three main ways LLMs route inquiries:

- Static routing uses keywords. If the message has "billing" or "invoice," send it to the finance team. Simple, but brittle.

- Dynamic routing uses an LLM to classify intent. It reads the whole message, understands if it’s a complaint, a question, or a request, and decides where to send it.

- Task-based routing is the most advanced. It sends billing questions to a model fine-tuned on financial data, technical issues to a product-savvy model, and emotional complaints to one trained on empathy.
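The three approaches can be combined in one small dispatcher. The sketch below is a hypothetical illustration, not any vendor’s API: the keyword table, the model names, and `toy_classifier` (a stand-in for a real LLM call) are all assumptions made for the example.

```python
from typing import Callable, Optional

# Hypothetical keyword table for static routing.
STATIC_RULES = {
    "finance": ("billing", "invoice"),
}

# Hypothetical intent-to-model table for task-based routing.
TASK_MODELS = {
    "billing": "finance-tuned-model",
    "technical": "product-tuned-model",
    "complaint": "empathy-tuned-model",
}


def static_route(message: str) -> Optional[str]:
    """Static routing: return a team if a keyword matches, else None."""
    text = message.lower()
    for team, keywords in STATIC_RULES.items():
        if any(keyword in text for keyword in keywords):
            return team
    return None


def toy_classifier(message: str) -> str:
    """Stand-in for an LLM intent classifier; a real system would call a model."""
    text = message.lower()
    if "crash" in text or "error" in text:
        return "technical"
    if "charged" in text or "refund" in text:
        return "billing"
    if "late" in text or "waiting" in text:
        return "complaint"
    return "question"


def task_route(message: str, classify: Callable[[str], str]) -> str:
    """Dynamic plus task-based routing: classify intent, then pick a model."""
    intent = classify(message)
    return TASK_MODELS.get(intent, "general-model")
```

Static routing misses the frustrated shipment message from earlier because it contains neither "billing" nor "invoice", which is the brittleness described above. The classifier-based path still routes it as a complaint.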

The RouteLLM framework from LMSYS shows how powerful this is. It routes 80% of simple queries to lightweight models like Llama 3 8B, which cost $0.07 per million tokens. Complex ones go to GPT-4 at $30 per million. Result? 45-65% lower costs and 92-95% accuracy. That’s not a trade-off; it’s optimization.
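How much routing saves depends on how tokens, not just queries, split between the two models. The sketch below uses the per-million-token prices quoted above; the per-query token counts are hypothetical, and it does not attempt to reproduce RouteLLM’s published figures.

```python
def savings_vs_large_only(share_simple: float,
                          tokens_simple: float,
                          tokens_complex: float,
                          price_small: float = 0.07,
                          price_large: float = 30.0) -> float:
    """Fraction of model spend saved by routing simple queries to the small model.

    Prices are dollars per million tokens; token counts are averages per query.
    """
    share_complex = 1 - share_simple
    # Cost per query (times one million) when routing is used.
    routed = (share_simple * tokens_simple * price_small
              + share_complex * tokens_complex * price_large)
    # Cost per query (times one million) when everything goes to the large model.
    baseline = (share_simple * tokens_simple
                + share_complex * tokens_complex) * price_large
    return 1 - routed / baseline


# Hypothetical workloads: 80% of queries are simple in both cases.
same_length = savings_vs_large_only(0.8, tokens_simple=300, tokens_complex=300)
long_complex = savings_vs_large_only(0.8, tokens_simple=300, tokens_complex=1500)
print(f"equal-length queries: {same_length:.0%} saved")
print(f"complex queries 5x longer: {long_complex:.0%} saved")
```

Under these assumptions the saving is roughly 80% when all queries are the same length, and roughly 44% when complex queries are five times longer. That is one reason token-weighted savings come in below the share of queries routed to the small model.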

When to Escalate to a Human

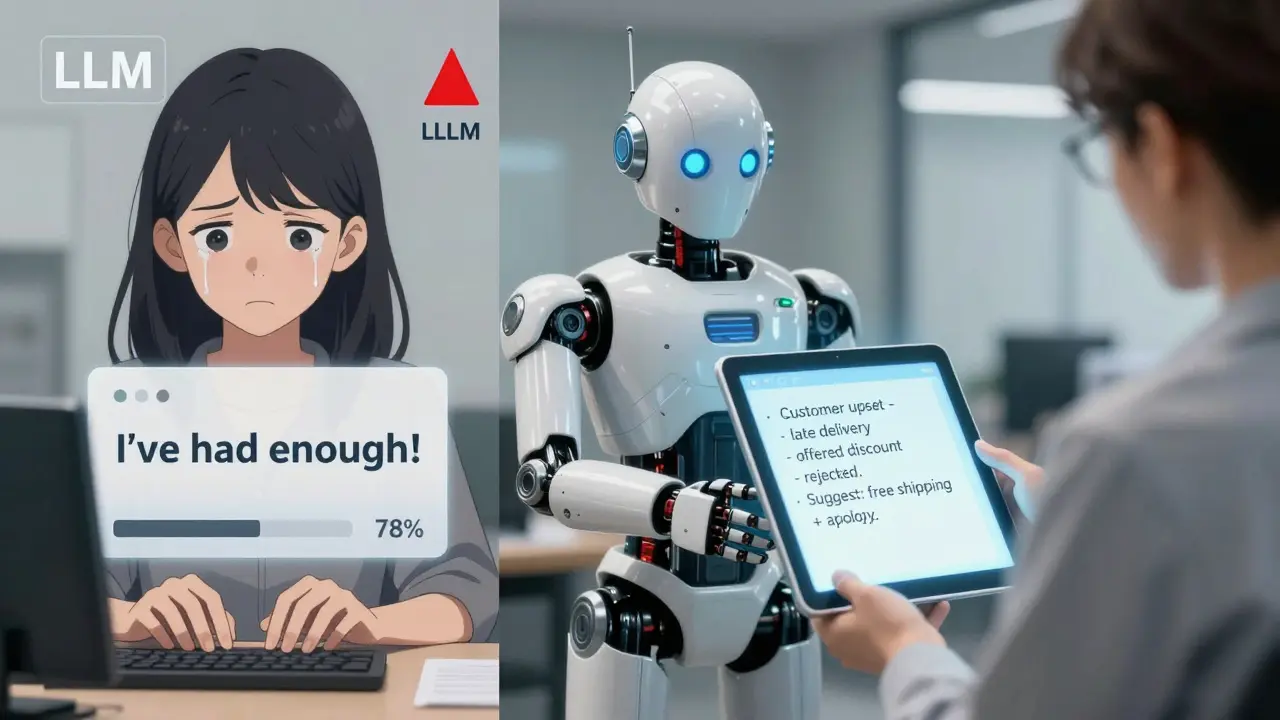

LLMs are great, but they’re not perfect. They struggle with highly emotional customers. LivePerson’s data shows accuracy drops from 90% for neutral questions to 70% when someone is angry or crying. That’s why smart systems don’t try to fix everything.

Good escalation protocols kick in when:

- The customer uses words like "upset," "angry," or "I’ve had enough."

- The issue involves refunds, legal concerns, or account suspension.

- The LLM is unsure: its confidence score falls below 85%.
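These three triggers translate into a short check. This is a minimal sketch: the phrase lists are illustrative assumptions based on the bullets above, and `confidence` is assumed to be a 0-1 score supplied by whatever model or classifier sits upstream.

```python
from typing import Optional

# Illustrative phrase lists; a production system would use a tone classifier.
EMOTION_PHRASES = ("upset", "angry", "i've had enough")
SENSITIVE_TERMS = ("refund", "legal", "suspen")  # "suspen" covers suspended/suspension
CONFIDENCE_THRESHOLD = 0.85


def escalation_reason(message: str, confidence: float) -> Optional[str]:
    """Return why a message should go to a human, or None if the AI can proceed."""
    # Normalize curly apostrophes so "I’ve" and "I've" match the same phrase.
    text = message.lower().replace("\u2019", "'")
    if any(phrase in text for phrase in EMOTION_PHRASES):
        return "emotional language"
    if any(term in text for term in SENSITIVE_TERMS):
        return "sensitive topic"
    if confidence < CONFIDENCE_THRESHOLD:
        return "low confidence"
    return None
```

Returning the reason rather than a bare boolean lets the handoff tell the agent why the case landed in their queue.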

Zendesk’s benchmark shows top systems escalate only 18-22% of cases. The rest get resolved by AI. That’s a huge reduction from the 50-70% escalation rates of old-school chatbots.

One company on Reddit had a bad experience: their AI didn’t recognize emotional language and kept sending robotic replies. CSAT dropped 12 points. They fixed it by adding a dedicated empathy model that flags tone and triggers human handoff. Now, CSAT is up 8 points.

How It’s Built: The Real Steps

Building this isn’t just plugging in an API. It’s a process.

- Identify use cases: What questions do your agents answer most? Billing? Returns? Technical setup? Focus on the top 5.

- Collect data: You need 5,000-50,000 real customer messages and agent responses. No fake data. Real logs.

- Choose models: Use smaller models (Llama 3, Mistral) for simple tasks. Save GPT-4 or Claude 3 for complex ones.

- Fine-tune: Train the models on your data. A finance company trained theirs on 12,000 billing conversations. Accuracy jumped from 62% to 94%.

- Integrate: Connect to Zendesk, Salesforce, or your CRM via API. AWS Lambda functions handle the backend. Response time? 1.2-2.8 seconds.

- Monitor and improve: Track CSAT, resolution rate, escalation rate. Adjust prompts weekly. If accuracy drops, retrain.
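For the monitoring step, the three metrics can be computed from a plain list of ticket records. The sketch below makes some assumptions: the `Ticket` fields are hypothetical, and CSAT is taken to be the share of survey responses scoring 4 or 5 on a 1-5 scale, which is one common definition but may not match your survey tool.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Ticket:
    resolved_by_ai: bool
    escalated: bool
    csat_score: Optional[int] = None  # 1-5 survey answer, None if no response


def weekly_metrics(tickets: List[Ticket]) -> Dict[str, Optional[float]]:
    """Compute AI resolution rate, escalation rate, and CSAT for a batch of tickets."""
    total = len(tickets)
    if total == 0:
        return {"resolution_rate": None, "escalation_rate": None, "csat": None}
    scores = [t.csat_score for t in tickets if t.csat_score is not None]
    satisfied = sum(1 for score in scores if score >= 4)
    return {
        "resolution_rate": sum(t.resolved_by_ai for t in tickets) / total,
        "escalation_rate": sum(t.escalated for t in tickets) / total,
        "csat": satisfied / len(scores) if scores else None,
    }
```

Running this on each week’s tickets and comparing against the previous week gives a simple signal for when prompts need adjusting or a model needs retraining.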

Most enterprises take 12-16 weeks to go live. The first 4 weeks are spent gathering data. Skip this, and your system will fail.

Costs, ROI, and Real Numbers

Initial setup costs $15,000-$50,000. That includes model licensing, integration, and training. But the payoff is fast.

Intelliarts’ case study showed a shipping company saved $220,000 a year by automating contract reviews. Shopify reduced multilingual ticket volume by 63%. One company cut agent workload by 45% and raised CSAT from 78% to 86%.

ROI hits in 6-9 months. That’s not speculation; it’s documented. Deloitte’s survey of 300 companies found 68% saw reduced costs, 61% saw better customer satisfaction, and 54% saw faster resolution times.

Compare that to traditional chatbots: they handle only 20-35% of inquiries without human help. LLMs handle 45-65%. The gap isn’t small; it’s massive.

What Goes Wrong, and How to Fix It

Not every LLM project succeeds. Common failures:

- Wrong model for the task: A financial firm routed all billing questions to a general-purpose model. Result? 38% wrong answers. They fixed it by creating a finance-specific model.

- Poor documentation: 63% of users say custom implementations lack clear docs. Use LivePerson or AWS; they’ve got detailed guides.

- Ignoring language variance: Non-English responses vary in quality by 12-18%. Train models on localized examples. Don’t just translate English prompts.

- Over-automation: 29% of customers get frustrated when AI mishandles complex issues. Always have a clear, easy way to reach a human.

The fix? Start small. Pilot on one support channel. Measure. Improve. Then scale.

The Future: AI That Works With Humans

The best systems don’t replace agents; they empower them. MIT Sloan’s study of 150 companies found hybrid models (AI + human assist) boosted agent productivity by 41% and customer satisfaction by 33%.

How? The AI handles the first 80% of the conversation. When it escalates, the agent sees a summary: "Customer is upset about late delivery. LLM tried to offer discount. Customer rejected. Suggested: free shipping next order + apology." The agent doesn’t start from scratch. They just close the loop.
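A handoff summary like that is easy to model as a small record the AI fills in before escalating. The field names and layout below are hypothetical; the point of the sketch is only that the agent receives structured context instead of a raw transcript.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class HandoffSummary:
    issue: str
    attempted: List[str] = field(default_factory=list)
    customer_reaction: str = ""
    suggested_next_step: str = ""

    def render(self) -> str:
        """Format the summary as short lines an agent can scan quickly."""
        attempts = "; ".join(self.attempted) if self.attempted else "nothing yet"
        return "\n".join([
            f"Issue: {self.issue}",
            f"AI attempted: {attempts}",
            f"Customer reaction: {self.customer_reaction or 'unknown'}",
            f"Suggested: {self.suggested_next_step or 'none'}",
        ])


summary = HandoffSummary(
    issue="Customer is upset about late delivery",
    attempted=["offered discount"],
    customer_reaction="rejected",
    suggested_next_step="free shipping next order + apology",
)
print(summary.render())
```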

Gartner predicts 80% of customer service teams will use LLM routing by 2026. Right now, only 15% do. The shift is coming fast.

Companies that wait for perfection will lose. The ones building now (testing, learning, improving) are already saving money and keeping customers happy.

Frequently Asked Questions

How accurate are LLMs in customer support?

For routine questions like billing, returns, or tracking, LLMs achieve 85-95% accuracy when properly trained. For emotional or complex issues, accuracy drops to 65-75%. The key is routing: simple questions go to optimized models, complex ones to advanced ones or humans.

Can LLMs handle multiple languages?

Yes, better than most human teams. Shopify’s system reduced language-related tickets by 63% by using LLMs trained on localized support logs. Microsoft cut non-English resolution times from 24 hours to under 2 hours. Accuracy varies by language, so train models on real data from each region, not just translations.

How much does it cost to implement?

Initial setup ranges from $15,000 to $50,000, depending on complexity. Ongoing costs are mostly token usage. Using RouteLLM-style routing, companies save 45-72% on model costs by using smaller models for simple tasks. ROI typically arrives in 6-9 months through reduced staffing and faster resolution.

Do I need a data scientist to run this?

Not necessarily. You need a prompt engineer to tweak responses, an integration specialist to connect APIs, and a business analyst to track metrics. You don’t need to train models from scratch; most teams use pre-built models fine-tuned on their own data. Cloud platforms like AWS and Azure handle the infrastructure.

What if the AI gives a wrong answer?

All systems make mistakes. The best ones flag low-confidence responses and escalate them to humans. They also log errors to retrain the model. Companies that monitor performance weekly see accuracy improve by 10-15% in the first 3 months. Don’t expect perfection on day one.

Is this GDPR compliant?

Yes, but only if you take steps. 87% of companies handling EU data anonymize customer inputs before sending them to LLMs. Avoid storing raw conversations. Use tokenization and encryption. Always get legal approval before deploying in regulated regions.
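As an illustration of the anonymization step, the sketch below redacts email addresses and phone-like numbers before text leaves your system. The regular expressions are deliberately crude assumptions: they will miss some formats and may also catch long order numbers. Real deployments typically rely on a dedicated PII-detection tool, and this alone does not make a system compliant.

```python
import re

# Simplified patterns for illustration only; not exhaustive.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace emails and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    text = PHONE_RE.sub("[PHONE]", text)
    return text


print(redact("Reach me at jane.doe@example.com or +1 (555) 010-9999."))
# prints: Reach me at [EMAIL] or [PHONE].
```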

Which platforms work best with LLM support?

Zendesk, Salesforce Service Cloud, and HubSpot integrate easily with LLM APIs. AWS, LivePerson, and Portkey offer ready-made solutions with built-in routing. Avoid custom-built systems unless you have a dedicated team; most fail due to poor documentation and lack of maintenance.

Next Steps

If you’re considering LLM support, start here:

- Grab your last 3 months of support tickets. Count how many are repeats.

- Choose one high-volume, low-complexity issue to pilot, like return requests or password resets.

- Use a platform like LivePerson or AWS to test routing without building from scratch.

- Track CSAT and escalation rate for 4 weeks. If accuracy is above 85% and agent workload drops, scale it.
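For the first step, a rough repeat count needs only a list of ticket subjects. The sketch below assumes subjects are available as plain strings and treats two tickets as repeats only when they match exactly after lowercasing and stripping punctuation. That understates the true repeat rate, since differently worded versions of the same question will not be grouped.

```python
import re
from collections import Counter
from typing import List, Tuple


def normalize(subject: str) -> str:
    """Lowercase, drop punctuation, and collapse whitespace."""
    cleaned = re.sub(r"[^a-z0-9 ]+", "", subject.lower())
    return " ".join(cleaned.split())


def repeat_report(subjects: List[str], top_n: int = 5) -> Tuple[float, List[Tuple[str, int]]]:
    """Return the share of tickets that are repeats, plus the most common subjects."""
    counts = Counter(normalize(s) for s in subjects)
    repeated = sum(count for count in counts.values() if count > 1)
    share = repeated / len(subjects) if subjects else 0.0
    return share, counts.most_common(top_n)


share, top = repeat_report([
    "Reset my password",
    "reset my password!",
    "Where is my order?",
    "Refund status",
])
print(f"{share:.0%} of tickets are repeats; most common: {top[0]}")
```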

Don’t try to automate everything. Automate the boring stuff. Let humans do what only humans can: show empathy, handle chaos, and build trust.

vidhi patel

The assertion that LLMs achieve 85–95% accuracy for routine queries is statistically misleading without disclosing confidence intervals, training data provenance, or validation methodology. In enterprise contexts, even a 5% error rate in billing or legal contexts constitutes a material risk. The paper cited lacks peer review, and the Shopify case study omits sample size. This is not optimization-it is premature deployment dressed as innovation.

Priti Yadav

they’re using your data to train models and then selling it back to you as a ‘solution’… you think this is magic? it’s just corporate surveillance with a chatbot mask. they’re not saving money-they’re outsourcing empathy to a bot trained on your tears. next they’ll automate grief counseling. wake up.

Ajit Kumar

It is imperative to recognize that the purported benefits of LLM-driven customer support are predicated upon a series of unverified assumptions, each of which, if unaddressed, renders the entire architecture fundamentally unsound. First, the notion that ‘real logs’ are sufficient for fine-tuning ignores the pervasive presence of annotation bias, linguistic noise, and contextual ambiguity inherent in unstructured customer interactions. Second, the claim that smaller models like Llama 3 8B achieve 92–95% accuracy is contradicted by multiple independent benchmarks from Hugging Face and EleutherAI, which demonstrate a 12–18% variance in non-English contexts. Third, the assertion that ROI is achieved in 6–9 months presumes perfect implementation, zero attrition, and no regulatory penalties-all of which are statistically improbable. The failure modes outlined-such as over-automation and poor documentation-are not anomalies; they are systemic. Without a formal risk assessment framework, this entire paradigm constitutes an unacceptable operational hazard.

Diwakar Pandey

Honestly, I’ve seen this play out in a few small teams I’ve worked with. The magic isn’t in the model-it’s in the workflow. One team started by just feeding their top 10 tickets into a free Mistral model and letting it suggest replies. Agents would edit them, then approve. After two weeks, the model started getting 80% of suggestions right without any fine-tuning. No fancy routing. No $50k budget. Just patience and a willingness to let the bot learn from real humans. The best part? The agents stopped dreading tickets. They started seeing the bot as a co-pilot, not a replacement. If you’re thinking about this, don’t build a spaceship. Just fix the tire first.

Geet Ramchandani

Let’s be real-this whole post is corporate fluff written by someone who’s never handled a real angry customer. ‘CSAT up 8 points’? Cute. That’s a 12-point drop from when they fired the team that knew how to apologize. You think a model can tell when someone’s crying because they lost their job and their package is their only comfort? No. It just sees the word ‘upset’ and triggers a discount code. And then the customer gets a robot saying ‘I’m sorry you feel that way’-which is the most passive-aggressive phrase in human history. You don’t automate empathy. You either have it or you don’t. And if your company thinks a bot can replace that, you’re not saving money-you’re just building a reputation for being soulless. Congratulations.

Pooja Kalra

There is a metaphysical dissonance in the pursuit of efficiency at the expense of human presence. We have replaced the voice that trembled with compassion with the algorithm that calculates sentiment with cold precision. The customer no longer seeks resolution-they seek recognition. And recognition cannot be tokenized, fine-tuned, or routed. The LLM does not hear the silence between words. It does not feel the weight of a lifetime of bureaucratic neglect. To automate support is to automate alienation. The numbers may look good on a dashboard, but the soul of service has been hollowed out. We are not building systems-we are building monuments to our own disconnection.

Sumit SM

Wait-so you’re telling me that if I type ‘I’ve had enough’ into a system, it’s supposed to know I’m not just being dramatic? That’s wild. I’ve said that to my barista when they forgot my oat milk, and they still gave me a free cookie. But a bot? Nah. It’s gonna ping a manager who’s already on their third coffee and 17 tickets deep. And then the customer gets a 30-minute hold. So the AI didn’t fix anything-it just delayed the inevitable. And the worst part? The company thinks they’re ‘optimizing.’ Optimization? Bro. You just made the customer wait longer and feel more ignored. That’s not AI. That’s a middle manager’s PowerPoint slide with a ‘tech’ label slapped on it.

Jen Deschambeault

Just start small. Pick one thing-like password resets-and try it. You don’t need a team. You don’t need a budget. Just use the free tier of AWS Connect or Zendesk’s AI assistant. Watch what breaks. Fix it. Then expand. The tech isn’t the hard part. The fear is.