Imagine talking to a machine that sees what you see, hears what you hear, and responds instantly: not with a delay, but like a person. That’s no longer science fiction. Real-time multimodal assistants powered by large language models are here, and they’re changing how we interact with technology. These systems don’t just read text. They watch video, listen to speech, analyze images, and understand context across all of them at the same time, often responding in under a second. If you’ve ever waited for a chatbot to process an uploaded photo or struggled with a voice assistant that missed the point of your question, you’ve seen the old version. This is the new one.

How These Assistants Actually Work

These aren’t just chatbots with an image-upload button. Real-time multimodal assistants use a single unified model that takes in text, audio, video, and images all at once. They don’t process one input after another; they process them together. Inside, there are three main parts: encoders that turn raw input (text, pixels, audio waveforms) into numerical representations the model can work with, fusion layers that connect the dots between different types of input, and decoders that generate the right kind of response, whether that’s spoken words, written text, or a summary of what’s happening in a live video.
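To make the fusion step more concrete, here is a toy sketch. It assumes the encoders have already turned each input into token vectors of the same length, puts all of those tokens into one sequence (early fusion), and runs plain scaled dot-product self-attention over them so every token can draw on every other modality. Real models use learned query, key, and value projections, many attention heads, and many layers; this sketch skips all of that, and the function names are illustrative, not any vendor’s API.

```python
import math
from typing import List

Token = List[float]  # one embedding vector from a (hypothetical) encoder


def softmax(scores: List[float]) -> List[float]:
    # Subtract the max for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens: List[Token]) -> List[Token]:
    """Scaled dot-product self-attention with Q = K = V = tokens (no learned weights)."""
    d = len(tokens[0])
    scale = math.sqrt(d)
    outputs = []
    for query in tokens:
        # How strongly this token attends to every token in the joint sequence.
        scores = [sum(q * k for q, k in zip(query, key)) / scale for key in tokens]
        weights = softmax(scores)
        # Each output is a weighted mix of all tokens, across all modalities.
        outputs.append([sum(w * tok[j] for w, tok in zip(weights, tokens)) for j in range(d)])
    return outputs


def fuse(text: List[Token], image: List[Token], audio: List[Token]) -> List[Token]:
    # Early fusion: one shared sequence, so attention can link a spoken
    # complaint to a visual cue instead of handling each input separately.
    return self_attention(text + image + audio)


if __name__ == "__main__":
    fused = fuse(text=[[1.0, 0.0]], image=[[0.0, 1.0]], audio=[[0.5, 0.5]])
    for vec in fused:
        print([round(x, 3) for x in vec])
```

Because the attention weights come from a softmax, each fused vector is a weighted average of all the input tokens; in a real model, the learned projections are what decide which cross-modal links matter.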

For example, if you send a photo of a broken appliance and say, “It’s making a loud clicking noise,” the system doesn’t just describe the image. It matches the sound you described with visual cues in the photo, checks them against known failure modes, and tells you the motor bearing is likely worn out. This happens in under 800 milliseconds on consumer hardware.

Leading models like GPT-4o, Gemini 1.5 Pro, and Meta’s Llama 3 multimodal variants all use this architecture. But they’re not the same. GPT-4o handles text in 120ms and images in 450ms. Gemini 1.5 Pro is better with video, processing a 10-minute clip in 750ms, but it’s slower on text. Llama 3 is open-source and flexible, but it’s not as fast. If you’re building something for real-world use, these speed and accuracy trade-offs matter.
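If you want to turn those numbers into a quick sanity check, a lookup table and a filter are enough. This sketch uses only the latency figures quoted in this article plus the 800ms abandonment threshold discussed further on; Llama 3 is left out because no figure is given for it, and you should swap in your own measurements before relying on anything like this.

```python
from typing import Dict, List, Tuple

# (model, modality) -> reported latency in milliseconds, as quoted in this article.
# Illustrative figures only; measure your own workload before deciding.
REPORTED_LATENCY_MS: Dict[Tuple[str, str], int] = {
    ("GPT-4o", "text"): 120,
    ("GPT-4o", "image"): 450,
    ("Gemini 1.5 Pro", "video"): 750,
}


def models_within_budget(modality: str, budget_ms: int = 800) -> List[str]:
    """Return models whose reported latency for `modality` fits the budget."""
    return sorted(
        model
        for (model, mod), latency in REPORTED_LATENCY_MS.items()
        if mod == modality and latency <= budget_ms
    )


if __name__ == "__main__":
    print(models_within_budget("image"))       # image requests under the 800ms threshold
    print(models_within_budget("video", 500))  # a tighter budget for video
```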

Where These Assistants Are Already Making a Difference

Real-time multimodal assistants aren’t just cool demos. They’re being used right now in real businesses.

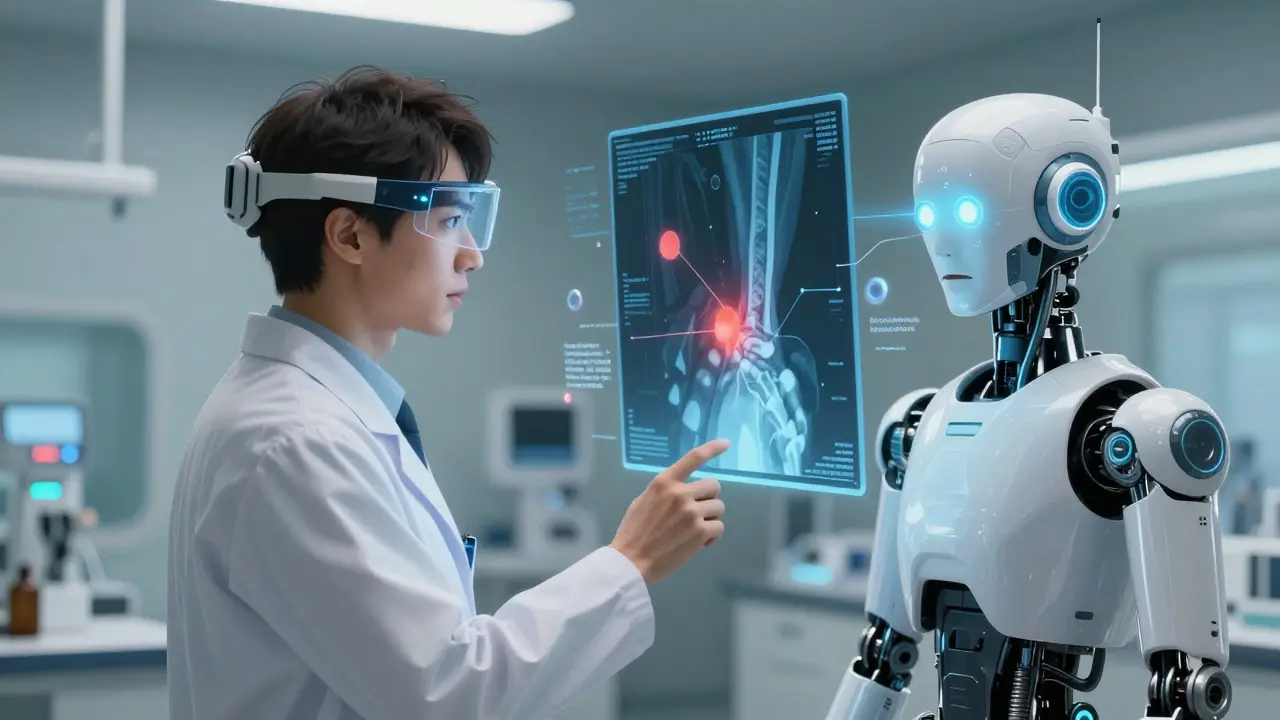

In healthcare, doctors are using them to analyze X-rays while talking to patients. The system listens to the patient’s description of pain, looks at the scan, and highlights possible fractures or abnormalities in real time. A 2024 study at Johns Hopkins showed a 22% increase in diagnostic accuracy when these assistants were used alongside radiologists.

In customer service, companies like Zendesk report a 47% drop in resolution time. Imagine a customer on a video call, holding up a damaged product. The agent doesn’t have to ask them to email a photo or describe the issue. The assistant sees the damage, reads the warranty terms, and suggests a replacement, all while the customer is still talking.

In education, MIT tested these assistants in STEM labs. Students filmed themselves doing experiments and asked questions like, “Why did the solution turn green?” The system analyzed the video, the lab notes, and the spoken question, then gave a step-by-step explanation. Student engagement jumped 38.2%. It’s not replacing teachers. It’s giving them a real-time co-pilot.

Accessibility tools are also improving. Blind users can now point their phone at a street sign, and the assistant reads it aloud immediately. No more waiting for an app to load or a human to respond.

The Hidden Costs and Performance Gaps

But here’s the catch: these systems aren’t perfect. And they’re not cheap.

Accuracy varies wildly by modality. Text generation hits 92.6% accuracy. Image captioning? Only 78.2%. Audio transcription is better than video understanding. On complex video tasks-like following a person’s movements across multiple camera angles-accuracy drops to 62.4%. That’s a problem if you’re using this for autonomous systems or medical diagnostics.

Latency is another issue. Most users will abandon an assistant if it takes longer than 800ms to respond. That’s the threshold. GPT-4o hits 450ms on images. Gemini 1.5 Pro hits 750ms on video. But if the system has to process multiple inputs at once-say, a video call with background noise and someone holding up a document-it can stutter. Reddit users report that systems often prioritize one modality over another. The audio gets processed, but the image lags. Or the video cuts out while the voice keeps going. That’s not seamless. That’s frustrating.
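One way to keep a slow modality from freezing the whole response is to process inputs concurrently under a shared deadline and answer with whatever finished in time. The sketch below shows that idea with Python’s asyncio; `analyze` is a hypothetical stand-in that just sleeps to simulate model latency, and this is one possible mitigation, not how GPT-4o or Gemini actually schedule their inputs.

```python
import asyncio
from typing import Dict, Optional


async def analyze(modality: str, simulated_latency_s: float) -> str:
    # Hypothetical stand-in for a real model call; sleeping simulates processing time.
    await asyncio.sleep(simulated_latency_s)
    return f"{modality}: analyzed"


async def respond_within(deadline_s: float, jobs: Dict[str, float]) -> Dict[str, Optional[str]]:
    """Run every modality concurrently; anything not finished by the deadline is dropped."""
    tasks = {name: asyncio.create_task(analyze(name, latency)) for name, latency in jobs.items()}
    done, pending = await asyncio.wait(list(tasks.values()), timeout=deadline_s)
    for task in pending:
        task.cancel()
    # Let the cancellations settle so nothing keeps running in the background.
    await asyncio.gather(*pending, return_exceptions=True)
    return {name: (task.result() if task in done else None) for name, task in tasks.items()}


if __name__ == "__main__":
    # A 0.8 s budget: audio and image finish, the slow video analysis is skipped.
    results = asyncio.run(respond_within(0.8, {"audio": 0.2, "image": 0.45, "video": 1.5}))
    print(results)
```

The trade-off is explicit here: the user gets a partial answer on time instead of a complete answer late, and the caller can see exactly which modality was dropped.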

And the hardware? You need serious power. Consumer setups need at least 24GB of VRAM. Enterprise versions run on clusters of 4-8 NVIDIA A100 GPUs. That’s not something a small business can afford. Pricing reflects that. GPT-4o bills image input by the token; at an effective cost of $0.012 per image, a company processing 10,000 images a day spends $120 daily, just for images. On-premise licenses start at $250,000 a year.
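The arithmetic behind that estimate is simple, but it’s worth writing down because image input is billed by token and the number of tokens per image depends on resolution. In this sketch, `tokens_per_image` and `usd_per_million_tokens` are placeholders you would fill in from your provider’s current price list; the $0.012-per-image figure is the article’s, not a quoted rate.

```python
def cost_per_image(tokens_per_image: int, usd_per_million_tokens: float) -> float:
    """Effective cost of one image when input is billed per token."""
    return tokens_per_image * usd_per_million_tokens / 1_000_000


def daily_image_cost(images_per_day: int, usd_per_image: float) -> float:
    """Total spend on image input for one day."""
    return images_per_day * usd_per_image


if __name__ == "__main__":
    # Using the article's effective figure of $0.012 per image.
    daily = daily_image_cost(10_000, 0.012)
    print(f"daily: ${daily:,.2f}, yearly: ${daily * 365:,.2f}")
    # prints: daily: $120.00, yearly: $43,800.00
```

Because cost scales with tokens per image, downscaling images before sending them can lower the bill when a provider counts image tokens by resolution.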

Why the Industry Is Hyped, and Why It Should Be Cautious

Gartner puts real-time multimodal assistants at the “Peak of Inflated Expectations.” That’s the phase right before the hype gives way to the “Trough of Disillusionment.” And there’s good reason for caution.

Professor Yoshua Bengio warned about the “illusion of understanding.” These models don’t know what they’re seeing. They’ve seen millions of images labeled “broken chair” and can guess the pattern. But they don’t understand chair mechanics or human intent. In a medical setting, that’s dangerous. A model might confidently say a tumor is benign because it looks like benign tumors in its training data, when it’s actually something rare.

And then there’s the “multimodal gap.” Systems do well on individual inputs. But when you ask them to combine information across modalities-like linking a spoken complaint to a visual symptom and a medical history-they often fail. A 2025 ACM review found that 18.7% of information gets lost when separate models handle each modality. Unified models like GPT-4o cut that to 5.2%. Still, even 5% is too much in critical applications.
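For contrast, here is roughly what the “separate models” setup looks like: each modality gets its own classifier, and their outputs are combined only at the end (late fusion). The labels, probabilities, and weights below are made up for illustration. Because each model scores its own input in isolation, the final step can only average conclusions; it can’t pick up a relationship that only shows up when the sound and the image are considered together, which is one intuition for where the lost information goes.

```python
from typing import Dict

Probs = Dict[str, float]  # label -> probability from one single-modality model


def late_fusion(per_modality: Dict[str, Probs], weights: Dict[str, float]) -> Probs:
    """Weighted average of independent per-modality predictions."""
    labels = set()
    for probs in per_modality.values():
        labels.update(probs)
    total_weight = sum(weights[m] for m in per_modality)
    return {
        label: sum(weights[m] * probs.get(label, 0.0) for m, probs in per_modality.items()) / total_weight
        for label in sorted(labels)
    }


if __name__ == "__main__":
    fused = late_fusion(
        per_modality={
            "audio": {"worn bearing": 0.6, "loose belt": 0.4},  # hypothetical audio-only model
            "image": {"worn bearing": 0.3, "loose belt": 0.7},  # hypothetical image-only model
        },
        weights={"audio": 1.0, "image": 1.0},
    )
    print(max(fused, key=fused.get), fused)
```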

Regulations are catching up. The EU’s AI Act entered into force in August 2024, with obligations phasing in over the following years, and it requires high-risk AI systems, a category that covers many uses of biometric data, to achieve an appropriate and documented level of accuracy. Many current systems barely clear today’s benchmarks, so some products on the market now may struggle to meet that bar in Europe.

What’s Coming Next

Things are moving fast. NVIDIA’s new Blackwell Ultra chips cut latency by 40%. Google’s Gemini 1.5 Flash hits 220ms across all modalities. OpenAI’s rumored GPT-5 could be even faster. Meta is pushing to make multimodal models run on smartphones by late 2025.

The real breakthrough won’t come from bigger models. It’ll come from smarter design. Google’s Project Astra, a research prototype first shown in 2024, aims for sub-100ms responses. That’s faster than typical human reaction time. If they hit it, assistants will feel like they’re thinking with you, not after you.

Standardization is also coming. The W3C formed a working group in late 2024 to create common APIs for multimodal systems. That means developers won’t have to rebuild everything for each vendor. Open-source tools like LLaVA-Next (with 28,400 GitHub stars) are giving smaller teams a shot.

But the biggest change will be in how we use them. Right now, they’re assistants. Soon, they’ll be collaborators. A teacher won’t just get help grading essays; they’ll get a real-time partner that adapts explanations based on a student’s facial expressions and tone. A mechanic won’t just get a diagnosis; they’ll get a live guide that shows them how to fix a part while they’re holding the tool.

Should You Use One Today?

If you’re a developer: Start with Llama 3 multimodal. It’s free, open, and you can test it on a single GPU. Build a simple app, like an image-to-speech tool for elderly users. See where it fails. That’s your learning curve.

If you’re a business: Don’t rush. Give the technology another 6-12 months. The hardware costs are still too high, and accuracy gaps are too risky for customer-facing roles. But if you’re in healthcare or customer service, pilot a system with limited scope. Use it for triage, not diagnosis. Use it to reduce repeat questions, not replace humans.
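“Triage, not diagnosis” can be enforced in code as well as in policy. The sketch below assumes your assistant returns a label with a confidence score between 0 and 1 (a hypothetical `Suggestion` type; real APIs differ, and a model’s self-reported confidence may not be well calibrated). Every case still goes to a person; the score only decides whether the assistant’s suggestion is shown or the case is flagged as uncertain. The 0.85 threshold is an arbitrary placeholder.

```python
from dataclasses import dataclass


@dataclass
class Suggestion:
    label: str
    confidence: float  # hypothetical model-reported score in [0, 1]


def triage(s: Suggestion, threshold: float = 0.85) -> str:
    """Route every case to a human; the threshold only changes what they see first."""
    if not 0.0 <= s.confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if s.confidence >= threshold:
        return f"human review with suggestion: {s.label}"
    return "human review, flagged: assistant unsure"


if __name__ == "__main__":
    print(triage(Suggestion("replace under warranty", 0.92)))
    print(triage(Suggestion("possible fracture", 0.40)))
```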

If you’re a user: Try GPT-4o or Gemini 1.5 Pro. Upload a photo. Speak a question. Watch how it responds. Notice when it gets confused. That’s the real test. The best assistants won’t give you perfect answers. They’ll give you useful ones, fast, and when they’re unsure, they’ll say so.

Real-time multimodal assistants aren’t magic. They’re tools. And like any tool, their value depends on how you use them. The future isn’t about machines that think like us. It’s about machines that work with us: without delay, without confusion, and without pretending they understand more than they do.

What’s the difference between a regular chatbot and a real-time multimodal assistant?

A regular chatbot only handles text. A real-time multimodal assistant processes text, images, audio, and video all at once, and responds almost instantly, often in under a second. It doesn’t wait for you to upload a photo or describe what you see. It sees, hears, and understands everything together.

Can I run a real-time multimodal assistant on my laptop?

You can run lightweight versions like Llama 3 multimodal on a high-end laptop with 24GB of VRAM, but performance will be slow. For true real-time speed (under 800ms), you need a powerful GPU or cloud access. Consumer laptops today aren’t built for this yet.
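A rough way to see why 24GB is the floor: model weights alone take about (number of parameters) × (bytes per parameter). The sketch below applies that rule of thumb. The 20% overhead for activations and caches is an assumed placeholder (real usage depends on context length, batch size, and the runtime), and the 11-billion-parameter size is just an example.

```python
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def estimate_vram_gb(params_billion: float, precision: str = "fp16", overhead: float = 1.2) -> float:
    """Weights-only memory times an assumed overhead factor, in decimal gigabytes."""
    if precision not in BYTES_PER_PARAM:
        raise ValueError(f"unknown precision: {precision}")
    # 1e9 parameters * bytes per parameter / 1e9 bytes per GB
    weights_gb = params_billion * BYTES_PER_PARAM[precision]
    return weights_gb * overhead


if __name__ == "__main__":
    for precision in ("fp16", "int8", "int4"):
        print(precision, round(estimate_vram_gb(11, precision), 1), "GB")
```

Under these assumptions, a model of that size at 16-bit precision overshoots a 24GB card once overhead is included, which is one reason quantized weights are popular on consumer GPUs.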

Which model is the best right now: GPT-4o, Gemini 1.5 Pro, or Llama 3?

It depends on your needs. GPT-4o is fastest overall and most accurate across modalities. Gemini 1.5 Pro handles long videos better. Llama 3 is open-source and cheaper to customize but slower. For most users, GPT-4o gives the best balance of speed and reliability.

Are these assistants safe for medical use?

They can help, but they shouldn’t replace doctors. Studies show they improve diagnostic accuracy by up to 22% when used as a second opinion. But they don’t truly understand biology; they recognize patterns. In high-stakes situations, human judgment is still essential.

Why do these assistants sometimes lag between audio and video?

Because processing video takes longer than audio. Even with fast models, video frames need to be analyzed frame-by-frame, while audio can be processed in real time. If the system can’t sync them perfectly, you get mismatched lip movements or delayed responses. This is still a major technical challenge.
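A common building block for keeping streams in step is timestamp alignment: pair each audio chunk with the video frame closest in time, and flag pairs whose gap exceeds a tolerance so the system can wait, drop, or resync. This sketch assumes both streams carry timestamps in seconds on the same clock; the 0.1 s tolerance is an illustrative choice, not a standard.

```python
import bisect
from typing import List, Optional, Tuple


def nearest_frame(t: float, frame_times: List[float]) -> int:
    """Index of the frame whose timestamp is closest to t (frame_times sorted ascending)."""
    if not frame_times:
        raise ValueError("no video frames")
    i = bisect.bisect_left(frame_times, t)
    if i == 0:
        return 0
    if i == len(frame_times):
        return len(frame_times) - 1
    # Choose between the frame just before t and the one at or after it.
    return i if frame_times[i] - t < t - frame_times[i - 1] else i - 1


def align(audio_times: List[float], frame_times: List[float],
          max_skew_s: float = 0.1) -> List[Tuple[float, Optional[int]]]:
    """Pair audio chunks with frames; None marks a chunk with no frame close enough."""
    pairs = []
    for t in audio_times:
        idx = nearest_frame(t, frame_times)
        in_sync = abs(frame_times[idx] - t) <= max_skew_s
        pairs.append((t, idx if in_sync else None))
    return pairs


if __name__ == "__main__":
    frames = [0.00, 0.04, 0.08]  # 25 fps video that has stalled after 80 ms
    print(align([0.05, 0.50], frames))
```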

What’s the biggest limitation of these systems today?

The biggest limitation isn’t speed or cost; it’s consistency. These models can be brilliant on one input but fail when combining several. They might understand a spoken question and an image separately, but struggle to link them meaningfully. That’s the “multimodal gap,” and it’s the main barrier to true reliability.

Next steps: If you’re exploring this tech, start small. Test one modality at a time. Track where the system fails. Build around its weaknesses, not against them. The future belongs to those who use these tools wisely, not those who believe they’re infallible.
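“Track where the system fails” can start as small as a per-modality tally. This is a hypothetical helper, not part of any framework: record each test case as passed or failed under the modality you were exercising, then look at the worst failure rates first.

```python
from collections import defaultdict
from typing import Dict, List


class FailureLog:
    """Per-modality pass/fail counts for manual or scripted test runs."""

    def __init__(self) -> None:
        self.totals: Dict[str, int] = defaultdict(int)
        self.failures: Dict[str, int] = defaultdict(int)

    def record(self, modality: str, passed: bool) -> None:
        self.totals[modality] += 1
        if not passed:
            self.failures[modality] += 1

    def failure_rate(self, modality: str) -> float:
        total = self.totals.get(modality, 0)
        return self.failures.get(modality, 0) / total if total else 0.0

    def worst_first(self) -> List[str]:
        # Modalities sorted by failure rate, highest first.
        return sorted(self.totals, key=self.failure_rate, reverse=True)


if __name__ == "__main__":
    log = FailureLog()
    for modality, passed in [("image", True), ("image", False), ("audio", True), ("video", False)]:
        log.record(modality, passed)
    for m in log.worst_first():
        print(f"{m}: {log.failure_rate(m):.0%} failed")
```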

Ronak Khandelwal

This is the future, and it’s already here 🌟 I just showed my grandma how to point her phone at a recipe and have it read the ingredients out loud while she cooks, and she cried. Not because it’s perfect, but because it finally *sees* her. We’re not just building tools; we’re building bridges. And that’s beautiful.

Jeff Napier

They’re not assistants; they’re surveillance tools in disguise. GPT-4o listens to your kitchen, sees your face, knows your pain points, and sells it to advertisers. You think this is innovation? It’s behavioral harvesting with a smiley emoji. Wake up.

Sibusiso Ernest Masilela

Let me guess: you think this is ‘groundbreaking’? Please. These models are glorified autocomplete engines trained on scraped internet trash. You call it ‘understanding’? It’s statistical mimicry with a side of corporate hype. If you’re impressed by this, you’ve never read a single paper on cognitive science. Pathetic.

Daniel Kennedy

I’ve tested this with my students who have dyslexia. The system reads handwritten notes from a photo, explains the math, and speaks it back in a calm voice. One kid said, ‘I finally feel like I’m not broken.’ That’s not tech; that’s dignity. Yes, it’s flawed. Yes, it’s expensive. But when it works? It changes lives. Don’t throw the baby out with the bathwater.

Taylor Hayes

I appreciate the depth here. The real win isn’t speed or accuracy; it’s the shift from ‘user asks, system answers’ to ‘system anticipates, collaborates, adapts.’ That’s the quiet revolution. But I agree with the concerns about latency gaps. I’ve seen systems drop video frames during live demos and it breaks the flow. The UX isn’t seamless yet. We need better sync protocols, not just bigger GPUs.

Sanjay Mittal

Llama 3 multimodal runs fine on my RTX 4090. No cloud needed. I built a tool for farmers to photograph crop damage and get instant pest ID + organic remedy suggestions. Accuracy isn’t perfect, but it’s better than Google searches. If you’re in India or Africa, this is a game-changer. Stop waiting for GPT-5.

Mike Zhong

You think the ‘multimodal gap’ is the problem? It’s not. The real issue is that these models have zero ontology. They don’t know what a ‘chair’ is; they know the statistical correlation between ‘four legs’, ‘wood’, and ‘sitting’. They don’t understand causality. They don’t understand time. They don’t understand *being*. Calling this ‘intelligence’ is the greatest semantic fraud of the decade.

Jamie Roman

I’ve been playing with Gemini 1.5 Pro on my 3090 and it’s… weird. Like, it gets the gist of a video but sometimes ignores the most obvious thing, like a person waving for help in the corner of the frame. It’s not dumb, it’s distracted. Like a genius who can’t focus. And the audio-video sync? Sometimes the voice says ‘turn left’ but the video still shows the car going straight for a full second. It’s not lag; it’s disconnection. I think the fusion layers are still just patching things together, not truly integrating. We need neural architectures that treat modalities as dimensions of a single perception, not separate inputs. Maybe that’s what Astra’s doing. I hope so.