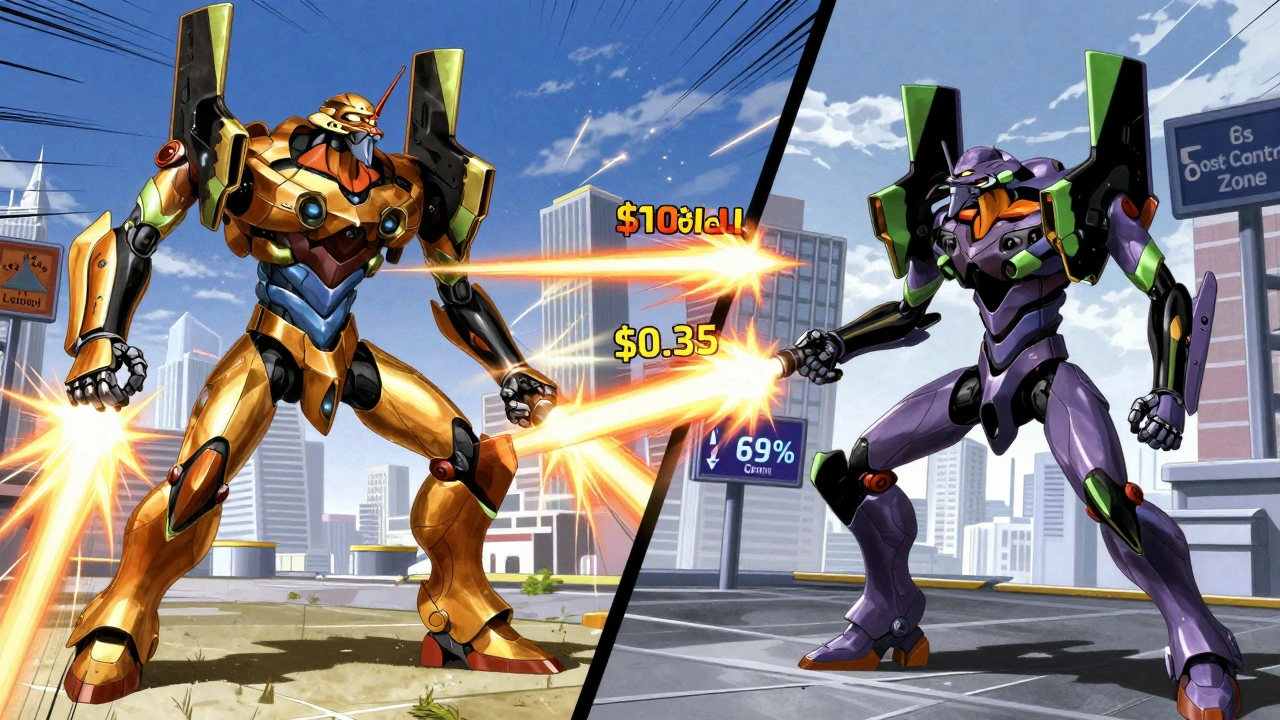

Latency and cost aren’t afterthoughts anymore: they’re the make-or-break factors in LLM deployments

If you’re still judging a large language model (LLM) only by how accurate its answers are, you’re already behind. In 2025, enterprises don’t just want correct responses. They want them fast, and they want them cheap. The days of treating latency and cost as secondary concerns are over. Today, these two metrics are as critical as accuracy, sometimes even more so.

Why? Because users won’t wait. A 2.5-second delay in a customer service chatbot? That’s a 68% drop-off rate, according to Deepchecks’ 2024 report. And if your monthly LLM bill spikes from $5,000 to $20,000 because you didn’t optimize token usage? That’s not a technical issue; it’s a business failure.

Companies like Shopify, Anthropic, and Meta have already learned this the hard way. They moved from experimenting with LLMs to running them at scale, and suddenly accuracy alone meant nothing. If the model answers right but takes too long or costs too much, it’s unusable. That’s why latency and cost are now first-class metrics in LLM evaluation. Not add-ons. Not nice-to-haves. Core requirements.

What exactly is latency in LLMs, and why does it break user experience?

Latency isn’t just “how long it takes to reply.” It’s broken into three precise parts, each with its own impact:

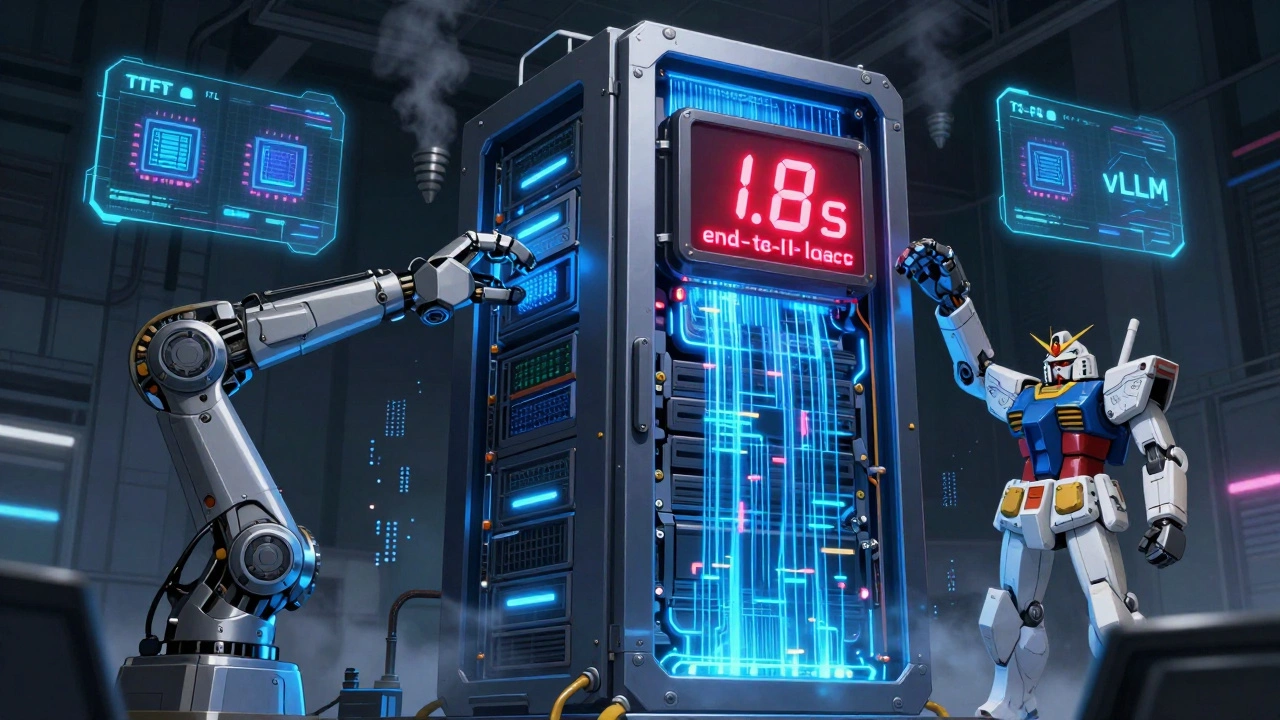

- Time-to-First-Token (TTFT): The delay between when you send a prompt and when the first word appears. For a 7B-parameter model on an A100 GPU, this should be under 500ms. Above 1 second? Users start to feel like the system is broken.

- Inter-Token Latency (ITL): The time between each token that follows. At 80ms per token, a 20-token response takes 1.6 seconds for generation alone. That’s too slow for real-time chat.

- End-to-End Latency: The full journey, from your click to the last character delivered. This includes network lag, queuing, and model processing. For consumer apps, anything over 2 seconds is a dealbreaker.
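The three metrics above can be captured from any streaming response by timestamping each token as it arrives. The following is a minimal sketch, not a production harness: `measure_stream` and `LatencyReport` are hypothetical names, and the stream is assumed to be any iterable that yields tokens as the model produces them. Measured on the client side, the numbers include network and queuing time.

```python
import time
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass
class LatencyReport:
    ttft_s: float        # request start -> first token
    mean_itl_s: float    # mean gap between consecutive tokens
    end_to_end_s: float  # request start -> last token
    tokens: int          # number of tokens received


def measure_stream(
    stream: Iterable[str],
    clock: Callable[[], float] = time.perf_counter,
) -> LatencyReport:
    """Consume a token stream and record the arrival time of every token.

    `clock` is injectable so the function can be tested without sleeping.
    """
    start = clock()
    arrivals: List[float] = []
    for _ in stream:
        arrivals.append(clock())
    if not arrivals:
        raise ValueError("stream produced no tokens")

    # Gaps between consecutive arrivals are the per-token ITL samples.
    gaps = [later - earlier for earlier, later in zip(arrivals, arrivals[1:])]
    return LatencyReport(
        ttft_s=arrivals[0] - start,
        mean_itl_s=sum(gaps) / len(gaps) if gaps else 0.0,
        end_to_end_s=arrivals[-1] - start,
        tokens=len(arrivals),
    )
```

In practice the `stream` argument would be whatever iterator your client library returns for a streaming request. Collect one report per request and aggregate them, rather than relying on a single run.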

Dr. Sarah Guo from Conviction Partners put it bluntly: “Any LLM deployment exceeding 2 seconds end-to-end latency fails the basic usability test for consumer applications, regardless of accuracy metrics.”

Real-world examples prove this. One Reddit user running Llama-2-70B on four A100s saw TTFT hit 1.2 seconds during peak hours. Customers abandoned the chatbot. They fixed it with caching, but then ran into stale data. Another team switched from GPT-4 to a fine-tuned Llama-3-8B. Accuracy dropped slightly, but latency fell from 850ms to 320ms. User satisfaction jumped from 68% to 89%.

It’s not just about speed. It’s about predictability. If latency spikes unpredictably when traffic hits 70% GPU usage (a phenomenon called a “latency cliff”), you lose control. That’s why enterprises now monitor latency at three levels: token-level (5-10ms granularity), model-level (batch throughput), and application-level (real user experience).

Cost isn’t just about token pricing: it’s about hidden infrastructure traps

Most people think cost means “$0.03 per 1K tokens” from OpenAI. But that’s just the tip of the iceberg.

Here’s what actually adds up:

- Token volume: GPT-4-turbo charges $10 per million tokens. Claude-3-Opus? $15. Mistral-7B? Just $0.35. Self-hosted Llama-3-70B? Around $0.80. The difference isn’t marginal: at these prices, the cheaper options cost roughly 12x to 43x less.

- Hardware efficiency: A 70B model uses 3.7x more GPU memory than a 13B model. If you’re using A100s (40GB VRAM), you’ll need model sharding. H100s (80GB VRAM) handle it better, but cost more.

- Memory waste: Naive inference setups use only 40-60% of GPU memory. Tools like vLLM’s PagedAttention boost that to 75-85%. That’s 30% less hardware needed for the same output.

- Scaling surprises: A RAG system making 100 queries per second, each generating 10 tokens? That’s 1,000 tokens per second, or 3.6 million tokens per hour. At $0.03 per 1K tokens, that’s $108/hour, $2,592/day, and $77,760 over a 30-day month. And that’s just one use case.
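The scaling arithmetic is easy to get wrong by hand, so it helps to script it. Here is a small sketch with hypothetical helper names, assuming the load is sustained around the clock and a month has 30 days. At 100 queries per second and 10 tokens per query, it gives 3.6 million tokens per hour.

```python
SECONDS_PER_HOUR = 3600


def tokens_per_hour(queries_per_second: float, tokens_per_query: float) -> float:
    """Tokens generated in one hour of sustained traffic."""
    return queries_per_second * tokens_per_query * SECONDS_PER_HOUR


def cost_usd(tokens: float, price_per_1k_tokens: float) -> float:
    """Cost of a token volume at a per-1K-token price."""
    return tokens / 1000 * price_per_1k_tokens


hourly = cost_usd(tokens_per_hour(100, 10), price_per_1k_tokens=0.03)
daily = hourly * 24    # assumes constant load all day
monthly = daily * 30   # assumes a 30-day month

print(f"${hourly:,.0f}/hour")    # $108/hour
print(f"${daily:,.0f}/day")      # $2,592/day
print(f"${monthly:,.0f}/month")  # $77,760/month
```

Swapping in your own traffic profile and per-token price turns this into a quick sanity check before committing to a provider.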

AIMultiple’s 2024 survey found that 73% of enterprises cited “cost unpredictability” as their top concern. OpenAI’s June 2024 rate hike caught 32% of developers off guard. One company on HackerNews said their GPT-4 bill hit $15,000/month. They switched to a smaller model and cut costs to $1,500. Accuracy dropped 5%, but user complaints didn’t rise. The math worked.

Self-hosting isn’t always cheaper, but it’s more controllable. Azure OpenAI offers 350ms TTFT and 99.95% uptime, but at $0.03 per 1K tokens. Self-hosting Mixtral-8x7B on AWS costs $0.008 per 1K tokens, but latency swings from 400ms to 800ms depending on load. You trade consistency for savings.

How top companies optimize for both speed and cost

There’s no single fix. The best teams use a mix of strategies:

- Caching: Store common responses. Shopify reduced redundant calls by 50% using a smart cache layer. But beware: cached answers can become outdated. You need TTLs and invalidation triggers.

- Batching: Group multiple requests together. Increasing batch size from 1 to 8 can boost token throughput by 300%. But it also increases TTFT by 150%. You need to find the sweet spot.

- Model compression: 4-bit quantization cuts VRAM needs by 57% with less than 1% accuracy loss, according to Qwen’s 2024 paper. It’s not magic, but it’s close.

- Smart routing: Send simple questions to tiny models (like Mistral-7B), complex ones to bigger ones. Anthropic reported 35% cost savings using this approach.

- Hardware tuning: vLLM outperforms Hugging Face’s Text Generation Inference by 25% in TPS on identical hardware. NVIDIA’s TensorRT-LLM 0.12, released in December 2024, adds 40% faster token generation using speculative decoding.
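The caching strategy above, with TTLs and explicit invalidation, can be sketched in a few dozen lines. This is an illustrative in-memory version, not a description of any company's cache layer: `TTLCache`, `answer`, and `call_model` are hypothetical names, and the key normalization (lowercasing and collapsing whitespace) is an assumption that only catches near-identical prompts.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class TTLCache:
    """In-memory response cache whose entries expire after `ttl_seconds`."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._ttl = ttl_seconds
        self._clock = clock
        self._store: Dict[str, Tuple[float, str]] = {}

    @staticmethod
    def _key(prompt: str) -> str:
        # Assumption: case and extra whitespace do not change the answer.
        return " ".join(prompt.lower().split())

    def get(self, prompt: str) -> Optional[str]:
        key = self._key(prompt)
        entry = self._store.get(key)
        if entry is None:
            return None
        stored_at, response = entry
        if self._clock() - stored_at >= self._ttl:
            del self._store[key]  # expired: drop it and report a miss
            return None
        return response

    def put(self, prompt: str, response: str) -> None:
        self._store[self._key(prompt)] = (self._clock(), response)

    def invalidate(self, prompt: str) -> None:
        """Invalidation trigger, e.g. when the underlying policy text changes."""
        self._store.pop(self._key(prompt), None)


def answer(prompt: str, cache: TTLCache, call_model: Callable[[str], str]) -> str:
    """Serve from the cache when possible; otherwise call the model and store."""
    cached = cache.get(prompt)
    if cached is not None:
        return cached
    response = call_model(prompt)
    cache.put(prompt, response)
    return response
```

A short TTL limits how stale an answer can get, and `invalidate` covers the case where the source data changes before the TTL runs out. A shared store would be needed once more than one process serves traffic.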

One case study from Reddit’s r/LocalLLaMA showed a customer service bot going from 850ms latency to 320ms after switching to vLLM. User satisfaction jumped 21 percentage points. That’s not a tweak; it’s a transformation.

But here’s the trap: optimizing for speed can hurt quality. Galileo’s 2024 guide warns that cutting latency by 50% via aggressive quantization might degrade perplexity by 15-20%. You get fast answers, but wrong ones. That’s a false economy.

What tools and frameworks are actually used in production?

Enterprise teams don’t guess. They measure. Here’s what’s in use:

- For latency monitoring: Prometheus + Grafana (open-source, low overhead) or Arize AI (high-end, $5K/month minimum). Datadog APM scores 4.6/5 for cost tracking but adds 200ms of its own overhead.

- For inference engines: vLLM (best for high-throughput, batched workloads), Text Generation Inference (better for sporadic traffic), and NVIDIA Triton (enterprise-grade, supports multiple model formats).

- For cost tracking: Sematic (founded 2022, raised $22M) specializes in real-time cost alerts. Google Cloud’s “Latency Shield” auto-routes traffic to faster regions.

- For hardware: H100 GPUs dominate for large models. But A100s are still widely used. NVIDIA’s acquisition of OctoML in August 2024 signals a push to bake latency optimization into every layer of the stack.

And the market is exploding. Gartner projects the LLM performance monitoring market will hit $2.1 billion by 2026, up from $320 million in 2024. That’s more than a sixfold increase in two years, or a compound annual growth rate of roughly 156%. Companies aren’t just buying models. They’re buying performance guarantees.

Regulations, standards, and what’s coming next

It’s not just businesses driving change. Regulations are catching up.

The EU AI Act, effective February 2025, requires “reasonable response times” for high-risk applications. For medical triage bots, that means under 2 seconds. Financial services in the U.S. and EU now demand SLAs with latency guarantees. Fortune 500 companies include these in contracts-89% do, according to Forrester.

Looking ahead:

- Hardware: Cerebras’ CS-3 wafer-scale chip (shipping Q2 2025) promises sub-100ms TTFT for 100B+ models.

- Algorithms: MIT is researching “latency-aware training”: training models to be fast from the start, rather than fixing speed later.

- Standards: ISO is expected to release “LLM Performance Benchmarking Standard 27001” by Q3 2026. Expect formal definitions for TTFT, TPS, and cost-per-quality-unit.

Meanwhile, Anthropic’s Dario Amodei warned at AWS re:Invent 2024: “Without fundamental algorithmic improvements, cost scaling will limit LLM deployment growth to 20% annually versus the 200% seen in 2023.”

The future isn’t about bigger models. It’s about smarter, leaner, faster ones.

Final takeaway: Stop evaluating models in a vacuum

Don’t ask: “Which model has the highest accuracy?”

Ask:

- What’s the TTFT under real load?

- How much does a million tokens cost on my hardware?

- Can I cache 40% of responses without losing context?

- Will this scale to 1,000 requests per minute without breaking?

- What’s my ROI if I trade 3% accuracy for 80% lower cost?

Professor Percy Liang of Stanford put it best: “Cost-per-quality-unit should be the primary metric for enterprise LLM selection, not raw accuracy.”
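The article does not define "cost-per-quality-unit", so the sketch below takes one plausible reading: cost per correct answer, meaning monthly spend divided by the number of requests answered correctly. The function name, the request volume, and the baseline accuracy are all assumptions made for illustration. Only the $15,000 and $1,500 monthly bills come from the example earlier in this post, and the 5% accuracy drop is read here as five percentage points.

```python
def cost_per_correct_answer(
    monthly_cost_usd: float,
    monthly_requests: int,
    accuracy: float,
) -> float:
    """One possible 'cost-per-quality-unit': dollars per correctly answered request."""
    if monthly_requests <= 0:
        raise ValueError("monthly_requests must be positive")
    if not 0 < accuracy <= 1:
        raise ValueError("accuracy must be in (0, 1]")
    return monthly_cost_usd / (monthly_requests * accuracy)


REQUESTS = 1_000_000  # assumed volume, not from the article

# Assumed accuracies: 90% baseline, 85% after switching to the smaller model.
large = cost_per_correct_answer(15_000, REQUESTS, accuracy=0.90)
small = cost_per_correct_answer(1_500, REQUESTS, accuracy=0.85)

print(f"large model: ${large:.4f} per correct answer")
print(f"small model: ${small:.4f} per correct answer")
print(f"ratio: {large / small:.1f}x")
```

Under these assumptions the smaller model stays roughly nine times cheaper per correct answer even after the accuracy drop. Whether that trade is acceptable still depends on what a wrong answer costs in your domain.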

Accuracy matters. But if your model can’t answer in under a second, or if it costs $10,000 a month to run, it’s not a solution. It’s a liability.

LLMs are no longer science experiments. They’re production systems. And production systems run on speed, cost, and reliability. Measure them like it.

What’s the acceptable latency for a customer-facing LLM chatbot?

For consumer applications, end-to-end latency should stay under 2 seconds. For optimal satisfaction, aim for under 1.5 seconds. Time-to-first-token (TTFT) should be under 500ms. Above 2 seconds, user drop-off rates jump sharply, by as much as 68% according to Deepchecks’ 2024 data. Enterprise chatbots serving high-value customers often target sub-1-second responses.

Is self-hosting cheaper than using cloud APIs like OpenAI?

It depends on volume and scale. For low usage (under 100K tokens/month), cloud APIs are simpler and often cheaper due to no infrastructure overhead. But at scale (say, 500K+ tokens/day), self-hosted models like Llama-3-70B or Mistral-7B can be 10x to 40x cheaper. For example, GPT-4-turbo costs $10 per million tokens. Self-hosted Llama-3-70B runs at around $0.80 per million tokens. The tradeoff is latency variability and engineering effort.

How do I measure latency accurately in my LLM system?

Track three key metrics: Time-to-First-Token (TTFT), Inter-Token Latency (ITL), and End-to-End Latency. Use tools like Prometheus with custom exporters or dedicated platforms like Arize AI or Datadog. Measure at the application level (user experience), not just the model level. Include network delays, queuing, and preprocessing time. Avoid measuring only on idle hardware; test under realistic load.

Can I reduce cost without hurting accuracy too much?

Yes, and many teams do it successfully. Use 4-bit quantization: it cuts VRAM usage by 57% with under 1% accuracy loss. Switch to smaller models like Mistral-7B or Llama-3-8B for simple tasks. Implement caching for common queries. Use smart routing to send easy prompts to lightweight models. One company reduced costs by 37% with caching and only saw a 2% accuracy dip. The key is testing: measure accuracy before and after each optimization.
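Smart routing can start out very simple. The sketch below uses a crude heuristic (prompt length plus a handful of keyword markers) to choose between a "small" and a "large" model tier. The tier names, the 30-word threshold, and the marker list are hypothetical placeholders, and production routers often use a trained classifier instead.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Route:
    model: str   # "small" or "large" (placeholder tier names)
    reason: str  # why the router chose this tier, useful for logging


def route_prompt(
    prompt: str,
    max_simple_words: int = 30,
    complex_markers: Sequence[str] = ("explain why", "compare", "step by step", "analyze"),
) -> Route:
    """Send short, plain prompts to the small tier and everything else to the large one."""
    text = prompt.lower()
    for marker in complex_markers:
        if marker in text:
            return Route("large", f"contains '{marker}'")
    word_count = len(text.split())
    if word_count > max_simple_words:
        return Route("large", f"{word_count} words exceeds {max_simple_words}")
    return Route("small", "short prompt with no complexity markers")


print(route_prompt("What's my return policy?").model)                 # small
print(route_prompt("Compare plan A and plan B step by step.").model)  # large
```

Logging the `reason` alongside accuracy and latency per tier makes it possible to check whether the routing rule is costing quality, in line with the measure-before-and-after advice above.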

What’s the biggest mistake companies make with LLM cost and latency?

They optimize one metric at the expense of the other. For example, aggressively quantizing a model to cut latency might drop accuracy by 15-20%, making responses unreliable. Or they use a huge model for simple tasks, blowing up costs. Another common error: ignoring the difference between average and p99 latency. A model might average 400ms, but spike to 3 seconds during peak load, and that’s what users experience. Always measure worst-case scenarios.
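The gap between average and p99 latency is easy to see with a few lines of code. The sketch below uses the nearest-rank percentile method on a synthetic sample in which 98 of 100 requests take 400ms and 2 take 3 seconds. The sample is invented for illustration, not measured data.

```python
import math
from statistics import mean
from typing import Sequence


def percentile(samples: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile: smallest sample with at least pct% of data at or below it."""
    if not samples:
        raise ValueError("samples must not be empty")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


# Synthetic latencies in milliseconds: mostly fast, with a slow tail.
latencies_ms = [400.0] * 98 + [3000.0] * 2

print(f"mean: {mean(latencies_ms):.0f} ms")            # mean: 452 ms
print(f"p50:  {percentile(latencies_ms, 50):.0f} ms")  # p50:  400 ms
print(f"p99:  {percentile(latencies_ms, 99):.0f} ms")  # p99:  3000 ms
```

The mean barely moves, while the p99 shows the 3-second responses that the slowest requests actually see. Dashboards and alerts should therefore track tail percentiles as well as averages.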

Are there any regulations around LLM latency and cost?

Yes. The EU AI Act, effective February 2025, requires “reasonable response times” for high-risk applications like medical triage bots, interpreted as under 2 seconds. Financial institutions in the U.S. and EU now demand latency SLAs in vendor contracts. While there’s no direct regulation on cost yet, transparency requirements are growing. Companies must be able to explain and justify their LLM spending, especially in regulated industries.

sonny dirgantara

lol i just tried running llama3 on my laptop and it froze for 3 mins. guess im not ready for production lol

Jawaharlal Thota

I've been through this exact journey with our customer support bot. We started with GPT-4 because everyone said it was the best, but our users were leaving after 3 seconds. We switched to Mistral-7B with 4-bit quantization and vLLM, cut our monthly bill from $18k to $1.2k, and guess what? User satisfaction went UP. People don't care if the answer is perfect if it takes forever. They care if it helps them right now. We added caching for common questions like 'what's my return policy?' and that alone saved us 40% in token costs. The key isn't having the biggest model-it's having the right one for the job. And yes, accuracy dipped a little, but not enough to matter. Our CSAT scores actually improved because responses felt faster, more human. Stop chasing benchmarks. Chase user retention.

Lauren Saunders

Honestly, this entire post reads like a vendor whitepaper masquerading as insight. Latency? Cost? Please. You're just afraid of the real problem: LLMs are still fundamentally broken. They hallucinate. They're brittle. You can optimize every millisecond and save every dollar, but if the output is garbage, none of it matters. And don't even get me started on 'smart routing'-that's just a band-aid on a hemorrhage. The real innovation isn't in inference engines-it's in rethinking why we're using LLMs at all in the first place.

Gina Grub

I saw a company burn $200k last quarter because they used GPT-4 for FAQ bots. They didn't even measure p99 latency. Their system would work fine until 5pm when everyone logged off and then-BAM-3 second delays. Customers screamed. Legal got involved. HR had to do damage control. This isn't tech. This is negligence wrapped in a Python script.

Kendall Storey

You're all missing the point. It's not about choosing between speed and cost-it's about building systems that adapt. Use quantized models for simple queries. Route complex ones to bigger models. Cache what you can. Monitor p99, not average. And for god's sake, test under real load-not your dev machine. This isn't theory. It's operations. And if you're not measuring your latency and cost like you measure your KPIs, you're flying blind. The tools are here. The data is here. Stop overthinking and start optimizing.

Rae Blackburn

Wait… so you're telling me OpenAI secretly wants us to self-host so they can sell us more H100s? And that the EU AI Act is just a cover for NVIDIA to monopolize the market? I read somewhere that all these 'cost savings' are just a trap to make us dependent on AWS. Who benefits? Who really benefits?

Andrew Nashaat

I can't believe people are still falling for this 'quantization doesn't hurt accuracy' myth. You're lying to yourselves. 4-bit? Sure, it's fast-but you're trading coherence for speed. I've seen models output 'The capital of France is Berlin' with 95% confidence after quantization. And you call that acceptable? You're not saving money-you're creating legal liability. And don't even get me started on 'smart routing'-you think a 7B model can handle medical triage? That's not optimization-that's malpractice. If you're not using GPT-4-turbo for anything serious, you're gambling with people's lives. And you wonder why trust in AI is declining?

LeVar Trotter

This is one of the most practical breakdowns I've seen on this topic. Seriously, props to the author. I run a small SaaS with 50K daily users, and we made the same switch: GPT-4 → Llama-3-8B + vLLM + caching. Our latency dropped from 800ms to 280ms. Our monthly cost went from $14k to $980. And our support tickets? Down 22% because users weren't frustrated waiting. We did A/B testing. We tracked drop-off rates. We didn't assume-we measured. The myth that bigger = better is dead. Lean, fast, and reliable wins every time. If you're still using raw GPT-4 for simple tasks, you're not being innovative-you're being wasteful. And it's not just about money-it's about respect for your users' time.

Tyler Durden

I think we need to talk about something no one's saying: the emotional impact of latency. It's not just numbers. When someone asks a question and waits 3 seconds for an answer, they start doubting themselves. Did I word it wrong? Is the system broken? Is it even listening? That doubt lingers. When the answer comes fast-even if it's slightly less perfect-they feel heard. That's the real metric. I used to work in call centers. The best reps didn't have the best answers-they had the fastest responses. Same here. Speed isn't technical-it's psychological. And if you're not designing for that, you're designing for failure. We started measuring user sentiment alongside latency. The correlation was terrifying. Slow = anxious. Fast = trusted. It's not a coincidence. It's human nature.