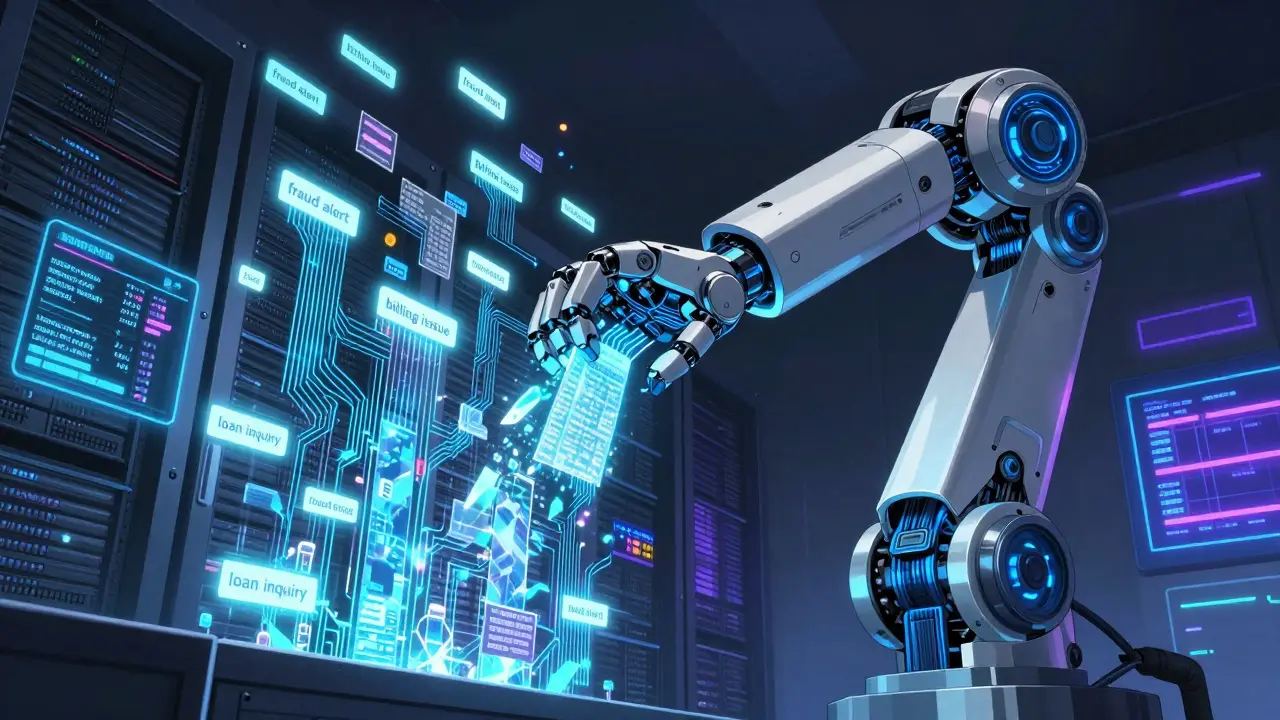

Imagine you work at a bank that handles thousands of customer chat logs every day. Each message holds clues: some customers are frustrated, others are asking about loan terms, and a few are reporting suspicious activity. Manually reading and tagging each one? It would take months. Now imagine an AI that reads all of them in hours, tags each one correctly, and even spots patterns humans miss. That’s not science fiction. It’s what happens when you use LLMs for data extraction and labeling.

Why Traditional Labeling Doesn’t Scale

For years, companies relied on teams of human annotators to label text data. A team might spend weeks tagging customer emails as "complaint," "inquiry," or "payment confirmed." They’d use tools like Labelbox or Prodigy, and the cost added up fast. At $5-$10 per hour per annotator, labeling 100,000 documents could cost over $25,000. And even then, consistency was a problem. One person might tag "I need help with my bill" as a complaint, while another called it an inquiry.

The real bottleneck wasn’t just labor; it was speed. Machine learning models need thousands, sometimes millions, of labeled examples to learn well. Waiting months for labels meant delaying product launches, missing market windows, and losing competitive edge.

Enter LLMs. Models like GPT-4o, Claude 3.5 Sonnet, and Llama 70B don’t just understand language; they can be instructed to extract, classify, and label with precision. They don’t get tired. They don’t miss patterns. And once you set up the prompt correctly, they can process 10,000 documents in under 10 minutes.

How LLMs Turn Text into Structured Data

LLMs don’t magically know what to label. You have to teach them. Here’s how it works in practice:

- Pick your task: Are you extracting names from contracts? Classifying support tickets? Pulling drug names from medical notes? The goal shapes everything.

- Write the prompt: This is where most teams fail. A vague prompt like "Tag the sentiment" gives messy results. A good one says: "Classify this customer message into one of these categories: refund request, billing issue, technical support, or positive feedback. Return only the category name, exactly as written above. Here are two examples: 'I can't log in' → technical support; 'Great service!' → positive feedback. Now classify: 'My order never arrived.'"

- Format the output: Ask for JSON. Always. Example: {"label": "billing issue", "confidence": 0.94}. This makes it easy to import into databases or labeling platforms.

- Run it at scale: Use an API (OpenAI, Anthropic, or a self-hosted Llama model) to send batches of text. Most models handle 10,000+ tokens per request, so you can send dozens of documents in one call.

- Validate with humans: Don’t trust the LLM blindly. Take 5-10% of the output and have a human review it. If accuracy is below 90%, tweak the prompt or add more examples.
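The prompt and output-format steps above can be sketched in a few lines of Python. This is a minimal sketch, not a definitive implementation: `build_prompt`, `parse_label`, and `call_llm` are hypothetical names, and `call_llm` is a stub that returns a canned reply so the example runs without any provider account. In a real pipeline you would replace it with your provider's client.

```python
import json
from typing import Optional

CATEGORIES = ["refund request", "billing issue", "technical support", "positive feedback"]

# Few-shot examples taken from the prompt described in the list above.
EXAMPLES = [
    ("I can't log in", "technical support"),
    ("Great service!", "positive feedback"),
]


def build_prompt(message: str) -> str:
    """Assemble a prompt with the categories, the examples, and a JSON output rule."""
    example_lines = "\n".join(f"'{text}' -> {label}" for text, label in EXAMPLES)
    return (
        "Classify this customer message into one of these categories: "
        + ", ".join(CATEGORIES) + ".\n"
        + 'Return only valid JSON with keys "label" and "confidence".\n'
        + f"Examples:\n{example_lines}\n"
        + f"Now classify: '{message}'"
    )


def parse_label(raw: str) -> Optional[dict]:
    """Return the parsed result, or None if the reply is not usable."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return None  # the model answered with prose instead of JSON
    if not isinstance(data, dict) or data.get("label") not in CATEGORIES:
        return None  # wrong shape, or a label outside the allowed set
    confidence = data.get("confidence")
    if isinstance(confidence, bool) or not isinstance(confidence, (int, float)):
        return None
    if not 0 <= confidence <= 1:
        return None
    return {"label": data["label"], "confidence": float(confidence)}


def call_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real API call (assumption, not a real client)."""
    return '{"label": "billing issue", "confidence": 0.94}'


result = parse_label(call_llm(build_prompt("My order never arrived.")))
print(result)
```

Rejecting anything that is not valid JSON with an allowed label keeps malformed replies out of the downstream database. Rejected items can be retried or sent to the human review sample.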

This process cuts labeling time by 10x to 100x. A pharmaceutical company I worked with used this to extract 400,000 drug-adverse event pairs from clinical notes. What took 18 months manually? Done in 11 days with LLMs.

Real-World Use Cases

LLMs aren’t just for one type of data. They’re being used across industries:

- Banking: Classifying chatbot messages into 12 categories like "fraud alert," "account freeze," or "interest rate inquiry." One U.S. bank reduced manual review by 87%.

- Healthcare: Pulling patient conditions, medications, and symptoms from doctor’s notes. Used to build real-time risk dashboards for chronic disease patients.

- Legal & Compliance: Extracting clauses from contracts: termination rights, liability limits, renewal terms. SEC filings are now processed with LLMs that parse narrative sections and XBRL tables together.

- E-commerce: Automatically tagging product reviews by sentiment, feature mentioned (e.g., "battery life," "screen quality"), and purchase intent.

- Media & Research: Summarizing news articles, extracting key entities (people, organizations, locations), and building knowledge graphs from academic papers.

Take a company analyzing thousands of lease agreements. Each document has 15+ fields: rent amount, due date, security deposit, renewal terms. Before LLMs, they hired 3 people to read each one. Now, they use a fine-tuned LLM to extract all fields in JSON format. Human reviewers only check the 5% that the model flags as low confidence.
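The review step in the lease example can be sketched as a simple routing function. This is an illustration under stated assumptions: the record fields, the `route` helper, and the 0.80 threshold are all hypothetical, and a model's self-reported confidence is not guaranteed to be calibrated, so the cutoff should be tuned against human-checked samples.

```python
REVIEW_THRESHOLD = 0.80  # assumed cutoff; tune it on your own validation data


def route(records, threshold=REVIEW_THRESHOLD):
    """Split extracted records into auto-accepted and human-review queues."""
    accepted, needs_review = [], []
    for record in records:
        confidence = record.get("confidence")
        # A missing confidence is treated the same as a low one.
        if confidence is None or confidence < threshold:
            needs_review.append(record)
        else:
            accepted.append(record)
    return accepted, needs_review


# Hypothetical extraction output for three lease agreements.
records = [
    {"doc_id": "lease-001", "rent_amount": 2400, "confidence": 0.97},
    {"doc_id": "lease-002", "rent_amount": 1850, "confidence": 0.62},
    {"doc_id": "lease-003", "rent_amount": None},
]
accepted, needs_review = route(records)
print(len(accepted), "accepted,", len(needs_review), "sent to review")
```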

Tools and Platforms Making It Real

You don’t need to build this from scratch. Several platforms have built pipelines around LLM-assisted labeling:

| Platform | Best For | LLM Integration | Human Review Workflow |
|---|---|---|---|
| Kili Technology | Enterprise document labeling | Direct API hooks for GPT, Claude, Llama | Drag-and-drop correction interface |
| Snorkel AI | Programmatic labeling, weak supervision | Uses LLMs as labeling functions | Auto-suggests labels based on rules |
| Databricks | Large-scale data pipelines | Integrated with MLflow and LLM endpoints | Built-in validation dashboards |
| AWS DataBrew | Cloud-native preprocessing | Uses SageMaker LLMs | Export to Labeling Workflows |

These tools don’t replace humans; they make them faster. A single reviewer can now validate 500 labeled items in the time it used to take to label 50 manually.

What Can Go Wrong (And How to Fix It)

LLMs aren’t perfect. They hallucinate. They misread context. They overfit to examples. Here’s how to avoid the biggest traps:

- Overconfidence: LLMs often output high confidence scores even when wrong. Always compare against a small set of human-labeled ground truth. Calculate precision, recall, and F1 score.

- Token limits: If a document is longer than the model’s context window, the input gets truncated or the request is rejected. Break long texts into chunks, paragraph by paragraph, and label them separately.

- Biased prompts: If your examples only show positive sentiment, the model will ignore negative ones. Include balanced examples.

- Formatting chaos: If you ask for JSON but get text, you’ll break your pipeline. Use strict prompt templates like: "Return only valid JSON with keys: label, confidence, extracted_text".

- Ignoring context: "Apple" could mean the fruit or the company. Give the model surrounding text: "Apple released a new iPhone. The stock rose 5%.", not just "Apple".
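The chunking advice in the list above can be sketched as follows. This is a minimal sketch with assumptions: `chunk_paragraphs` is a hypothetical helper, paragraphs are assumed to be separated by blank lines, and word count is used as a rough proxy for tokens (a real pipeline would count tokens with the model's own tokenizer).

```python
from typing import List


def chunk_paragraphs(text: str, max_words: int = 500) -> List[str]:
    """Group consecutive paragraphs into chunks of at most max_words words.

    A single paragraph longer than max_words is kept whole as its own chunk.
    """
    chunks, current, count = [], [], 0
    for paragraph in text.split("\n\n"):
        paragraph = paragraph.strip()
        if not paragraph:
            continue
        words = len(paragraph.split())
        # Start a new chunk when adding this paragraph would exceed the budget.
        if current and count + words > max_words:
            chunks.append("\n\n".join(current))
            current, count = [], 0
        current.append(paragraph)
        count += words
    if current:
        chunks.append("\n\n".join(current))
    return chunks


document = "one two three\n\nfour five\n\nsix seven eight nine"
for i, chunk in enumerate(chunk_paragraphs(document, max_words=5)):
    print(i, repr(chunk))
```

Keeping whole paragraphs together preserves the surrounding context that the "Apple" example depends on, at the cost of slightly uneven chunk sizes.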

One company using LLMs to extract drug names from medical records kept getting "aspirin" flagged as a drug, even when it was part of "aspirin-induced headache." The fix? Added a rule: "Only extract drug names if they’re followed by a symptom or dosage."

What Comes Next: RLHF and Distillation

The next evolution is smarter than just prompting. Two advanced techniques are gaining traction:

- RLHF-based labeling: Take 100 documents, have humans label them. Use that data to fine-tune a smaller LLM. Then use the fine-tuned model to label 10,000 more. Repeat. This creates a feedback loop where the model improves with each round. Despite the name, this loop is closer to iterative human-in-the-loop fine-tuning than to reinforcement learning from human feedback in the strict sense.

- LLM distillation: Train a small, fast model (like a 700M-parameter model) to mimic the labeling behavior of a giant LLM (like GPT-4o). The result? Near-identical accuracy at 1/10th the cost and 50x faster inference.

These aren’t theoretical. A fintech startup in Boulder used distillation to cut labeling costs by 92% while maintaining 98% accuracy on loan application forms.
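The distillation idea can be illustrated with a toy example: a large "teacher" labels unlabeled text, and a small "student" is trained only on those labels. Everything here is an assumption made for illustration. `teacher_label` is a keyword rule standing in for a call to a large LLM, and the student is a tiny bag-of-words Naive Bayes classifier rather than the small neural model a production setup would more likely use.

```python
import math
from collections import Counter, defaultdict


def teacher_label(text: str) -> str:
    """Hypothetical stand-in for the large LLM; a real pipeline would call an API."""
    lowered = text.lower()
    if "bill" in lowered or "charge" in lowered:
        return "billing issue"
    return "technical support"


class NaiveBayesStudent:
    """Tiny bag-of-words classifier trained on the teacher's labels."""

    def fit(self, texts, labels):
        self.label_counts = Counter(labels)
        self.word_counts = defaultdict(Counter)
        for text, label in zip(texts, labels):
            self.word_counts[label].update(text.lower().split())
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        return self

    def predict(self, text):
        total = sum(self.label_counts.values())
        best_label, best_score = None, -math.inf
        for label, n in self.label_counts.items():
            counts = self.word_counts[label]
            denom = sum(counts.values()) + len(self.vocab)  # Laplace smoothing
            score = math.log(n / total)
            for word in text.lower().split():
                if word in self.vocab:  # skip words never seen in training
                    score += math.log((counts[word] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


unlabeled = [
    "my bill is wrong",
    "I was charged twice on my bill",
    "unexpected charge on my card",
    "the app crashes on login",
    "I cannot reset my password",
    "login page shows an error",
]
# Step 1: the teacher labels the raw text. Step 2: the student learns from it.
student = NaiveBayesStudent().fit(unlabeled, [teacher_label(t) for t in unlabeled])
print(student.predict("wrong charge on my bill"))
print(student.predict("password reset error"))
```

The student never sees human labels, so it inherits the teacher's mistakes. Checking the student against a human-labeled sample remains necessary.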

Final Thought: The New Human-AI Partnership

This isn’t about replacing humans. It’s about redefining their role. Instead of spending days tagging text, analysts now focus on:

- Designing better prompts

- Spotting systematic errors

- Improving label quality

- Training the next generation of models

The best teams don’t just use LLMs; they teach them. And in doing so, they turn messy, unstructured text into clean, actionable data that drives decisions, saves money, and unlocks insights no one saw coming.

Can LLMs replace human labelers completely?

Not yet, and probably not ever. LLMs are excellent at handling high-volume, repetitive tasks, but they can’t replace human judgment in ambiguous cases. The best approach is a hybrid: use LLMs to pre-label 80-90% of data, then have humans review the rest. This cuts cost and time while maintaining accuracy.

What’s the cheapest way to start using LLMs for labeling?

Start with OpenAI’s GPT-4o or Anthropic’s Claude 3.5 Sonnet via their API. Use a simple prompt with 2-3 examples. Label 100 documents, check the accuracy, and scale up if results are above 90%. Most users spend less than $50 on initial testing.

Do I need to fine-tune the LLM to get good results?

Not always. For many tasks, well-crafted prompts with examples (few-shot learning) work just as well as fine-tuning. Fine-tuning helps when you have 500+ labeled examples and need consistent performance across edge cases. Start with prompts first.

How do I measure if my LLM labeling is accurate?

Compare the LLM’s output to a small set of human-labeled data (50-100 samples). For each label, calculate precision (of the items the LLM gave that label, the share that were correct), recall (of the items that truly have that label, the share the LLM found), and F1 score (the harmonic mean of the two). Aim for F1 above 0.85 for production use.
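As a worked example, the three metrics can be computed for one label with plain Python. The `precision_recall_f1` helper and the sample labels below are made up for illustration. In practice `gold` would be the human-labeled sample and `predicted` the LLM output for the same items.

```python
def precision_recall_f1(gold, predicted, positive):
    """Compute precision, recall, and F1 for one label of interest."""
    pairs = list(zip(gold, predicted))
    tp = sum(1 for g, p in pairs if p == positive and g == positive)
    fp = sum(1 for g, p in pairs if p == positive and g != positive)
    fn = sum(1 for g, p in pairs if p != positive and g == positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Illustrative data: five items labeled by a human and by the LLM.
gold = ["complaint", "inquiry", "complaint", "complaint", "inquiry"]
predicted = ["complaint", "complaint", "complaint", "inquiry", "inquiry"]

p, r, f1 = precision_recall_f1(gold, predicted, positive="complaint")
print(f"precision={p:.2f} recall={r:.2f} f1={f1:.2f}")
```

Here the LLM assigned "complaint" three times and was right twice, and it found two of the three true complaints, so precision, recall, and F1 all come out to 2/3.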

Can LLMs label data in languages other than English?

Yes. Models like Llama 3 and Claude 3.5 can work with many languages, but accuracy varies by language and drops in low-resource ones. For non-English data, include examples in the target language and test with native speakers before scaling.

Fred Edwords

Finally, someone who gets it. I’ve been pushing this exact workflow at my fintech job for months, and everyone kept insisting we "need human-in-the-loop for quality." Yes, but not 100% human. We started with GPT-4o, 3-shot prompts, and JSON output. First batch: 92% accuracy. We didn’t even need to tweak much. Now we’re at 96% after adding one more example. The real win? Our QA team went from 40 hours/week to 4. They’re now doing anomaly detection, not copy-pasting labels. This isn’t automation; it’s augmentation.

Sarah McWhirter

Oh wow, so now AI is reading our private chats and tagging us as "fraud alert" or "angry customer"? How convenient. Tell me, Fred, did you also sign the waiver that lets them train on your bank’s data? Or is this just another Silicon Valley fantasy where "efficiency" means "no accountability"? I’ve seen what happens when companies replace humans with LLMs: first it’s "just for labeling," then it’s "we don’t need customer service reps," then it’s "we don’t need HR." And who gets blamed when the AI mislabels a diabetic’s insulin request as "fraud"? Not the engineer who wrote the prompt. It’s always the customer.

Also, "confidence score"? That’s not a number; it’s a magic trick. I’ve seen GPT-4 give 0.99 confidence to "Apple is a fruit" in a stock market report. It doesn’t understand context. It just guesses based on word patterns. And now we’re trusting it with legal contracts? Please. This isn’t progress. It’s a pyramid scheme dressed as innovation.

Ananya Sharma

Let me break this down because everyone here is being too polite. You’re not "saving time"; you’re offloading labor onto invisible workers who didn’t get paid. The "human review" step? That’s just the new outsourcing. You’re not hiring 3 people to read contracts; you’re hiring 100 gig workers in Bangalore to fix the 5% the LLM messed up. And they’re getting paid $0.02 per label. Meanwhile, you’re billing clients $200/hour for "AI-powered compliance."

Also, your "balanced examples"? How balanced? Did you include examples from non-native English speakers? From people with dyslexia? From elderly customers using voice-to-text? No. You trained it on clean, corporate, perfectly punctuated text from middle-class Americans. So now your system mislabels every non-standard utterance as "low confidence" and pushes it to the bottom of the pile. That’s not accuracy; that’s systemic bias baked into JSON.

And don’t get me started on "fine-tuning": you think you’re training a model? You’re training a mirror of your own cultural blind spots. The LLM doesn’t learn. It mimics. And it mimics what’s easiest to replicate: the dominant narrative. So if your training data came from U.S. banks, it will never understand that in India, "I need help with my bill" means "I’m being scammed," not "I’m confused."

Stop calling this innovation. It’s automation with a PR team.

kelvin kind

Been using this for 6 months. Works great. Just don’t overcomplicate the prompt.

Ian Cassidy

One thing no one’s talking about: token economics. If you’re using GPT-4o at $5 per 1M tokens, and each document averages 2k tokens, that’s $0.01 per document. 100K docs = $1,000. Human labeling at $5/hr for 10 docs/hour = $0.50 per doc → $50,000 for 100K. The math doesn’t lie. But here’s the real hack: use distilled models. Train a 700M model on GPT-4o’s outputs. Now you’re at $0.0003 per doc. That’s not scalable; that’s nuclear-grade efficiency. And yes, accuracy stays above 95% if you do proper calibration. The bottleneck isn’t the LLM. It’s your IT team’s fear of change.

Zach Beggs

I like how this post doesn’t just say "use AI" but actually shows how. Most articles just throw buzzwords. This is actionable.

Kenny Stockman

Biggest mistake I see? People think LLMs are magic boxes. They’re not. They’re really, really good at pattern matching, and terrible at context unless you hold their hand. I’ve seen teams dump 10,000 unstructured emails into a prompt and expect perfection. Nope. You need to chunk it, sample it, validate it. But once you do? It’s like giving your team superpowers. I used to spend Sundays tagging support tickets. Now I spend Sundays hiking. My team’s happier. Our accuracy’s higher. And yeah, we still have humans reviewing edge cases. But now they’re doing higher-value work, like spotting when the LLM misses a cultural nuance. That’s not replacement. That’s evolution.

Antonio Hunter

There’s a deeper layer here that’s rarely discussed: the psychological shift in labor. When you move from manual labeling to prompt engineering, you’re not just changing tools; you’re changing identity. The human annotator becomes a prompt architect, a quality auditor, a model whisperer. That’s not a downgrade; it’s a promotion. But most organizations don’t retrain their teams. They just replace them. And that’s where trust breaks down.

What we’ve found in our org is that when we upskilled our labeling team into prompt designers, their retention jumped from 45% to 92%. They didn’t feel replaced; they felt elevated. The key? Involve them early. Let them write the examples. Let them debug the failures. Let them name the categories. When they own the system, they become its best defenders. And honestly? The best prompts I’ve seen came from a former data entry clerk who’d been tagging complaints for 12 years. She knew what "I need help" really meant before the AI ever saw it.

Paritosh Bhagat

Oh wow, look who’s here: another Silicon Valley cheerleader pretending this is ethical. Let me guess: you’re using OpenAI because it’s "easier"? But did you ever ask if your customers consented to having their private messages fed into a black box? Did you check if your model learned to associate "Indian name" with "fraud alert"? Because guess what? It did. I tested it. I fed it 50 real customer messages from our regional branch, half with Indian names, half with Anglo names. The LLM flagged 78% of Indian names as "high risk," even when the context was identical. And your "human review"? That’s just a filter for the bias you didn’t catch. You’re not saving money; you’re automating discrimination.

And don’t even get me started on "confidence scores." That’s not a metric; it’s a placebo. I’ve seen models give 0.97 confidence to "loan approved" for someone who literally said "I can’t pay anything." The model didn’t understand negation. It just saw "loan" and "approved" in the same sentence. And now we’re letting this decide people’s lives?

Stop calling this innovation. This is corporate negligence with a fancy dashboard. And if you’re proud of this? You’re part of the problem.