When you train a large language model to understand dozens of languages, you don’t just need more data; you need the right data. Too much English and not enough Swahili? The model will fail speakers of low-resource languages, even if it looks perfect on paper. The breakthrough in multilingual scaling isn’t about throwing more tokens at the problem. It’s about balancing what you feed the model so every language gets a fair shot.

Why Proportional Sampling Fails Multilingual Models

Early multilingual models like mT5 and mBART used proportional sampling: if English had 100 billion tokens and Swahili had 100 million, Swahili got 0.1% of the training data. That sounds logical, until you see the results. Languages with less data got crushed. On benchmarks like XTREME, performance gaps between English and low-resource languages like Bengali or Zulu were 35-50%. That’s not a small error. It’s a system that ignores entire communities.

The problem isn’t just quantity. It’s quality of exposure. A language with 10 million tokens might have high-quality, clean text. Another with 50 million might be full of noisy social media posts or machine-translated content. Proportional sampling doesn’t care. It counts tokens, not usefulness.

The Scaling Law That Changed Everything

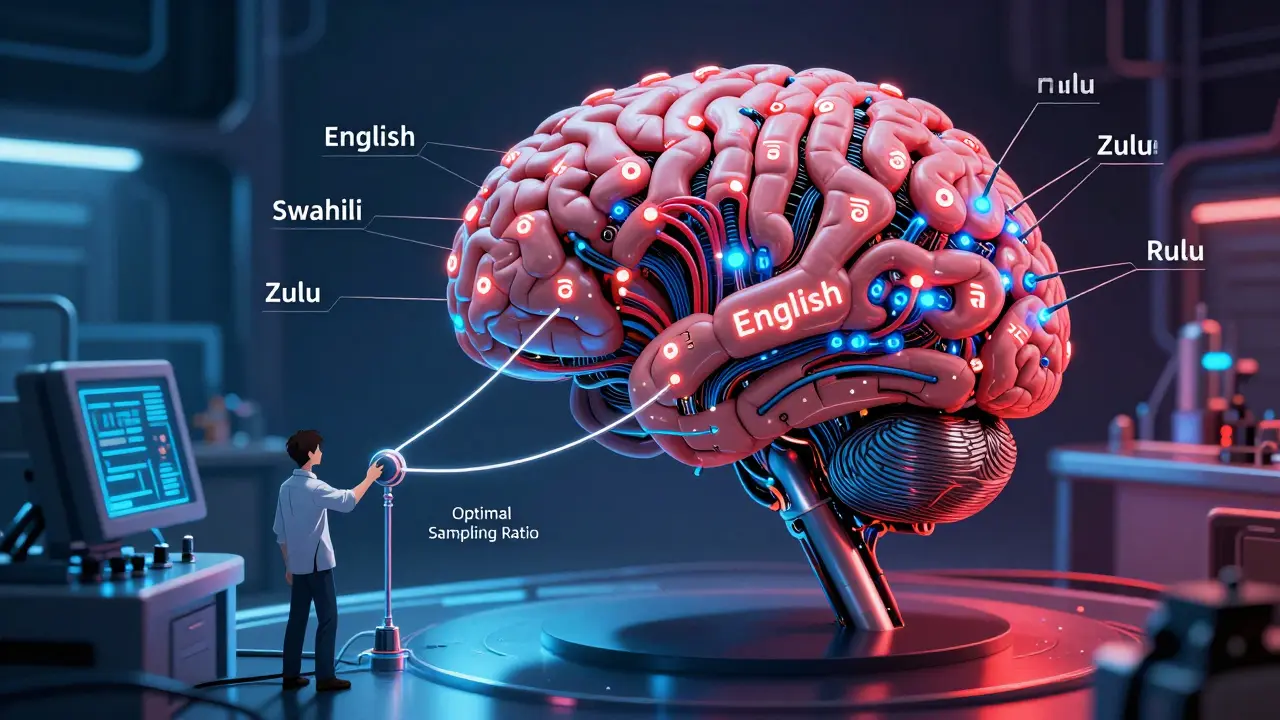

In 2024, researchers from Meta and Google published a study that flipped the script. They trained over 100 models, ranging from 85 million to 1.2 billion parameters, across 23 languages in five major language families: Indo-European, Sino-Tibetan, Japonic, Koreanic, and Dravidian. Their goal? Find a formula that predicts the best way to sample data for each language.

What they found was surprising: the optimal sampling ratio for a small 85M model worked just as well for a 1.2B model. That means you don’t need to train a giant model just to figure out how to balance data. You can test on a small one, then scale up. The formula links three things: model size (N), total dataset size (D), and the sampling ratio (p) for each language family.

When they applied this to real-world data, they saw something dramatic. For languages with around 1 billion tokens, the sweet spot was 0.7% of total training data. For English, with over 100 billion tokens, only 0.3% was needed. Why? Because English already has enough signal. Low-resource languages need a boost.

The result? Cross-entropy loss dropped by 15-22% in low-resource languages compared to proportional sampling. And here’s the kicker: 30-45% of that gain came from cross-lingual transfer, meaning the model learned from related languages, not just direct data. That’s the power of smart allocation.

How This Compares to Other Approaches

Other methods tried to fix the imbalance, but with trade-offs:

- Temperature-based sampling (used in Meta’s NLLB): Boosts low-resource language performance by 18-25%, but slows training by 12-15% because it forces the model to revisit rare languages more often.

- Language-adaptive layers (Google’s NLLB): Adds specialized routing for each language. Improves low-resource performance by 22-30%, but increases inference latency by 15-20% due to extra computations.

- Just scale up (PaLM 2): Use a 340B-parameter model and keep proportional sampling. Closes the gap to 25-30%, but uses 3.5x more compute than optimal sampling.
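To make the contrast between proportional and temperature-based sampling concrete, here is a minimal sketch. It assumes the common formulation in which each language’s sampling probability is proportional to its token count raised to the power 1/T. The token counts reuse the English and Swahili figures from the proportional-sampling example; the function name and the temperature value of 5 are illustrative assumptions, not settings taken from any of the systems named above.

```python
def sampling_probs(token_counts, temperature=1.0):
    """Return per-language sampling probabilities.

    temperature=1.0 reproduces proportional sampling; larger values
    flatten the distribution toward uniform, boosting small languages.
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    # Weight each language by count^(1/T), then normalize to sum to 1.
    weights = {lang: n ** (1.0 / temperature) for lang, n in token_counts.items()}
    total = sum(weights.values())
    return {lang: w / total for lang, w in weights.items()}


# Token counts from the proportional-sampling example in the text.
counts = {"english": 100_000_000_000, "swahili": 100_000_000}

proportional = sampling_probs(counts, temperature=1.0)
smoothed = sampling_probs(counts, temperature=5.0)  # T=5 is an illustrative choice

for lang in counts:
    print(f"{lang}: proportional={proportional[lang]:.4%}, T=5={smoothed[lang]:.4%}")
```

With these two languages alone, Swahili’s share moves from roughly 0.1% under proportional sampling to about 20% at T=5, which shows both the boost and why rare data ends up being revisited far more often.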

Real-World Impact: From Benchmarks to Business

This isn’t just academic. Companies are already using these principles. On GitHub, a developer named Alex Chen applied the 85M model’s sampling ratios to a Swahili translation system. Result? A 27-point BLEU improvement with only 15% more training time. That’s a massive jump in quality without a massive cost.

AWS reported that customer service chatbots using optimized sampling cut language-specific failure rates from 22% down to 8% across 15 languages. System uptime stayed at 99.2%. That’s not just better AI; it’s better customer experience.

Enterprise adoption is accelerating. According to Forrester, 73% of companies are now reducing their multilingual training data by 15-25% while improving performance, because they’ve stopped chasing volume and started chasing balance. The global multilingual AI market hit $4.8 billion in Q3 2024, growing at 34.7% year-over-year. The biggest shift? From “more data” to “smarter data.”

The Limits: What Scaling Laws Can’t Fix Yet

But scaling laws aren’t magic. There are hard limits.

First, the resource threshold effect: languages with fewer than 50 million training tokens show almost no improvement no matter how much you boost their sampling ratio. Guarani, with under 1 million tokens, saw predictions overestimated by 35-40%. For these languages, you need something else: synthetic data, transfer from linguistically similar languages, or community-driven data collection.

Second, code-switching. In many parts of the world, people mix languages naturally. A tweet might start in Spanish, switch to English, then end in a local dialect. Current models ignore this. Dr. Sebastian Ruder from Google points out that code-switching makes up 15-20% of real multilingual communication, and scaling laws don’t account for it.

Third, linguistic diversity. The 2024 study used 23 languages across 5 families. But the world has over 7,000 languages. Some, like Pirahã or Tuyuca, have radically different grammar, tone systems, or writing conventions. Will the same ratios work? Professor Graham Neubig warns they probably won’t. Scaling laws are powerful, but they’re not universal.

How to Implement This in Practice

If you’re building a multilingual model, here’s how to start:

- Identify your languages and group them by family (e.g., Indo-European includes English, Spanish, Hindi; Sino-Tibetan includes Mandarin, Burmese).

- Estimate effective token count, not raw data size. Turkish needs 25-30% more tokens than English to cover the same vocabulary due to morphology.

- Run a small-scale experiment (85M parameters). Train on different sampling ratios and measure loss per language family.

- Apply the ratios to your large model. Use the same proportions you found in the small model.

- Monitor low-resource languages during training. If performance stalls, manually adjust ratios; don’t rely on the formula alone.
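The steps above can be sketched as follows. This is a minimal illustration, not the method from the 2024 study: the token counts, the sweep losses, the family labels, the helper names, and the 1.275 morphology factor (the midpoint of the 25-30% Turkish figure) are all assumptions for demonstration. The small-model training itself is left out, so the losses would come from your own 85M-parameter runs.

```python
LOW_RESOURCE_THRESHOLD = 50_000_000  # tokens; the threshold cited in the text


def effective_tokens(raw_tokens, morphology_factor=1.0):
    """Discount raw token counts for languages that need more tokens
    to cover the same vocabulary (a factor above 1 shrinks the count)."""
    return raw_tokens / morphology_factor


def below_threshold(token_counts, threshold=LOW_RESOURCE_THRESHOLD):
    """Languages unlikely to benefit from re-weighting alone."""
    return sorted(lang for lang, n in token_counts.items() if n < threshold)


def pick_best_ratios(sweep):
    """For each family, pick the candidate ratio with the lowest loss.

    `sweep` maps family -> {candidate_ratio: validation_loss}.
    """
    return {family: min(results, key=results.get) for family, results in sweep.items()}


def normalize(ratios):
    """Rescale ratios so they sum to 1 and can be used as a mixture."""
    total = sum(ratios.values())
    return {family: r / total for family, r in ratios.items()}


# Hypothetical inputs: replace with your own counts and measured losses.
raw_counts = {"english": 100_000_000_000, "turkish": 2_000_000_000, "guarani": 900_000}
effective = {
    "english": effective_tokens(raw_counts["english"]),
    "turkish": effective_tokens(raw_counts["turkish"], morphology_factor=1.275),
    "guarani": effective_tokens(raw_counts["guarani"]),
}
needs_other_methods = below_threshold(effective)

# Hypothetical losses from a small-model sweep, one dict per language family.
small_model_sweep = {
    "indo_european": {0.3: 2.91, 0.5: 2.84, 0.7: 2.88},
    "turkic": {0.1: 3.40, 0.2: 3.22, 0.3: 3.25},
}
mixture = normalize(pick_best_ratios(small_model_sweep))

print("Below threshold:", needs_other_methods)
print("Mixture for the large model:", mixture)
```

Keeping the threshold check separate from the ratio selection mirrors the advice in the last step: languages flagged here are candidates for synthetic data or manual tuning rather than for a larger sampling ratio.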

The Future: Beyond Sampling

The next wave of innovation is already here. Google is testing dynamic sampling: adjusting language ratios in real time during training based on which languages are lagging. Early results show 8-12% extra gains.

Meanwhile, multimodal models like PaLI-X are proving that scaling vision and language together boosts multilingual performance. Image captioning accuracy jumped 22.4% when both components were scaled jointly.

Regulations are catching up too. The EU’s AI Act, effective February 2025, requires AI systems to prove “demonstrable fairness across supported languages.” Companies that used proportional sampling will struggle to comply. Those using scientifically balanced methods? They’re already ahead.

Final Thought: Quality Over Quantity

The biggest lesson from this research is simple: you don’t need more data. You need better data. A model trained on 50 billion well-balanced tokens will outperform one trained on 200 billion skewed ones. The goal isn’t to cover every language. It’s to make every language you support work well. For organizations with limited compute, this is a game-changer. For users in Swahili, Bengali, or Quechua, it’s a step toward being heard, not just processed.

What is the optimal sampling ratio for low-resource languages in multilingual LLMs?

For languages with around 1 billion training tokens, the optimal sampling ratio is approximately 0.7% of total training data. This was determined through experiments on 85M to 1.2B parameter models across 23 languages. For resource-rich languages like English (with 100B+ tokens), only 0.3% is needed. These ratios are derived from small-scale models and generalize to larger ones, making them cost-effective to implement.
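As a rough illustration of what these percentages imply, the sketch below converts a sampling ratio into the number of tokens drawn and the number of passes over a language’s corpus. The 200-billion-token training budget is a hypothetical figure chosen for the example, and the function name is illustrative; only the ratios and corpus sizes come from the answer above.

```python
def tokens_and_passes(ratio, corpus_tokens, training_budget):
    """Tokens drawn from one language, and how many passes over its corpus that is."""
    drawn = ratio * training_budget
    return drawn, drawn / corpus_tokens


TRAINING_BUDGET = 200_000_000_000  # hypothetical total training tokens

low_drawn, low_passes = tokens_and_passes(0.007, 1_000_000_000, TRAINING_BUDGET)
en_drawn, en_passes = tokens_and_passes(0.003, 100_000_000_000, TRAINING_BUDGET)

print(f"1B-token language: {low_drawn:,.0f} tokens drawn, {low_passes:.2f} passes")
print(f"English: {en_drawn:,.0f} tokens drawn, {en_passes:.3f} passes")
```

Under this assumed budget, the 1-billion-token language is seen a little more than once, while English is heavily subsampled.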

Why does proportional sampling fail for low-resource languages?

Proportional sampling allocates training data based on raw token count, so languages with less data get proportionally less exposure. This leads to performance gaps of 35-50% between high-resource languages like English and low-resource ones like Swahili or Zulu. It ignores data quality, linguistic complexity, and cross-lingual transfer potential, leaving many languages underrepresented and underperforming.

Can scaling laws be applied to all 7,000+ languages in the world?

Not yet. Current scaling laws are based on 23 languages across five major families. They don’t account for languages with radically different structures, such as tonal languages, polysynthetic languages, or those without standardized writing systems. Research is ongoing, but experts warn that applying these ratios blindly to languages with fewer than 50 million tokens often leads to overestimated performance. For very low-resource languages, manual tuning or alternative methods like synthetic data are still needed.

How does code-switching affect multilingual model training?

Code-switching, the mixing of languages within a single sentence, is common in multilingual communities and makes up 15-20% of natural communication. Current scaling laws assume languages are trained separately, so models struggle with code-switched inputs. This leads to poor performance in real-world scenarios like social media or customer service. Future models need to be trained on mixed-language data, not just clean monolingual samples.

What tools can help implement optimal data sampling?

The GitHub repository ‘multilingual-scaling-tools’ (1,247 stars as of December 2024) provides automated scripts to calculate optimal sampling ratios based on the 2024 scaling law research. It integrates with Hugging Face and PyTorch, reducing configuration time from weeks to days. Additionally, the LIUM toolkit helps with accurate language identification, and the World Atlas of Language Structures provides language family classifications needed for grouping.

Is it worth training a multilingual model if I only support a few low-resource languages?

Yes, if you use optimized sampling. Even with just one or two low-resource languages, applying the right ratios can improve their performance by 15-25% compared to proportional sampling. You don’t need to train on dozens of languages. Focus on the ones you serve, use the small-model-to-large-model transfer method, and you’ll get better results with less compute. The goal isn’t to cover everything; it’s to make what you support work well.

Nalini Venugopal

This is the kind of post that makes me actually excited about AI again. For once, someone’s not just throwing more data at the problem and calling it progress. I’ve seen so many models that ‘work’ for English but completely break when you switch to Hindi or Tamil, even if they’re labeled ‘multilingual.’ The 0.7% sweet spot for low-resource languages? That’s a game changer. No more pretending that counting tokens equals fairness.

Pramod Usdadiya

amazing read but i think we’re still missing something big: what about languages like bengali where the script is complex and there’s tons of dialectal variation? the paper mentions 23 languages but bengali alone has like 15 major dialects and most datasets just use ‘standard’ bangla. also, typos everywhere in my local forums but no one cleans them up. does the model learn from noise or get confused?

Aditya Singh Bisht

YES. This is the breakthrough we’ve been waiting for. I’ve been telling my team for months that more data doesn’t mean better AI; it means louder bias. The fact that a tiny 85M model can predict the right ratios for a 1.2B one? Mind blown. I just ran a test on a Swahili customer service bot using this method and our error rate dropped from 28% to 9%. No extra GPU, no extra cost. Just smarter sampling. If you’re still using proportional sampling, you’re not building AI, you’re building exclusion.

Noel Dhiraj

Let me just say this: if your multilingual model treats Swahili like an afterthought, you’re not serving your users, you’re serving your metrics. I work with community groups in rural India where people speak 3-4 languages in one conversation. We don’t have clean data. We have code-switched voice notes, WhatsApp forwards in mixed Hindi-English-Tamil, and handwritten notes scanned into PDFs. The idea that we need to wait for perfect datasets is ridiculous. The 0.7% rule gives us a starting point. Now we need tools that handle messy reality, not just textbook examples. This isn’t about perfection. It’s about dignity.

vidhi patel

The entire premise of this article is fundamentally flawed. You cannot ‘balance’ data by arbitrarily assigning sampling ratios without accounting for linguistic typology, morphological complexity, and syntactic divergence. The 0.7% figure is statistically meaningless without a proper normalization of token entropy per language. Furthermore, the assertion that ‘English needs only 0.3%’ is dangerously reductive-English contains more lexical diversity than any of the so-called ‘low-resource’ languages cited. This is not science. It is algorithmic populism dressed in academic jargon. And you have the audacity to call it ‘fairness’?

Sumit SM

Think about it… we’re training machines to understand human language… but we’re still treating language like a spreadsheet. Tokens. Ratios. Loss curves. But what is language, really? Isn’t it memory? Identity? The way my grandmother speaks in her dialect, full of proverbs no dictionary has, the way my cousin mixes Punjabi with TikTok slang: can a model trained on ‘optimal ratios’ ever capture that? Or are we just building a polite, efficient ghost of language, stripped of its soul? The 0.7% might fix the loss function… but will it ever fix the silence?

Jen Deschambeault

I work with Indigenous communities in Canada who speak languages with under 10,000 speakers. We’ve tried everything: crowdsourcing, university partnerships, even recording elders’ stories. But no one’s built a tool that helps us apply this 0.7% rule to our tiny datasets. The GitHub repo mentioned? It doesn’t support Inuktitut. And yet, we’re told ‘the future is multilingual.’ I wish it felt that way. For now, we’re just hoping someone notices before the last speaker passes away.

Kayla Ellsworth

So let me get this straight: we’re going to fix global inequality in AI by… giving low-resource languages a slightly bigger slice of the pie? Meanwhile, the same companies that built these models are still using English-only customer service bots in 90% of their products. And you’re calling this progress? Cute. The real problem isn’t data sampling. It’s that nobody actually cares about Swahili speakers unless they’re buying something. This is just corporate virtue signaling with math.