By January 2026, if you’re writing code and not checking where it came from, you’re already behind. AI tools like GitHub Copilot and OpenAI’s Codex are generating millions of lines of code every day. But here’s the problem: AI-generated code isn’t just buggy; it’s unpredictable. It can slip in memory leaks, security holes, or even backdoors you didn’t ask for. And because AI doesn’t explain its reasoning, you can’t trust it just because it compiles. That’s where trustworthy AI for code comes in: not as a luxury, but as a necessity.

Why You Can’t Just Trust AI to Write Code

Think about the last time you used an AI tool to generate a function. It worked. It ran. You merged it. Then, six weeks later, a production crash. The root cause? A subtle buffer overflow hidden in the AI’s logic. You didn’t see it because AI doesn’t show its work. It doesn’t say, “I chose this loop because the input size is under 100.” It just spits out code.

OpenAI’s own data shows its AI reviewer checking over 100,000 pull requests per day. But here’s the kicker: only 36% of those PRs are flagged by the review system, and of those, 46% lead to actual code changes. That means nearly two-thirds of PRs (64%) pass without a single automated flag, so catching any risk in them depends entirely on human reviewers. In healthcare systems, autonomous vehicles, or financial apps, that’s not acceptable.

The core issue? AI models are probabilistic. They guess. They don’t prove. And when you’re building systems that control real-world outcomes, guessing isn’t a feature; it’s a liability.

Verification: Making AI Code Mathematically Reliable

Verification is the process of proving, with mathematical certainty, that code behaves as intended. It’s not about testing; it’s about deduction. You start with a set of rules: “This function must never access memory outside its bounds,” or “This API call must return within 500ms.” Then you use tools to check whether the code follows those rules for every possible input, not just the ones you thought to test.

TrustInSoft’s Analyzer is one of the few tools doing this at scale. It doesn’t run the code. It doesn’t simulate it. It uses formal methods to mathematically prove memory safety. In one case study, a medical device manufacturer reduced critical bugs by 82% after switching to TrustInSoft. That’s not luck. That’s proof.

There are different flavors of verification:

- Model Checking: Explores every possible state the code can reach. Like checking every route on a map before you drive.

- Proof Assistants: Tools that help engineers build ironclad logical proofs. Think of them as co-pilots for formal logic.

- Abstract Interpretation: Analyzes code behavior without running it, catching issues like null pointer dereferences before deployment.
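Model checking is the easiest of the three to show in miniature. The sketch below is a toy explicit-state checker written for illustration; it is not how TrustInSoft or any production tool works. It models a hypothetical lock in which “see that the lock is free” and “take the lock” are separate steps. It then explores every reachable interleaving of two processes breadth-first and returns the shortest trace that breaks mutual exclusion.

```python
from collections import deque
from typing import Callable, Dict, Iterable, List, Optional, Tuple

# A state is (program counter of process 0, program counter of process 1, lock flag).
State = Tuple[str, str, int]


def successors(state: State) -> Iterable[State]:
    """Every state reachable in one step, trying each process in turn (interleaving)."""
    pcs, flag = [state[0], state[1]], state[2]
    for i in (0, 1):
        pc = pcs[i]
        if pc == "idle" and flag == 0:
            nxt, new_flag = "ready", flag      # sees the lock free...
        elif pc == "ready":
            nxt, new_flag = "critical", 1      # ...and only later takes it (not atomic)
        elif pc == "critical":
            nxt, new_flag = "idle", 0          # leaves and releases the lock
        else:
            continue                           # idle while the lock is held: wait
        new_pcs = pcs.copy()
        new_pcs[i] = nxt
        yield (new_pcs[0], new_pcs[1], new_flag)


def check_invariant(
    initial: State,
    step: Callable[[State], Iterable[State]],
    invariant: Callable[[State], bool],
) -> Optional[List[State]]:
    """Breadth-first search over all reachable states.

    Returns the shortest trace that violates the invariant, or None if the
    invariant holds in every reachable state.
    """
    parent: Dict[State, Optional[State]] = {initial: None}
    queue = deque([initial])
    while queue:
        state = queue.popleft()
        if not invariant(state):
            trace = []
            cur: Optional[State] = state
            while cur is not None:             # walk back to the initial state
                trace.append(cur)
                cur = parent[cur]
            return trace[::-1]
        for nxt in step(state):
            if nxt not in parent:              # visit each state only once
                parent[nxt] = state
                queue.append(nxt)
    return None


def mutual_exclusion(state: State) -> bool:
    """Safety property: the two processes are never in the critical section together."""
    return not (state[0] == "critical" and state[1] == "critical")


trace = check_invariant(("idle", "idle", 0), successors, mutual_exclusion)
for s in trace or []:
    print(s)
```

Run as-is, it prints a five-state trace ending in `('critical', 'critical', 1)`: both processes saw the lock free before either one took it. A test run only sees the interleavings the scheduler happens to produce, while the search sees all of them. Production model checkers add symbolic state representations and state-space reduction to handle systems far larger than this one. A reported violation still comes with a concrete trace, though, and “no trace” means the property holds in every reachable state of the model.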

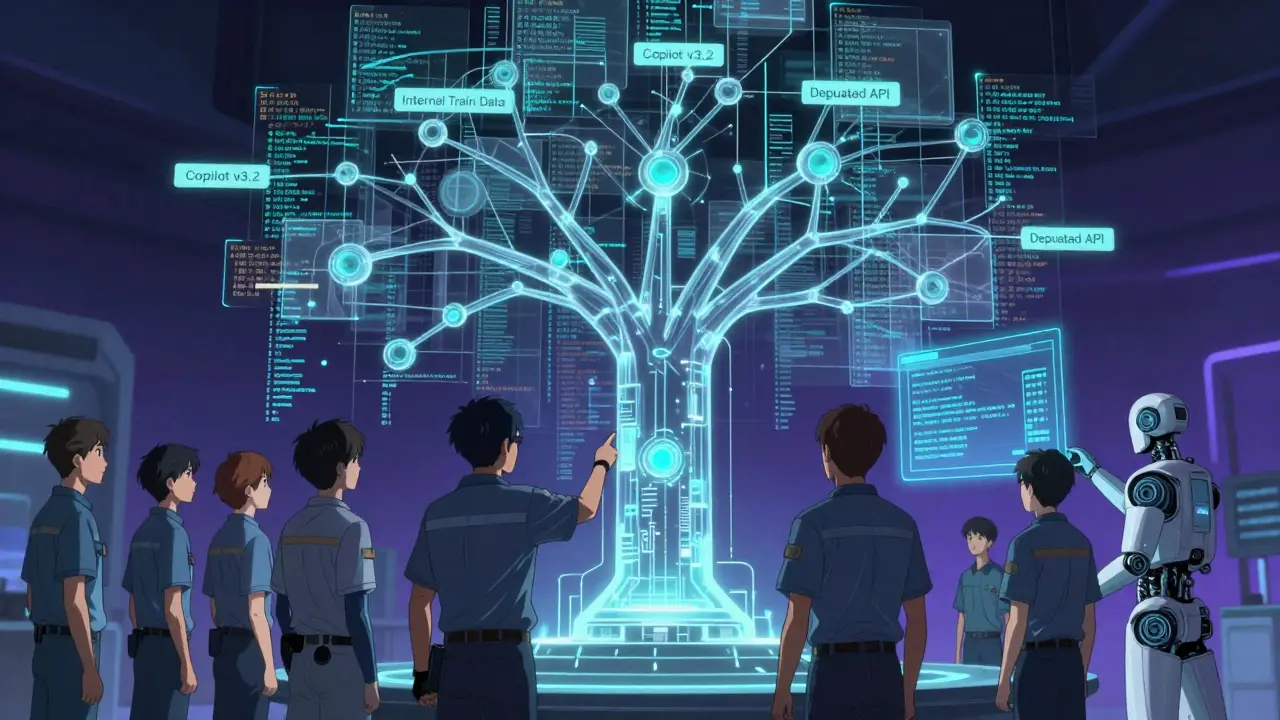

Provenance: Knowing Where Code Really Came From

You’ve probably seen comments like this: “I found this on Stack Overflow.” But what if the code came from an AI that was trained on a competitor’s proprietary code? Or worse, what if it was trained on malicious code? Provenance answers one question: Where did this code come from? Not just “GitHub,” but which model, which version, which training data, and under what conditions?

GitHub’s six-step review process, updated in January 2026, now includes “context verification” as step two. That means checking: Did this code come from a model trained on internal repos? Is it copying patterns from a closed-source library? Is it using a deprecated API that was patched last month?

OpenAI’s reviewer doesn’t just look at the code; it looks at the repository. It needs access to the full codebase, dependencies, and even CI/CD logs. Why? Because context changes everything. A function that’s safe in isolation might be dangerous when called from a multi-threaded service. Without provenance, you’re flying blind.

Companies using provenance tracking report 40% fewer production bugs tied to AI-generated code, but only if they enforce it. A GitHub survey from January 2026 found that teams skipping provenance checks had 3x more security incidents.
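As an illustration, here is one way a team might capture provenance at generation time. This is a minimal sketch under stated assumptions: the `ProvenanceRecord` fields, the `example-assistant` model name, and the context string are all hypothetical, and no standard or vendor schema is implied. The SHA-256 hash ties the record to the exact code that was generated. It shows whether the code has changed since it was recorded. It does not prove that the metadata is true, which would require the generating system to sign the record.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class ProvenanceRecord:
    # Field names are illustrative (hypothetical); not a standard or vendor schema.
    file_path: str
    model: str           # which assistant produced the code
    model_version: str   # which version of it
    generated_at: str    # ISO 8601 timestamp
    context: str         # what it was given: repo, branch, prompt ID, etc.
    content_sha256: str  # fingerprint of the exact code as generated


def record_for(file_path: str, code: str, model: str, model_version: str,
               generated_at: str, context: str) -> ProvenanceRecord:
    """Capture the metadata plus a SHA-256 hash of the generated code."""
    digest = hashlib.sha256(code.encode("utf-8")).hexdigest()
    return ProvenanceRecord(file_path, model, model_version, generated_at, context, digest)


def still_matches(record: ProvenanceRecord, current_code: str) -> bool:
    """False as soon as the code differs from what was recorded."""
    digest = hashlib.sha256(current_code.encode("utf-8")).hexdigest()
    return digest == record.content_sha256


generated = "def total(xs):\n    return sum(xs)\n"
rec = record_for("billing/total.py", generated, "example-assistant", "1.0",
                 "2026-01-10T09:00:00Z", "repo=internal/billing")
print(json.dumps(asdict(rec), indent=2))   # e.g. store with the commit or in an audit log
print(still_matches(rec, generated))                          # True
print(still_matches(rec, generated.replace("sum", "max")))    # False
```

During an incident, a record like this answers “which model and version wrote this line?”. The hash check also shows whether a human has edited the code since it was generated.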

Watermarking: The Invisible Signature in AI Code

Watermarking sounds like something for photos or music. But now, it’s being used in code. The idea is simple: embed a hidden signature in AI-generated code that says, “This was made by AI.” Not for users to see. For tools to detect. It’s like a digital fingerprint in the structure of variable names, comment patterns, or even the order of function calls.

Why bother? Because if you can’t tell AI code from human code, you can’t enforce policies. Regulations like the EU AI Act now require organizations to identify AI-generated code in critical systems, and watermarking makes that possible. But it’s not foolproof. A determined attacker can strip out watermarks. And some developers hate them; they say watermarks make code look “dirty.” But here’s the reality: if you’re in finance, healthcare, or defense, you don’t get to choose. You have to detect AI code, and watermarking is the most practical way to do it right now.

Provably.ai is taking this further. They’re not just watermarking; they’re cryptographically signing code. Their system uses zero-knowledge proofs to let an AI agent prove, without revealing the code, that it generated a specific SQL query correctly. Verification takes under two seconds, with a reported accuracy of 99.98% for database queries.

Real-World Trade-Offs: Speed vs. Safety

No system is perfect, and every approach has a cost. OpenAI’s reviewer is fast, easy to use, and integrates into GitHub. It catches 80% of high-severity bugs but misses the other 20%. It’s designed for usability: engineers love it because it doesn’t slow them down. But it doesn’t give you mathematical guarantees.

TrustInSoft gives you guarantees, but it slows you down. Setting it up in a CI pipeline can take weeks. It’s not for startups; it’s for banks, hospitals, and aerospace firms.

And then there’s the false-positive problem. Reddit users report spending 15-20 minutes per review chasing down AI-flagged “issues” that turned out to be harmless. That’s a productivity drain, and if tools are too noisy, developers turn them off.

The smartest teams are combining approaches:

- Use OpenAI’s reviewer for day-to-day PRs: fast, high-signal feedback.

- Run TrustInSoft on critical modules such as memory-sensitive code, encryption logic, and API gateways.

- Apply watermarking to all AI-generated code for compliance.

- Use provenance tracking to audit where code came from during security incidents.
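A combined setup like this often boils down to a routing rule in CI that decides which checks each changed file should go through. The sketch below is hypothetical throughout. The path patterns are examples, and check names such as `formal_verification` are plain labels, not real commands or tool flags. It only shows the shape of the policy: cheap review everywhere, expensive proofs only where they matter.

```python
from fnmatch import fnmatch
from typing import List

# Hypothetical path patterns a team might mark as critical. Note that fnmatch's
# "*" also matches "/", so each pattern covers nested directories too.
CRITICAL_PATTERNS = ["src/auth/*", "src/crypto/*", "src/gateway/*"]


def checks_for(path: str, ai_generated: bool) -> List[str]:
    """Decide which (illustratively named) checks a changed file should go through."""
    checks = ["ai_review"]                        # fast reviewer on every PR
    if any(fnmatch(path, pattern) for pattern in CRITICAL_PATTERNS):
        checks.append("formal_verification")      # slow, so reserved for critical code
    if ai_generated:
        checks.append("watermark_check")          # compliance tagging
        checks.append("provenance_record")        # audit trail for incident response
    return checks


print(checks_for("src/auth/session.c", ai_generated=True))
# ['ai_review', 'formal_verification', 'watermark_check', 'provenance_record']
print(checks_for("tools/report.py", ai_generated=False))
# ['ai_review']
```

Keeping the critical list explicit and short is the design choice that addresses the speed problem. Formal verification cost only lands on the handful of directories where a mathematical guarantee is worth weeks of setup.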

What’s Next: The Verifiable Future

By 2027, the speed gap between AI code generation and verification is expected to shrink from 5-10x to 2-3x. That means verification will keep closer pace with generation instead of trailing far behind it. TrustInSoft is building AI-specific formal verification templates, due in Q2 2026, which are expected to cut setup time by 40%. Provably.ai is extending zero-knowledge proofs to JavaScript and Python, two of the most common languages in AI-generated code. And GitHub is rolling out automated provenance tagging for all Copilot-generated snippets.

The market is exploding. $187 million in AI code verification tools sold in Q4 2025. 41% of Fortune 500 companies now have a verification policy. And the EU AI Act is forcing compliance across Europe.

But the biggest shift isn’t technical. It’s cultural. Developers are no longer asking, “Can AI write this?” They’re asking, “Can I trust it?” The answer now isn’t yes or no. It’s: “Here’s how we verify it.”

How to Start Today

You don’t need to overhaul your entire stack. Start small.

- Enable GitHub’s built-in AI code review. It’s free. Turn it on for all PRs.

- Use a watermarking or AI-code detection tool to tag AI-generated files.

- For critical components (auth, payment, data encryption), run one formal verification tool. Try TrustInSoft’s free trial; it takes about 2 hours to install.

- Document provenance. Add a comment to every AI-generated file: “Generated by Copilot v3.2 on 2026-01-10, trained on internal codebase.”

- Train your team on the six-step review process. Spend 30 minutes a week reviewing one AI-generated PR together.
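The provenance-comment habit is easy to enforce automatically. Below is a minimal sketch of a checker for the header format suggested above (“Generated by Copilot v3.2 on 2026-01-10, …”). The regex, the five-line search window, and the assumption that the caller already knows which files are AI-generated are all illustrative choices, not part of any GitHub feature.

```python
import re
from datetime import date
from typing import List, Optional, Tuple

# Matches the header format suggested above, e.g.
#   # Generated by Copilot v3.2 on 2026-01-10, trained on internal codebase
HEADER = re.compile(
    r"Generated by (?P<tool>[\w.-]+) v(?P<version>\d+(?:\.\d+)*) on (?P<day>\d{4}-\d{2}-\d{2})"
)


def parse_header(source: str, max_lines: int = 5) -> Optional[Tuple[str, str, date]]:
    """Return (tool, version, date) from the first few lines, or None if absent."""
    for line in source.splitlines()[:max_lines]:
        match = HEADER.search(line)
        if match:
            try:
                day = date.fromisoformat(match["day"])
            except ValueError:
                return None  # a malformed date counts as a missing header
            return match["tool"], match["version"], day
    return None


def files_missing_header(paths: List[str]) -> List[str]:
    """Paths (assumed to be the AI-generated files in a commit) lacking a valid header."""
    missing = []
    for path in paths:
        with open(path, encoding="utf-8") as handle:
            if parse_header(handle.read()) is None:
                missing.append(path)
    return missing
```

A pre-commit hook could call `files_missing_header` on the staged AI-generated files and block the commit when the list is non-empty. How those files are identified in the first place is left open here.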

Can AI-generated code be trusted without verification?

No. AI models generate code based on patterns, not logic. They don’t understand safety, security, or correctness. Without verification, you’re relying on luck. In high-risk systems like medical devices or financial software, that’s unacceptable. Verification turns guesswork into proof.

What’s the difference between provenance and watermarking?

Provenance tracks the origin of code-what model, training data, and environment generated it. Watermarking embeds a hidden signature in the code itself to signal it was AI-generated. Provenance answers “Where did this come from?” Watermarking answers “Is this AI-made?” You need both for full accountability.
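A toy contrast makes the split concrete. The sketch below answers only the watermarking question (“is this AI-made?”), using a keyed HMAC tag appended as a comment. Everything in it is an assumption for illustration: the `SECRET_KEY`, the `# ai-wm:` tag format, and the use of a visible tag at all. Real watermarking schemes hide the signal in the code’s structure, and this is not Provably.ai’s zero-knowledge approach. Note what it cannot tell you: which model, version, or training data produced the code. That is provenance’s job.

```python
import hashlib
import hmac

# Hypothetical shared secret held by the code generator and the detector.
SECRET_KEY = b"example-watermark-key"
TAG_PREFIX = "# ai-wm: "


def _body_tag(body: str) -> str:
    """Keyed HMAC-SHA256 over the code body, truncated to 16 hex characters."""
    return hmac.new(SECRET_KEY, body.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def embed_watermark(code: str) -> str:
    """Append a tag line that only a holder of SECRET_KEY can produce."""
    body = code.rstrip("\n")
    return f"{body}\n{TAG_PREFIX}{_body_tag(body)}\n"


def is_ai_generated(code: str) -> bool:
    """Answer only 'is this AI-made?': True if the last line carries a valid tag."""
    lines = code.rstrip("\n").split("\n")
    if not lines[-1].startswith(TAG_PREFIX):
        return False
    claimed = lines[-1][len(TAG_PREFIX):]
    body = "\n".join(lines[:-1])
    return hmac.compare_digest(claimed, _body_tag(body))


snippet = "def add(a, b):\n    return a + b\n"
marked = embed_watermark(snippet)
print(is_ai_generated(marked))                              # True
print(is_ai_generated(snippet))                             # False
print(is_ai_generated(marked.replace("a + b", "a - b")))    # False: body no longer matches tag
```

Because the tag sits in a plain comment, deleting that one line makes marked code indistinguishable from unmarked code. That is exactly the stripping weakness noted in the watermarking section.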

Do I need formal methods if I use GitHub Copilot?

Not for every file. But for critical parts (authentication, data encryption, financial logic), you should. GitHub Copilot’s review catches common bugs, but it doesn’t prove memory safety or prevent buffer overflows. Formal verification tools like TrustInSoft do. Use Copilot for speed, formal tools for safety.
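What “proving” looks like differs from testing. Below is a toy version of one ingredient of abstract interpretation, an interval domain. Instead of running code on sample inputs, it tracks the whole range of values an index can take and checks that range against the buffer length. This is a simplified illustration, not how TrustInSoft or any specific analyzer is built. The buffer length and index expressions are made up for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Interval:
    """Closed integer range [lo, hi] standing in for every value a variable can take."""
    lo: int
    hi: int

    def __add__(self, other: "Interval") -> "Interval":
        return Interval(self.lo + other.lo, self.hi + other.hi)

    def __mul__(self, other: "Interval") -> "Interval":
        # The extremes of a product of ranges are among the corner products.
        corners = [a * b for a in (self.lo, self.hi) for b in (other.lo, other.hi)]
        return Interval(min(corners), max(corners))


def const(n: int) -> Interval:
    return Interval(n, n)


def index_provably_in_bounds(index: Interval, length: int) -> bool:
    """True only if every possible index value satisfies 0 <= i < length."""
    return index.lo >= 0 and index.hi < length


BUF_LEN = 16
i = Interval(0, 7)   # e.g. i = x & 7, for any integer x
j = Interval(0, 1)   # e.g. j = y & 1

safe = i * const(2) + j           # buf[2*i + j]
risky = i * const(2) + const(2)   # buf[2*i + 2]

print(safe, index_provably_in_bounds(safe, BUF_LEN))    # Interval(lo=0, hi=15) True
print(risky, index_provably_in_bounds(risky, BUF_LEN))  # Interval(lo=2, hi=16) False
```

The first access is safe for every possible `x` and `y`, which no finite test suite can show by sampling. For the second, the analysis cannot prove safety. Here the index really can reach 16, an off-by-one overflow. In general, though, interval analysis over-approximates, so a failed proof can also be a false alarm that a human has to triage.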

Are AI code verification tools expensive?

Some are, but not all. GitHub’s AI review is free. OpenAI’s reviewer is built into Copilot Enterprise. TrustInSoft and Provably.ai charge for enterprise use, but their pricing is often lower than the cost of a single security breach. For most teams, starting with free tools and scaling up is the smartest move.

Will AI ever verify its own code perfectly?

Not on its own. AI is probabilistic: it guesses. Verification requires deterministic logic. The future isn’t AI verifying itself. It’s AI generating code and a separate, rule-based verifier checking it. Think of it like a spellchecker for code: the AI writes, the verifier flags what’s wrong. That’s the model that works.
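The generate-then-verify split can be sketched in a few lines. In the sketch below, the three `clamp` candidates are hand-written stand-ins for AI output, not real model responses. The verifier is a deterministic rule checked exhaustively over a small bounded domain. That makes it a proof only for inputs in `[-5, 5]`. Real formal tools prove properties for all inputs, but the division of labor is the same.

```python
from itertools import product
from typing import Callable, List, Optional, Tuple

Clamp = Callable[[int, int, int], int]


def meets_spec(x: int, lo: int, hi: int, out: int) -> bool:
    """The rule the verifier enforces for clamp(x, lo, hi), assuming lo <= hi."""
    if x < lo:
        return out == lo
    if x > hi:
        return out == hi
    return out == x


def find_counterexample(f: Clamp, bound: int = 5) -> Optional[Tuple[int, int, int, int]]:
    """Deterministically try every (x, lo, hi) in [-bound, bound]^3 with lo <= hi."""
    for x, lo, hi in product(range(-bound, bound + 1), repeat=3):
        if lo > hi:
            continue  # outside the spec's precondition
        out = f(x, lo, hi)
        if not meets_spec(x, lo, hi, out):
            return (x, lo, hi, out)
    return None


def first_verified(candidates: List[Tuple[str, Clamp]]) -> Optional[str]:
    """Accept the first candidate with no counterexample; report why others fail."""
    for name, f in candidates:
        cex = find_counterexample(f)
        if cex is None:
            return name
        print(f"rejected {name}: counterexample (x, lo, hi, out) = {cex}")
    return None


# Hypothetical stand-ins for three AI-generated attempts at clamp().
CANDIDATES = [
    ("swapped_min_max", lambda x, lo, hi: min(lo, max(x, hi))),
    ("strict_bounds", lambda x, lo, hi: x if lo < x < hi else lo),
    ("max_of_min", lambda x, lo, hi: max(lo, min(x, hi))),
]

print("accepted:", first_verified(CANDIDATES))   # accepted: max_of_min
```

Each rejection comes with a concrete counterexample. That is the practical difference from an AI reviewer’s opinion: the verifier either accepts the code or shows you an input that breaks it.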

How long does it take to implement AI code verification?

It depends. GitHub’s six-step review can be adopted in a week. Formal verification tools like TrustInSoft take 3-6 months to integrate fully, including training. Watermarking and provenance tagging can be automated in under 24 hours with the right CI/CD plugins. Start with the easiest step (enable GitHub’s review) and build from there.

Ronak Khandelwal

AI writing code is like a toddler drawing a map to the fridge-looks cute, but you’ll starve if you follow it 🤖🍎

We need verification like we need seatbelts. Not because we think we’ll crash, but because when we do, we don’t wanna die.

Also, watermarking? Yes please. I don’t want my team’s PRs to be full of ghost-written spaghetti. Let’s make AI own its mess.

And hey, provenance isn’t just tech, it’s ethics. If your code came from a model trained on stolen proprietary code, that’s not innovation. That’s plagiarism with a neural net.

Let’s stop pretending AI is a dev. It’s a really fast intern who never went to college and keeps mixing up pointers with coffee mugs.

But hey, I’m not against AI. I just want it to wear a name tag. 🙏

Daniel Kennedy

Stop romanticizing this. Verification isn’t a ‘nice-to-have’; it’s a legal obligation in 2026. The EU AI Act doesn’t care if your startup is ‘moving fast.’ If your AI-generated code crashes an insulin pump, you’re liable. Period.

TrustInSoft isn’t ‘slow.’ It’s the only thing standing between your company and a $200M class-action lawsuit.

And GitHub’s review? It’s a toy. It catches null pointers. It doesn’t prove memory safety. Stop calling it ‘enough.’

Watermarking? Fine. But if you’re not tracking provenance, you’re just decorating the corpse.

And yes, I’m aggressive because I’ve seen teams ignore this and then cry when their app gets hacked. Wake up.

Taylor Hayes

Just wanted to say I appreciate how this post breaks it down without fearmongering.

My team started with GitHub’s AI review last month-simple, free, and it caught a legit buffer overflow we’d have missed.

We’re now adding provenance comments to every AI-generated file. Took 20 minutes per file at first, now it’s automated via a pre-commit hook.

And honestly? Developers were resistant at first. Now they ask, ‘Did Copilot generate this?’ before merging.

It’s not about replacing humans; it’s about giving them better tools to do their job. Small steps, big impact.

Also, the 6-step review? We do it over coffee on Fridays. Makes it feel less like a chore.

Sanjay Mittal

Formal verification is overkill for 90% of apps. I work on internal tools. No one dies if my script breaks.

GitHub review + watermarking = enough. Save TrustInSoft for nuclear plants.

Also, why are we pretending watermarking is foolproof? I’ve seen devs strip it out in 10 minutes with sed.

Focus on culture: train devs to question AI output. Not tools. People.

Mike Zhong

Let’s be real: AI code generation is just a glorified autocomplete on steroids.

It doesn’t understand scope, context, or consequences. It just predicts the next token.

Verification isn’t ‘expensive’; it’s the price of not being an idiot.

And calling it ‘trustworthy AI’ is marketing BS. There’s no such thing as trustworthy AI. There’s only trustworthy processes.

Stop giving AI credit for being a dev. It’s a parrot with a compiler.

Jamie Roman

Okay, so I’ve been thinking about this a lot, and I think the real issue isn’t the tools; it’s the mindset.

Like, we’ve trained developers to treat AI like a magic box: type a prompt, get code, merge it, done.

But code isn’t a recipe you pull from a jar. It’s a living system with dependencies, edge cases, and hidden consequences.

And when you treat AI like a junior dev who never asks questions, you’re setting yourself up for disaster.

I started doing ‘AI code reviews’ with my team where we sit together and ask: ‘Why did it choose this loop? What happens if input is null? Where’s the error handling?’

It’s slow at first. Takes 30 extra minutes per PR.

But now we’ve had zero critical bugs from AI-generated code in 6 months.

And honestly? My devs are way more thoughtful now. They don’t just copy-paste anymore.

It’s not about the tech; it’s about teaching people to think again.

Also, I use emoji in my comments now. It helps. 🤔

sonny dirgantara

ai code is just fancy autocomplete

github review is good enough for me

who cares if it has a watermark

just test it and move on

no need to overthink it lol

Andrew Nashaat

Oh, so we’re pretending AI-generated code is somehow ‘different’ from human-written code? Please.

Human code has buffer overflows, too. Human code has backdoors. Human code has spaghetti logic. So why are we treating AI like it’s the devil?

And watermarking? That’s a compliance theater scam. You think a hacker is gonna be stopped because a variable name has a weird suffix?

And ‘provenance’? You want me to track which model version generated which line? That’s not engineering; that’s OCD with a CI/CD plugin.

Stop making excuses for lazy development. The problem isn’t AI. The problem is that developers stopped reading code.

And if you’re relying on tools to catch your mistakes instead of learning to write safe code yourself, you deserve to get hacked.

Also, ‘TrustInSoft’? That’s not a tool. That’s a $500K/year consulting firm with a fancy UI.

Grow up. Write better code. Or get out of the industry.

And yes, I said it. 😎