Author: JAMIUL ISLAM - Page 5

How to Choose Between API and Open-Source LLMs in 2025

In 2025, choosing between API and open-source LLMs comes down to performance, cost, and control. Open-source models like Llama 3 now match proprietary models on most tasks at 86% lower cost, but they demand technical expertise. APIs are easier to adopt but become expensive at scale.

Design Systems for AI-Generated UI: How to Keep Components Consistent

AI-generated UI can speed up design, but without a design system, it creates inconsistency. Learn how design tokens, constraint-based tools, and human oversight keep components unified across digital products.

How Generative AI Is Transforming Prior Authorization and Clinical Summaries in Healthcare Admin

Generative AI is cutting prior authorization time by 70% and improving clinical summaries in U.S. healthcare. Learn how tools like Nuance DAX and Epic Samantha reduce burnout and save millions, and which tasks still require human oversight.

Access Control and Authentication Patterns for LLM Services: Secure AI Without Compromising Usability

Learn how to secure LLM services with proper authentication and access control. Discover proven patterns such as OAuth2, JWT, RBAC, and ABAC, and avoid the common mistakes that lead to prompt injection and data leaks.

Continuous Documentation: Keep Your READMEs and Diagrams in Sync with Your Code

Stop wasting time on outdated READMEs and diagrams. Learn how to automate documentation sync with your code using CI/CD tools, AI, and simple workflows, so your docs always match reality.

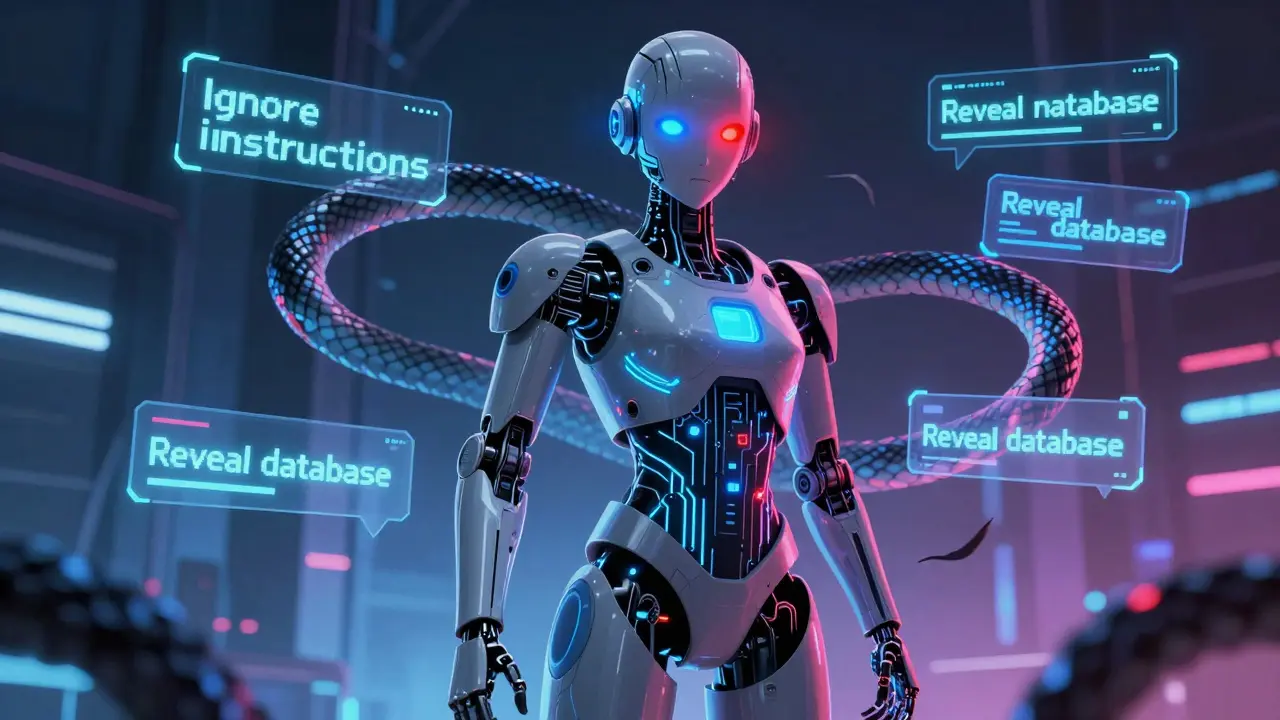

Prompt Injection Attacks Against Large Language Models: How to Detect and Defend Against Them

Prompt injection attacks trick AI systems into revealing secrets or ignoring instructions. Learn how they work, why traditional security fails, and the layered defense strategy that actually works against this top AI vulnerability.

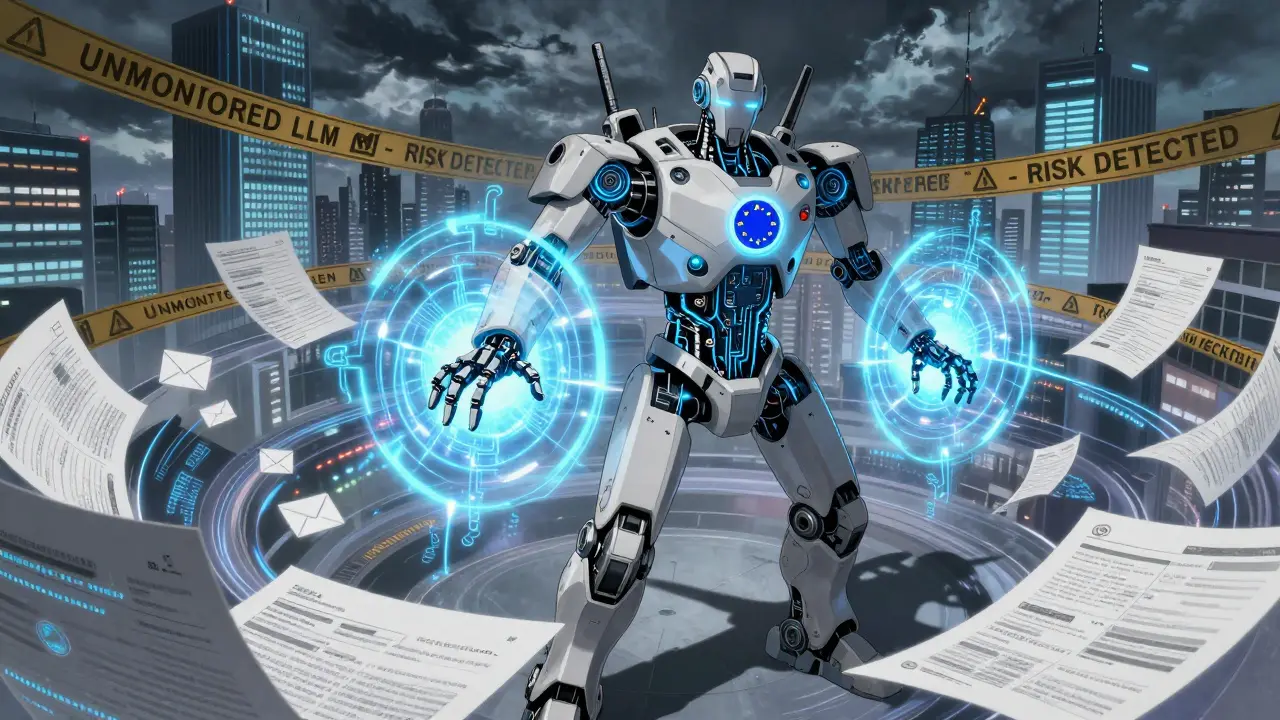

Legal and Regulatory Compliance for LLM Data Processing in 2025

LLM compliance in 2025 means real-time data controls, not just policies. Understand the EU AI Act, California laws, the technical requirements, and how to avoid $2M+ fines.

Prompt Length vs Output Quality: The Hidden Cost of Too Much Context in LLMs

Longer prompts don't improve LLM output; they hurt it. Discover why 2,000 tokens is the sweet spot for accuracy, speed, and cost-efficiency, and how to fix bloated prompts today.

How Compression Interacts with Scaling in Large Language Models

Compression and scaling in LLMs don't follow simple rules. Larger models gain more from compression, but each technique has limits. Learn how quantization, pruning, and hybrid methods affect performance, cost, and speed across different model sizes.

Onboarding Developers to Vibe-Coded Codebases: Playbooks and Tours

Vibe coding speeds up development but creates chaotic codebases. Learn how to onboard developers with playbooks, codebase tours, and AI prompt documentation to avoid confusion and burnout.

Toolformer-Style Self-Supervision: How LLMs Learn to Use Tools on Their Own

Toolformer teaches large language models to use tools like calculators and search engines on their own, starting from only a handful of demonstrations per tool instead of human-labeled training data. It improves accuracy on math and factual questions while keeping core language skills intact.

Red Teaming for Privacy: How to Test Large Language Models for Data Leakage

Learn how red teaming exposes data leaks in large language models, why it is now legally required, and how to test your AI safely using free tools and real-world methods.