VAHU: Visionary AI & Human Understanding - Page 2

Privacy and Data Governance for Generative AI: Protecting Sensitive Information at Scale

Generative AI is accelerating data leaks, not solving them. Learn how to enforce privacy controls, map AI data flows, and comply with global regulations before regulators come knocking.

Structured Output Generation in Generative AI: Stop Hallucinations with Schemas

Structured output generation uses schemas to force AI models to return consistent, machine-readable data, eliminating parsing errors and reducing hallucinations in production systems. This is now a standard feature across major AI platforms.

Unit Economics of Large Language Model Features: How Task Type Drives Pricing

LLM pricing isn't one-size-fits-all. Task type, whether simple classification or complex reasoning, determines cost. Learn how input, output, and thinking tokens drive pricing, and how smart routing cuts expenses by up to 70%.

Compute Infrastructure for Generative AI: GPUs vs TPUs and Distributed Training Explained

GPUs and TPUs power generative AI, but they work differently. Learn how each handles training, cost, and scaling, and why most organizations use both.

Compliance Controls for Vibe-Coded Systems: SOC 2, ISO 27001, and More

Vibe coding with AI tools like GitHub Copilot is transforming software development, but traditional compliance frameworks like SOC 2 and ISO 27001 can't keep up. Learn the technical controls, industry adoption trends, and real-world risks of AI-generated code compliance in 2026.

On-Prem vs Cloud for Enterprise Coding: Real Trade-Offs and Control Factors

Enterprise teams face real trade-offs when choosing between on-prem and cloud for coding. This article breaks down control, cost, speed, and compliance factors to help teams make intentional deployment decisions rather than just follow trends.

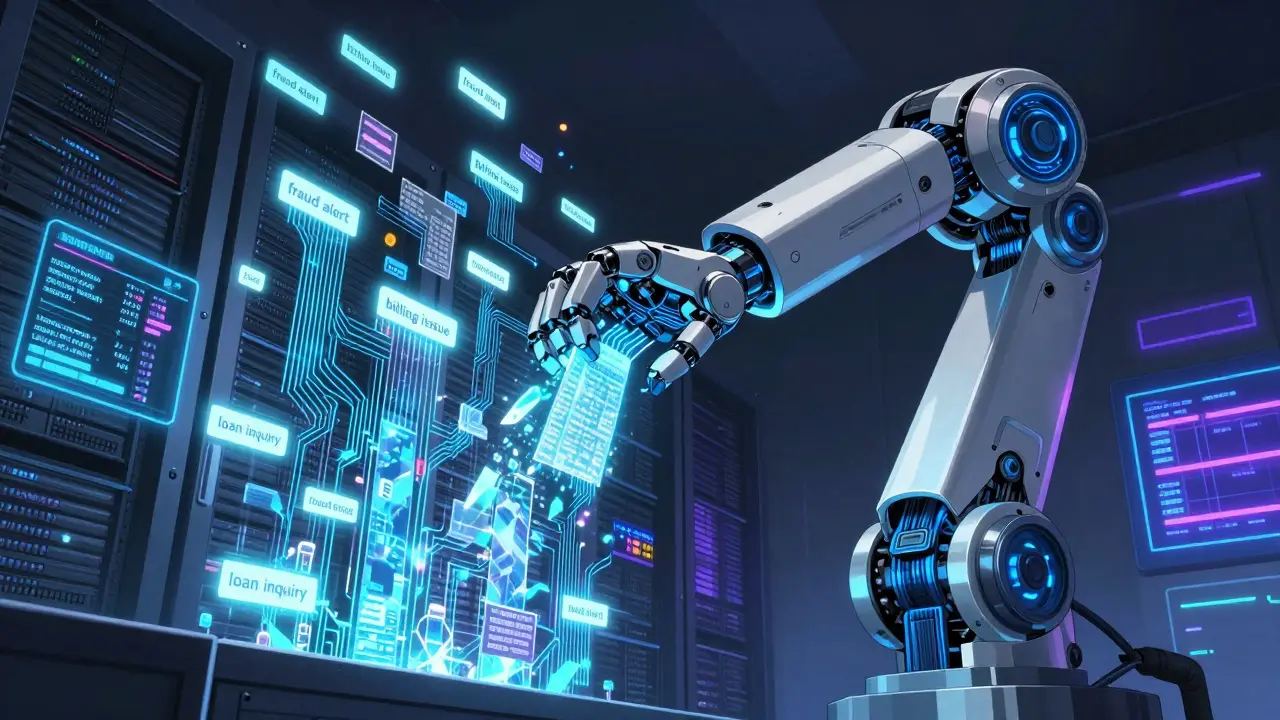

Data Extraction and Labeling with LLMs: Turn Unstructured Text into Structured Insights

LLMs are transforming how businesses turn unstructured text into structured data. From contracts to chat logs, automated extraction and labeling cut costs, speed up AI training, and unlock insights at scale.

Chain-of-Thought Prompts for Reasoning Tasks in Large Language Models

Chain-of-thought prompting helps large language models solve complex reasoning tasks by breaking problems into steps. It works best on models over 100 billion parameters and requires no fine-tuning, just well-structured prompts.

Ensembling Generative AI Models: Cross-Checking Outputs to Reduce Hallucinations

Ensembling generative AI models by cross-checking outputs reduces hallucinations by up to 72%, making it essential for high-stakes applications like healthcare and finance. Learn how it works, its costs, and when to use it.

Human Review Workflows for High-Stakes Large Language Model Responses

Human review workflows are essential for ensuring accurate, safe, and compliant AI responses in healthcare, legal, and financial applications. Learn how these systems reduce errors by up to 80% and why they're now legally required.

LLM Bias Measurement: Standardized Protocols Explained

Standardized protocols measure LLM bias using audit-style tests, statistical metrics, and domain-specific languages that detect discriminatory patterns. The EU AI Act mandates testing, and real-time monitoring is the likely next step.

How to Select Hyperparameters for Fine-Tuning LLMs Without Catastrophic Forgetting

Learn how to select hyperparameters for fine-tuning large language models without losing prior knowledge. Discover critical settings like learning rate and batch size, advanced techniques such as LoRA, and practical steps to avoid catastrophic forgetting in real-world AI applications.