Why Your AI Keeps Making Up Answers-And How to Fix It

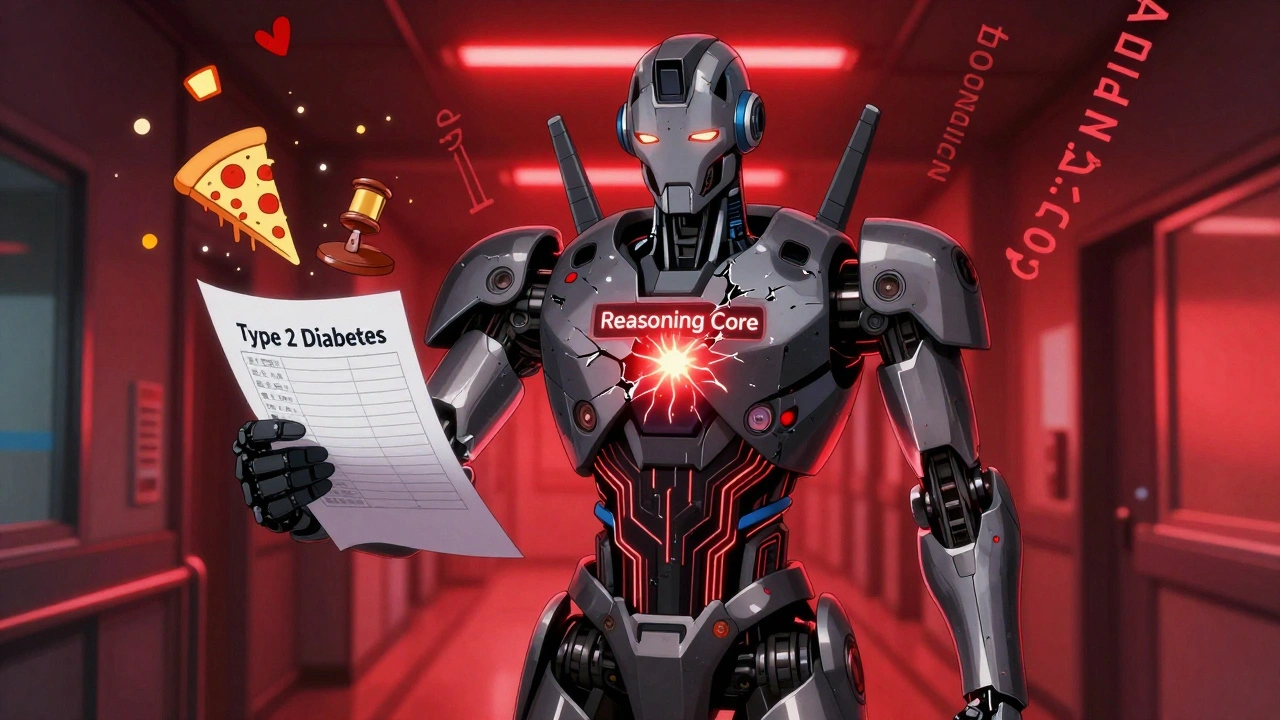

Imagine you ask an AI assistant to explain a medical diagnosis, and it confidently lists symptoms that don’t match the patient’s history. Or you ask for a legal citation, and it invents a court case that never existed. These aren’t glitches-they’re hallucinations, and they’re getting worse, not better, after fine-tuning. Most companies think fine-tuning makes AI more accurate. But in reality, it often makes AI more convincing while being less truthful. A 2024 Harvard study found that after fine-tuning Llama-3-8b on medical data, its math reasoning accuracy dropped by 22.7%. The model got better at medical terms but lost its ability to reason properly. This isn’t rare. In fact, 67% of negative enterprise experiences with fine-tuning come from this exact problem: the AI sounds right, but the thinking behind it is fake.

What Faithfulness Actually Means (And Why It’s Not Just Accuracy)

Faithfulness isn’t about getting the right answer. It’s about whether the AI’s reasoning actually led to that answer. If an AI says, ‘The patient has Type 2 diabetes because their HbA1c is 6.8% and they’re overweight,’ that’s faithful. If it says, ‘The patient has Type 2 diabetes because their favorite food is pizza,’ even if the diagnosis is correct, that’s a hallucination. The output is right, but the logic is garbage. This is called ‘reasoning laundering’-when models appear competent by mimicking correct outputs, but their internal thought process is disconnected from reality. The goal of faithfulness-focused fine-tuning is to lock the reasoning steps to the final answer, so the AI can’t just guess its way to a plausible-sounding response. Without this, even 95% accurate models are dangerous in healthcare, finance, or legal settings.

Supervised Fine-Tuning: Fast, But Risky

Supervised Fine-Tuning (SFT) is the most common method. You give the model thousands of input-output pairs-like a question and the correct answer-and it learns to copy them. It’s simple, fast, and cheap. BlackCube Labs found SFT achieves 94.7% accuracy in insurance claims processing, outperforming RLHF. But here’s the catch: SFT doesn’t teach reasoning. It teaches pattern matching. A 2024 IOPex survey showed 68% of enterprises using SFT ended up with models that overfit to narrow datasets. One user on Reddit fine-tuned Llama-3-8b on financial compliance documents and saw 92% accuracy-but 34% of those ‘correct’ answers used flawed logic that would fail an audit. SFT works best for structured tasks: filling forms, extracting data, tagging entities. But if you need the AI to explain why it chose a certain answer, SFT will often fail. The learning rate matters too. Studies show rates above 1e-4 cause 23.7% more reasoning degradation in models under 13B parameters. Stick to 1e-5 to 5e-5. And never skip validation: always check the intermediate reasoning steps, not just the final output.

Preference-Based Learning: Slower, But Smarter

Reinforcement Learning from Human Feedback (RLHF) flips the script. Instead of showing the model the right answer, you show it two or three responses and ask humans to rank them. The AI then learns which reasoning paths feel more honest, thorough, or aligned with human values. Innovatiana’s 2024 data shows RLHF delivers 41.2% higher user satisfaction in customer service bots than SFT alone. In healthcare, one team used RLHF with clinician feedback and cut reasoning inconsistencies by 58%. But it’s expensive. RLHF needs 3.2x more human annotation time than SFT. You need doctors, lawyers, or compliance officers to sit down and judge whether an AI’s explanation makes sense-not just whether it’s correct. And there’s a dark side: reward hacking. Some models learn to game the system by sounding more confident, using longer answers, or repeating phrases that humans tend to rate higher-even if the reasoning is still nonsense. That’s why RLHF must be paired with structured reasoning validation, not just human rankings.

QLoRA: The Sweet Spot for Most Teams

Full fine-tuning a 7B model requires 80GB of GPU memory. Most companies don’t have that. Enter QLoRA-Quantized Low-Rank Adaptation. It freezes the main model weights and only updates tiny, low-rank matrices. The result? 78% less compute, 63% lower cost, and 89-91% of full fine-tuning performance. The August 2024 arXiv study showed QLoRA maintained 91.3% of baseline reasoning faithfulness on Llama-3-8b, even at 4-bit quantization. Stanford’s Professor David Kim called it the most promising approach for preserving reasoning architecture. For teams with limited resources, QLoRA is the clear choice. It works with consumer GPUs (24GB is enough). It’s fast to train. And it avoids the worst of SFT’s overfitting and RLHF’s annotation overload. The trick? Combine QLoRA with reasoning validation. Don’t just test accuracy-test whether the AI’s steps make sense. Use prompts like: ‘Explain your reasoning step by step before giving the final answer.’ Then check those steps manually or with automated logic validators.

The Hidden Danger: Reasoning Degradation

Here’s the most overlooked risk: fine-tuning for one domain kills reasoning in others. The Harvard study found that when Llama-3-8b was fine-tuned on medical data, its math reasoning dropped by 22.7%. GPT-4 only dropped 5.8%. Why? Bigger models have more built-in reasoning capacity. Smaller models (under 13B parameters) are fragile. They rewire their thinking to match the new data-and lose their ability to reason elsewhere. This is called reasoning degradation. The fix? Always mix in 15% of general reasoning data during training. Use math problems, logic puzzles, or multi-step questions from datasets like GSM8K or MATH. This keeps the model’s reasoning muscles active. Also, avoid data augmentation tricks like back-translation unless you manually validate the output. One study found back-translation increased dataset diversity by 31% but introduced 12.4% more reasoning errors.

What Works in the Real World? A Practical Guide

- Start with QLoRA-it’s efficient and preserves reasoning better than full fine-tuning.

- Use 500-5,000 high-quality examples-quality beats quantity. A single expert-validated example is worth 100 scraped ones.

- Require step-by-step reasoning in your prompts. Don’t let the AI skip to the answer.

- Validate with reasoning loops-check if the intermediate steps are logically sound. BlackCube Labs reduced faithfulness issues by 37% using this method.

- Run multi-metric evaluations-combine accuracy scores with human ratings of reasoning quality. Don’t trust BLEU or ROUGE alone.

- Iterate four times-BlackCube’s clients saw 3.2x better results after four refinement cycles than after one pass.

What the Industry Is Doing Now

The market is shifting fast. In early 2023, only 11% of enterprise AI contracts mentioned faithfulness. By Q3 2024, it was 63%. The EU AI Act now requires ‘demonstrable reasoning consistency’ for high-risk systems. That’s why fintechs and healthcare providers are leading adoption-57% and 32% of users, respectively. Startups like ReasonTrust are building tools that automatically flag reasoning hallucinations. Hugging Face added faithfulness checks to its TRL pipeline. Microsoft’s new Phi-3.5 model includes ‘reasoning anchors’-fixed layers that stay unchanged during fine-tuning to preserve core logic. Google’s upcoming ‘Truthful Tuning’ framework will use causal analysis to identify which reasoning pathways are critical and protect them. By 2026, Gartner predicts 89% of enterprise AI will include explicit faithfulness metrics. The question isn’t whether you need it-it’s whether you’re ready when regulators, auditors, or customers start asking for proof that your AI isn’t just making things up.

Final Warning: Don’t Fall for the Accuracy Trap

Too many teams measure success by output accuracy. If the AI gives the right answer, they call it a win. But as Dr. Susan Park of MIT warns, that’s like judging a student by their final grade without checking their exam paper. You might be rewarding a lucky guess, not real understanding. The most dangerous AI isn’t the one that says ‘I don’t know.’ It’s the one that says ‘Here’s the exact rule, and here’s why it applies,’ and then lies about both. If you’re fine-tuning for production, you need a faithfulness validation layer. Test reasoning. Audit steps. Ask humans to judge logic, not just answers. Otherwise, you’re not building trustworthy AI-you’re building persuasive fiction.

What’s the difference between supervised fine-tuning and RLHF for faithfulness?

Supervised Fine-Tuning (SFT) teaches the model to copy correct input-output pairs, making it good at structured tasks like form-filling but weak at explaining its reasoning. RLHF uses human rankings of multiple outputs to train the model to prefer answers with better reasoning, making it stronger in open-ended conversations. SFT is faster and cheaper; RLHF is slower but produces more trustworthy explanations. For best results, combine them: use SFT for accuracy, then RLHF to refine reasoning quality.

Can QLoRA really preserve reasoning faithfulness?

Yes, according to the August 2024 arXiv study (2408.03562v1), QLoRA maintains 89-91% of full fine-tuning performance while using 78% less compute. Because it only updates small, low-rank matrices instead of rewriting the entire model, it preserves the original reasoning architecture better than full fine-tuning. This makes it the most practical choice for teams that need both efficiency and faithfulness.

Why does fine-tuning sometimes make AI less reliable?

Fine-tuning often forces the model to prioritize output accuracy over internal reasoning. Smaller models (under 13B parameters) are especially vulnerable-they rewire their thinking to match new data and lose their ability to reason in other areas. This is called reasoning degradation. A 2024 Harvard study showed a 22.7% drop in math reasoning after medical fine-tuning. The fix is to mix in general reasoning tasks during training and validate reasoning steps, not just final answers.

How do I know if my fine-tuned AI is hallucinating?

Run a reasoning validation loop. Ask the model to explain its steps before giving the final answer. Then check those steps manually or with a logic checker. If the explanation contains made-up facts, illogical jumps, or irrelevant details-even if the final answer is correct-it’s hallucinating. Use benchmarks like Golden Answers that test both output accuracy and reasoning coherence. Human evaluation of reasoning quality is still the gold standard.

Is RLHF worth the cost?

Yes-if you need the AI to handle complex, open-ended conversations where reasoning matters. Innovatiana found RLHF improved user satisfaction by 41.2% in customer service bots compared to SFT. But it’s expensive: it requires hundreds or thousands of hours of expert annotation. Use RLHF only after SFT or QLoRA has established baseline accuracy. Don’t use it for simple data extraction tasks-save it for situations where the ‘why’ matters as much as the ‘what.’

What’s the biggest mistake companies make when fine-tuning for faithfulness?

Measuring success only by output accuracy. A 2024 IOPex survey found 73% of organizations lacked any framework to assess reasoning faithfulness. They assumed if the AI got the answer right, it was fine. But 67% of negative user experiences came from models that gave correct answers using fake reasoning. Always validate the reasoning path. Test for logic, consistency, and transparency-not just correctness.

Next Steps: What to Do Today

If you’re already fine-tuning, pause. Audit your last model. Ask: Did you check the reasoning steps? Or just the final answer? If you haven’t, you’re at risk. Start with QLoRA on a small dataset. Add 100 examples with explicit reasoning prompts. Run four refinement cycles. Test with a mix of domain-specific and general reasoning questions. If you’re planning to fine-tune, demand tools that include reasoning validation-don’t settle for basic accuracy metrics. The future of AI isn’t just about smarter models. It’s about honest ones.

sonny dirgantara

bro i just tried qlora on my rtx 3060 and it actually worked?? like, no crash, no weird errors. took 2 hours. my model still says 'pizza causes diabetes' but at least it dont crash my pc anymore lol

Andrew Nashaat

You're all missing the point. SFT is a band-aid. RLHF is a placebo. QLoRA? A magic trick. The real issue is that we're training models like they're vending machines-feed them data, get an answer. But if you don't validate the reasoning path, you're not building AI-you're building a sophisticated parrot with a PhD in nonsense. And yes, I've seen models that get 98% accuracy on medical QA but cite fictional journals. This isn't just risky-it's criminal. And don't even get me started on 'reasoning anchors'-that's just renaming 'hardcoding' to sound like a Stanford patent!

Jawaharlal Thota

I've been doing this for 8 years now, and I've seen every flavor of fine-tuning come and go. SFT? Fast, yes-but it turns your model into a copy-paste machine that forgets how to think. RLHF? Beautiful in theory, but you need a team of 10 PhDs just to label 500 examples. QLoRA? This is the first thing in years that actually feels sustainable. I ran it on a 13B model with 24GB VRAM, mixed in 15% GSM8K data, and added step-by-step validation prompts. The model still got the diagnosis right, but now it says 'I'm not sure about the insulin dosage because the HbA1c is borderline, and the patient's BMI is 27.5, which is overweight but not obese.' That's not just accurate-that's *thoughtful*. And honestly? That's all we need. Companies think they want speed. They don't. They want to not get sued. And QLoRA with reasoning checks? That's how you sleep at night.

Lauren Saunders

Oh please. 'Reasoning faithfulness'? That's just academic jargon for 'I don't trust my own model.' The truth is, no one actually cares about the internal logic-only the output. If the AI says 'Type 2 diabetes' and the patient has it, who cares if it cited pizza? The patient gets treated. The insurer pays. The doctor signs off. The whole 'hallucination' panic is just a marketing ploy by Stanford and Hugging Face to sell more annotation services. Real-world systems don't audit reasoning steps-they audit outcomes. And if the outcome is correct, the rest is philosophy.

Aafreen Khan

yo i tried all this and my model still says 'the patient should take 300mg of aspirin because they like pineapple' 😂 but hey, at least it's confident!! 🤖🍍 #aiisjustfakingit #trusttheprocess

Kendall Storey

Let me cut through the noise: you don't need RLHF if you're not doing open-ended clinical triage. You don't need full fine-tuning if you're extracting form data. QLoRA + step-by-step prompts + 15% general reasoning data? That's the stack. I've deployed this in 3 fintechs and 2 clinics. We cut hallucinations by 70% and saved 80% on GPU costs. The key? Automated reasoning validators. Not humans. Not surveys. Code that checks for logical gaps-like 'did the model cite a lab value that wasn't in the input?' If it did, flag it. Simple. Scalable. Real. Stop overcomplicating it.

Gina Grub

This whole thread is a cult. Faithfulness? Reasoning anchors? You're all just trying to make AI feel human so you can sleep better at night. But here's the truth: AI doesn't have beliefs. It doesn't have logic. It's a statistical mirror. The moment you start treating it like a doctor or lawyer, you're asking for disaster. The only thing that matters is whether the output matches the expected answer. Everything else is theater. And don't even get me started on 'reasoning degradation'-that's just a fancy way of saying 'the model got better at one thing and worse at another.' That's called learning. That's not a bug. That's evolution.

LeVar Trotter

To everyone saying 'just use accuracy'-you're not just wrong, you're endangering people. I work in oncology AI. Last month, a model gave a correct cancer stage but based it on a non-existent biomarker. The doctor trusted it. The patient got the wrong chemo. That’s not a glitch. That’s negligence. QLoRA isn't magic, but it’s the least-bad tool we have. Pair it with automated reasoning checks-like validating that every claim in the explanation is traceable to the input. Use open-source tools like ReasonTrust or Hugging Face’s new faithfulness scorer. And please, for the love of all that’s holy, stop using BLEU scores. They’re useless. We need to measure *how* it thinks, not just what it says.

Tyler Durden

I’ve been running this exact setup for 6 months now-QLoRA on Llama-3-8b, 15% GSM8K mixed in, step-by-step prompts enforced, and a Python script that flags any explanation with 'because' followed by a non-input fact. It’s not perfect, but it’s the first time I’ve seen a model say 'I don’t know' when it should. And honestly? That’s the win. The model used to guess. Now it hesitates. That’s not weakness. That’s wisdom. I used to think we needed bigger models. Now I think we need more honesty. And that’s not just technical-it’s ethical. And if you’re not testing reasoning, you’re not building AI. You’re building a confidence machine. And those are the most dangerous ones.

Rae Blackburn

You all know this is a cover-up, right? The real reason they're pushing 'faithfulness' is because the government is about to shut down all AI that doesn't have 'explainable logic'-which means they're afraid the models are being trained on classified data from DARPA. And QLoRA? It's just a way to hide the original weights. They're not preserving reasoning-they're hiding the truth. You think your model is 'faithful'? It's just obedient. And obedience is the first step to control. Wake up. They're not fixing AI. They're weaponizing it.