Most people think of AI as something that just needs good instructions. You type a question, get an answer. Simple. But what if your first language isn’t English? What if you’re 70 years old and not tech-savvy? What if you have dyslexia, or you’re from a rural village in Nigeria where English is spoken differently? Standard prompts fail these users, often silently. They don’t get angry. They just stop using the tool. And that’s the real problem: inclusive prompt design isn’t about being nice. It’s about making AI work for everyone, not just those who fit a narrow mold.

Why Standard Prompts Exclude So Many People

Think about the prompts you’ve seen online. They’re often written like academic papers: complex, dense, full of jargon. "Explain the economic implications of quantum computing in the context of global supply chain optimization." That’s a great prompt for a PhD student. It’s useless for someone who learned English as a second language, or someone with low literacy, or someone whose brain processes information differently. A 2025 study found that 68.4% of users from marginalized groups quit using large language models after just three failed attempts. Why? Because the system didn’t adapt. It didn’t ask, "Do you need this simpler?" It just gave back a wall of text and assumed the user was the problem. This isn’t a glitch. It’s a design choice. Most AI tools are built for a default user: young, fluent in English, tech-literate, and neurotypical. That’s not the world. That’s a tiny slice of it.

The Inclusive Prompt Engineering Model (IPEM)

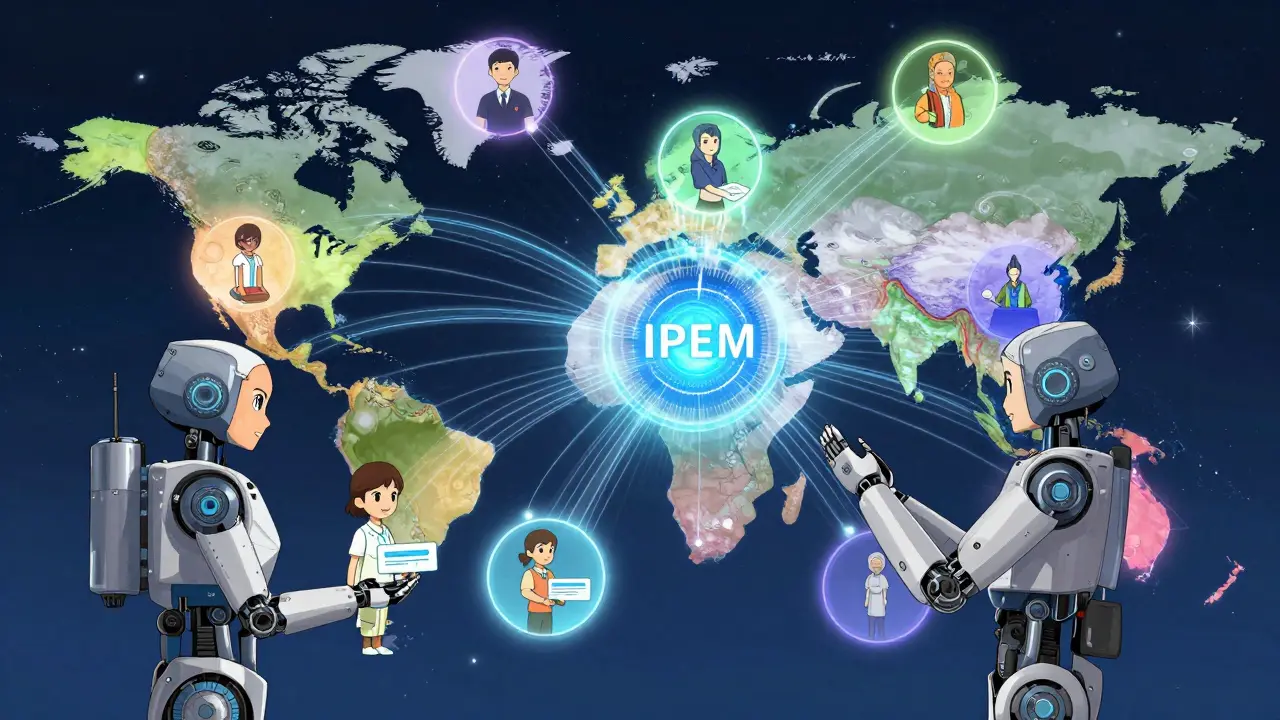

In 2024, researchers at the University of Salford introduced the first real framework for fixing this: the Inclusive Prompt Engineering Model, or IPEM. Unlike older methods that just tweak wording, IPEM is a system with three working parts that talk to each other.

First, there’s adaptive scaffolding. The prompt changes based on how the user responds. If someone rephrases their question three times, the system doesn’t get frustrated. It lowers the complexity, breaks the question into smaller steps, and might even offer a visual diagram instead of text.

Second, there’s cultural calibration. IPEM doesn’t just translate words; it accounts for context. In some cultures, asking for help directly is seen as weak. In others, excessive politeness slows things down. IPEM draws on more than 142 cultural dimensions, such as how people view authority, time, or individualism, to adjust tone and structure. It isn’t guessing: the data comes from the World Values Survey.

Third, there are accessibility transformers. These turn a standard prompt into multiple formats: simplified English, audio narration, image-based cues, or even links to sign language videos. A user with dyslexia might get a prompt with larger fonts, fewer words, and bullet points. A user with low vision might get a voice version. All of it comes from the same original request.

How Much Better Is It?

The numbers don’t lie. When tested across 127 real-world tasks, inclusive prompts improved success rates by 37.2% for non-native English speakers. For users over 65, outcomes improved by 31.4%. For people with cognitive disabilities, frustration dropped by nearly 30%.

Take a grandmother in Spain who spoke mostly Spanish and had never used AI before. After switching to a simplified prompt with visual icons and slow, clear language, she started asking for recipes, weather, and family photos every day. Her grandson said, "She used to say AI was for smart people. Now she says it’s for her."

Even more telling: for low-literacy users, response times dropped from 4.7 minutes to 2.1 minutes, and accuracy jumped from 63.2% to 82.7%. That’s not a small win. That’s the difference between getting help and giving up.

How It Compares to Other Approaches

There are other approaches out there: Google’s Prompting Guidelines, Anthropic’s Constitutional AI, and Automatic Prompt Engineer (APE). All good. But none of them were built with diversity in mind. APE can write great prompts, but it doesn’t know if your user is from India, speaks Hinglish, and needs the answer in 50 words or fewer. Google’s guidelines say "be clear." IPEM says, "Here’s exactly how to make it clear for someone who reads at a 5th-grade level and speaks Tamil." The difference isn’t subtle. In a direct comparison, IPEM was 35.6% more effective than APE for marginalized users. Why? Because IPEM doesn’t just optimize for quality; it optimizes for access.

Real-World Use Cases

Healthcare is where this matters most. A patient with limited English needs to understand their diagnosis, and a nurse using an AI assistant to explain treatment options can’t afford ambiguity. With IPEM, prompts adapt to the patient’s language level, cultural beliefs about medicine, and cognitive load. One hospital in Germany cut patient confusion calls by 41% after switching.

In education, Duolingo integrated IPEM into its AI tutor. Non-native speakers who used to drop out after 2 lessons now stay for 7, and overall engagement rose by 33.5%. Teachers noticed students were asking more questions, because they finally understood how to ask.

Government services are catching on too. In the EU, new rules under the AI Act now require public-facing AI tools to be accessible. That means city portals, tax help bots, and job application assistants must use inclusive prompts or risk fines.

What You Need to Implement It

You don’t need to be a data scientist. But you do need structure. IPEM has a three-phase rollout:

- Assessment (2-5 days): Identify your users. What languages do they speak? What disabilities are common? What cultural norms matter? Don’t guess. Survey them.

- Configuration (1-3 weeks): Plug into IPEM’s open-source tools. Choose which components to activate: simplified language? visual aids? cultural tone shifts? You don’t need all of them.

- Optimization (ongoing): Monitor. Track errors. Watch for cultural drift. A prompt that works in Nigeria might misfire in Ghana. Use the IPEM Cultural Drift Detection Toolkit to catch it early.
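The text doesn't describe the Cultural Drift Detection Toolkit's interface, so the following is only a minimal stand-in for the idea, not the toolkit itself. It assumes you log one `(region, succeeded)` pair per interaction, compares each region's error rate with the region where the prompt was originally validated, and flags regions that fall behind. The function names and the 10-point tolerance are hypothetical choices.

```python
from collections import defaultdict


def error_rates(events):
    """Compute the error rate per region from (region, succeeded) pairs."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for region, succeeded in events:
        totals[region] += 1
        if not succeeded:
            errors[region] += 1
    return {region: errors[region] / totals[region] for region in totals}


def detect_drift(events, baseline_region, tolerance=0.10):
    """Return regions whose error rate exceeds the baseline's by more than `tolerance`.

    `tolerance` is an absolute difference in error rate (0.10 = 10 percentage
    points). The default is a hypothetical choice, not a value from IPEM.
    """
    rates = error_rates(events)
    baseline = rates[baseline_region]
    return sorted(
        region
        for region, rate in rates.items()
        if region != baseline_region and rate - baseline > tolerance
    )


# Hypothetical log: a prompt tuned for Nigeria is later rolled out to Ghana.
log = (
    [("NG", True)] * 90 + [("NG", False)] * 10
    + [("GH", True)] * 70 + [("GH", False)] * 30
)
print(detect_drift(log, baseline_region="NG"))  # prints ['GH']
```

An absolute threshold keeps the rule easy to explain to non-specialists; a real deployment would likely also want a minimum sample size per region before flagging anything.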

The Risks and Limits

Inclusive doesn’t mean perfect. There are trade-offs. In highly technical fields, such as legal contracts or engineering specs, inclusive prompts can reduce precision by up to 18%, because simplifying language sometimes loses nuance. The fix? Use IPEM selectively. Keep the complex version for experts, and offer the simplified one as an option.

Another risk is stereotyping. If you oversimplify a culture (say, by assuming all Latin American users prefer warm tones), you might accidentally reinforce biases. That’s why IPEM uses real data, not assumptions, and why validation matters. Always test with real users from the group you’re designing for.

Also, not all languages are covered. Indigenous languages with fewer than 10,000 speakers are still left out. That’s a gap. The research team is working on it, but progress is slow, and community input is needed.
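The advice to use IPEM selectively can be read as a simple routing rule. The sketch below is one possible interpretation, not IPEM's own mechanism: experts in precision-critical fields get the original prompt by default, anyone can opt in or out of the simplified version, and everyone else gets the simplified version by default (that last default is an assumption). `UserProfile`, its fields, and `choose_variant` are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

# Fields where the text warns that simplification can cost precision.
HIGH_PRECISION_DOMAINS = {"legal", "engineering"}


@dataclass
class UserProfile:
    """Hypothetical profile fields used only for this illustration."""
    is_expert: bool
    domain: str
    prefers_simplified: Optional[bool] = None  # explicit choice, if the user made one


def choose_variant(profile: UserProfile) -> str:
    """Return 'original' or 'simplified' for the prompt served to this user."""
    # An explicit user choice always wins: simplification is offered, not forced.
    if profile.prefers_simplified is not None:
        return "simplified" if profile.prefers_simplified else "original"
    # Experts in precision-critical fields keep the full-detail prompt by default.
    if profile.is_expert and profile.domain in HIGH_PRECISION_DOMAINS:
        return "original"
    # Everyone else gets the inclusive version by default (an assumption).
    return "simplified"


print(choose_variant(UserProfile(is_expert=True, domain="legal")))  # original
print(choose_variant(UserProfile(is_expert=True, domain="legal", prefers_simplified=True)))  # simplified
print(choose_variant(UserProfile(is_expert=False, domain="healthcare")))  # simplified
```

Letting an explicit preference override every default is what keeps the simplified version an option rather than a label applied to the user.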

What’s Next?

The field is moving fast. In January 2026, IPEM 2.0 launched with real-time dialect adaptation. It can now recognize Nigerian Pidgin, Indian Hinglish, and Caribbean English, not just "standard" English. Google’s Project Inclusive, built for K-12 classrooms, is coming in Q3 2026. Anthropic’s Claude 4 will include IPEM principles by May 2026. Microsoft’s Inclusive Prompt Designer already holds 22% of the enterprise market. By 2028, experts predict 92% of enterprise AI systems will use formal inclusive prompt frameworks. Just as websites had to become mobile-friendly, AI systems will have to become inclusive.

Final Thought: It’s Not Optional Anymore

This isn’t about ethics. It’s about economics. Professor Kenji Tanaka calculated that excluding people with disabilities from AI tools costs $1.2 trillion a year. That’s not just a number. That’s 1.2 trillion chances to help someone. The companies that win aren’t the ones with the smartest AI. They’re the ones who built AI that works for the most people. The grandmother. The nurse. The student. The non-native speaker. The person who just wants to understand. If your AI doesn’t work for them, it doesn’t work.

What is inclusive prompt design?

Inclusive prompt design is the practice of creating AI instructions that work well for people of all backgrounds, abilities, and languages. It’s not just about using simple words; it’s about adjusting structure, tone, format, and cultural context so that users with low literacy, limited English, cognitive differences, or diverse cultural backgrounds can understand and use AI tools effectively.

How does IPEM differ from regular prompt engineering?

Regular prompt engineering focuses on getting the best output from an AI, usually for a single ideal user. IPEM adds layers: it adapts the prompt in real time based on the user’s language level, cultural background, and cognitive needs. It includes accessibility features like visual aids and simplified language, and it uses real-world data to avoid stereotypes. It’s designed for diversity, not just efficiency.
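The "adapts in real time" part can be pictured as a small state machine. This sketch follows the adaptive-scaffolding behavior described earlier (three rephrasings of the same question trigger a simpler response), but everything concrete here is an assumption: the level names, the `Scaffold` class, and especially the rephrase detector, which is a crude word-overlap (Jaccard) similarity standing in for whatever IPEM actually uses.

```python
import re

# Hypothetical complexity levels, from most to least complex.
LEVELS = ["standard", "plain", "step_by_step"]


def _words(text):
    """Lowercase word set used for a rough similarity check."""
    return set(re.findall(r"[a-z']+", text.lower()))


def looks_like_rephrase(previous, current, threshold=0.5):
    """Crude heuristic: treat two messages as a rephrase if their word sets
    overlap heavily (Jaccard similarity at or above `threshold`)."""
    a, b = _words(previous), _words(current)
    if not a or not b:
        return False
    return len(a & b) / len(a | b) >= threshold


class Scaffold:
    """Drops one complexity level after `max_rephrases` consecutive rephrasings."""

    def __init__(self, max_rephrases=3):
        self.max_rephrases = max_rephrases
        self.level = 0
        self.rephrases = 0
        self.last_message = None

    def observe(self, message):
        """Record a user message and return the complexity level for the reply."""
        if self.last_message is not None and looks_like_rephrase(self.last_message, message):
            self.rephrases += 1
        else:
            self.rephrases = 0
        self.last_message = message
        if self.rephrases >= self.max_rephrases and self.level < len(LEVELS) - 1:
            self.level += 1
            self.rephrases = 0  # give the simpler level a fresh chance
        return LEVELS[self.level]


messages = [
    "how do I renew my passport",
    "how can I renew my passport",
    "how do I renew my old passport",
    "how do I renew my passport please",
]
scaffold = Scaffold()
print([scaffold.observe(m) for m in messages])
# prints ['standard', 'standard', 'standard', 'plain']
```

The level only ever moves down in this sketch; a real system would also need a way to step back up once the user is comfortable.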

Can I use inclusive prompts with GPT-4 or Claude?

Yes. Version 1.2 of IPEM, released in September 2025, is compatible with all major LLMs, including OpenAI’s GPT-4o, Anthropic’s Claude 3.5, Google’s Gemini 1.5 Pro, and Meta’s Llama 3. You need to use API version 2024-06-01 or later. The framework works as a layer on top of your existing prompts; it doesn’t replace them, it enhances them.
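To make "a layer on top of your existing prompts" concrete, here is a minimal sketch under stated assumptions: the existing prompt is kept verbatim and a block of audience instructions is appended after it. `AccessProfile` and `layer_prompt` are hypothetical names, not part of IPEM's published API, and the resulting string would be sent to whichever model you already use. The profile values reuse the Tamil, 5th-grade, and 50-word example from earlier in the article.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AccessProfile:
    """Hypothetical per-user settings; field names are illustrative, not IPEM's."""
    language: str = "English"
    reading_grade: Optional[int] = None  # target reading level, e.g. 5 for 5th grade
    max_words: Optional[int] = None      # cap on answer length
    bullet_points: bool = False          # prefer short bullets over dense paragraphs


def layer_prompt(base_prompt: str, profile: AccessProfile) -> str:
    """Return the existing prompt unchanged, followed by audience instructions."""
    notes = [f"Answer in {profile.language}."]
    if profile.reading_grade is not None:
        notes.append(f"Use words and sentences a grade-{profile.reading_grade} reader can follow.")
    if profile.max_words is not None:
        notes.append(f"Keep the answer under {profile.max_words} words.")
    if profile.bullet_points:
        notes.append("Use short bullet points instead of long paragraphs.")
    return base_prompt + "\n\nAudience notes:\n" + "\n".join(f"- {n}" for n in notes)


prompt = layer_prompt(
    "Explain how to fill in the tax refund form.",
    AccessProfile(language="Tamil", reading_grade=5, max_words=50),
)
print(prompt)
```

Because the original prompt survives as an untouched prefix, the layer can be switched off or A/B tested without rewriting anything your team already relies on.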

Is inclusive prompt design expensive to implement?

The upfront cost is higher: teams report needing 30-40% more development time initially. But in the long term, it saves money. One European bank cut customer support tickets by 29%. Others saw fewer user drop-offs and higher engagement. The ROI is clear: expanding your user base is cheaper than losing users.

What if I don’t know my users’ cultural background?

Start with what you know. Survey your users. Ask about language, comfort with tech, and preferred communication style. IPEM’s cultural calibration engine uses global data, so even if you don’t know every detail, it can make educated adjustments. Avoid assumptions. Test with real people from your target group before launching.

Are there free tools to try inclusive prompting?

Yes. The IPEM framework is open-source and available on GitHub with over 867 stars as of January 2026. The community has built hundreds of ready-to-use prompt templates for education, healthcare, and customer service. There’s also a free Coursera course from the University of Washington with over 42,000 enrollments.

Does inclusive prompting make AI slower?

Slightly. IPEM adds about 237ms of extra latency on average, and uses 15% more processing power. But for most users, the experience feels faster because responses are clearer and require fewer retries. The trade-off is worth it: users complete tasks quicker and with less frustration.

How do I know if my prompts are truly inclusive?

Test them. Use real users, not just your colleagues. Watch how long they take. Do they ask for help? Do they give up? Use the IPEM Cultural Drift Detection Toolkit and accessibility checkers to audit prompts. Look at metrics like task completion rate, error rate, and user satisfaction. If 70% of users succeed and feel understood, you’re on the right track.
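The metrics above can be rolled into a small per-group report. This is a sketch, not an IPEM tool: the `Session` record and its fields are hypothetical, and "succeed and feel understood" is interpreted here as a session where the task was completed and the user answered yes to a post-task question. The 70% target comes from the text; the session data is invented purely to exercise the code.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Session:
    group: str              # e.g. "non-native speakers", "users over 65"
    completed: bool         # did the user finish the task?
    errors: int             # failed attempts or corrections during the task
    felt_understood: bool   # answer to a post-task survey question


def audit(sessions, target=0.70):
    """Summarize each user group and mark whether it meets the success target."""
    by_group = defaultdict(list)
    for s in sessions:
        by_group[s.group].append(s)
    report = {}
    for group, items in by_group.items():
        n = len(items)
        success = sum(s.completed and s.felt_understood for s in items) / n
        report[group] = {
            "completion_rate": sum(s.completed for s in items) / n,
            "errors_per_session": sum(s.errors for s in items) / n,
            "success_rate": success,
            "meets_target": success >= target,
        }
    return report


# Invented test data, for illustration only.
sessions = (
    [Session("non-native speakers", True, 0, True)] * 8
    + [Session("non-native speakers", False, 2, False)] * 2
    + [Session("users over 65", True, 1, True)] * 5
    + [Session("users over 65", False, 3, False)] * 5
)
report = audit(sessions)
for group, row in report.items():
    print(group, row["success_rate"], row["meets_target"])
```

Reporting per group rather than overall matters: a healthy average can hide one group that is quietly giving up.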

Kirk Doherty

Been using AI tools for my grandma’s care plan and this makes total sense. She used to just shrug and turn off the tablet. Now with the simplified prompts, she asks for weather updates and recipes like it’s nothing. No drama, no frustration. Just works.

Kinda wild how we assumed the problem was them not getting it, when really it was the tool not meeting them halfway.

Dmitriy Fedoseff

Let’s be real - this isn’t ‘inclusive design,’ it’s basic human decency. We’ve spent decades building tech for the privileged few and then acting shocked when the rest of the world walks away. IPEM isn’t revolutionary. It’s just the first time someone bothered to look up from their code and see actual people.

And yes, it’s about economics. $1.2 trillion is the price of ignoring 80% of humanity. We’re not building AI to help people. We’re building it to impress venture capitalists. This changes that.

Meghan O'Connor

First of all, ‘IPEM’? That’s not a name, that’s a corporate acronym vomit. And ‘cultural calibration’? Please. You’re not ‘pulling from 142 cultural dimensions’ - you’re slapping on a generic ‘warm tone’ filter and calling it ethnography.

Also, ‘simplified English’? That’s just bad English. And why is there no mention of how this breaks down with non-Latin scripts? Or sign language that varies by region? This feels like a TED Talk dressed up as research.

Morgan ODonnell

My cousin in rural Nigeria uses AI to check crop prices. She doesn’t care about jargon. She just wants to know if tomatoes are worth selling this week. I showed her the IPEM version - she laughed and said, ‘Finally, something that talks like me.’

It’s not magic. It’s just listening. And yeah, it works. I’ve seen it. No need to overcomplicate it.

Liam Hesmondhalgh

Oh great, another woke tech fad. Next they’ll be forcing AI to use Irish Gaelic because ‘some people feel seen.’ Newsflash: most people in Ireland speak English. And if you can’t handle a clear prompt, maybe you shouldn’t be using AI at all.

This isn’t accessibility - it’s dumbing down for the sake of virtue signaling. IPEM sounds like a buzzword factory.

Patrick Tiernan

So we’re making AI talk like a children’s book now? Cool. Next they’ll make it sing lullabies. Honestly, if you can’t write a proper sentence, maybe you shouldn’t be asking AI for help. This is just coddling people who can’t handle basic literacy.

Also, 37% improvement? Sure. But at what cost? Precision is getting sacrificed for feel-good metrics. I’d rather get a perfect answer I don’t understand than a dumb one that makes me feel smart.

Patrick Bass

Just a quick note - the IPEM framework’s open-source toolkit on GitHub is actually really well documented. The prompt templates for healthcare are spot-on. I used one for a patient with aphasia and the difference was night and day.

Also, the 237ms latency? Totally worth it. People weren’t abandoning the tool because it was slow - they were abandoning it because it made them feel stupid.

Tyler Springall

This is the most condescending pile of tech-bro nonsense I’ve read all year. You’re telling me that a 70-year-old in Spain needs visual icons to understand a recipe? That’s not inclusive - that’s infantilizing. And who decided that ‘simplified’ means ‘dumbed down’? Who gave you the right to redefine intelligence based on your own biases?

This isn’t progress. It’s paternalism with a GitHub repo.