Generative AI isn’t just another tool: it’s rewriting how businesses operate, how content is created, and how decisions are made. But with great power comes great risk. ChatGPT, Midjourney, and other generative models can produce convincing fake text, deepfake videos, or biased hiring recommendations, all without human oversight. As of 2025, 68% of enterprises have some kind of AI governance in place, but only 22% have what experts call "mature" governance. That gap is where things go wrong. Organizations aren’t failing because they lack tools. They’re failing because they’re using the wrong governance model.

Why Governance Isn’t Optional Anymore

In 2023, companies treated generative AI like a cool experiment. By 2025, it’s in production. A bank uses AI to screen loan applications. A hospital relies on it to analyze X-rays. A retailer uses it to write product descriptions at scale. Each of these uses carries legal, ethical, and reputational risks. One biased algorithm can trigger a lawsuit. One hallucinated medical diagnosis can cost a life. One leaked training dataset can break GDPR. The ModelOp 2025 AI Governance Benchmark Report found that companies with weak governance see 41% more regulatory violations and 37% more bias incidents. Meanwhile, those with strong governance report 23% higher ROI on AI projects. It’s not about slowing down. It’s about making sure you’re moving in the right direction.

Council-Based Governance: The Committee Trap

The most common starting point? A council. Cross-functional teams (legal, compliance, data science, marketing) meet monthly to review AI projects. It sounds reasonable. In theory, it brings diverse perspectives. In practice, it creates bottlenecks. Forty-three percent of enterprises adopted council-based models in 2023. By 2025, 62% of them say the council adds 14 to 21 days to every AI deployment. A senior data scientist at a Fortune 500 bank wrote on Reddit that their council took 18 days just to approve a simple chatbot for customer service. By then, the business unit had already built a workaround using an unapproved tool. Councils work best when they’re advisory, not gatekeepers. But too often, they become the gate. The result? Shadow AI. Employees bypass the council entirely. Microsoft’s 2024 study found that 78% of workers who use AI bring their own tools to work. When governance feels like a roadblock, people drive around it.

Policy-Driven Frameworks: Rules That Don’t Scale

Thirty-eight percent of organizations moved from councils to written policies. These are detailed documents covering data quality, model training, bias testing, and output monitoring. They’re often based on NIST’s AI Risk Management Framework, which 68% of companies now cite as their baseline. The problem? Policies are static. Generative AI evolves daily. A policy that says "all models must be validated with 10,000 test cases" sounds solid until you’re deploying 50 new models a week. Healthcare companies, which need explainability for medical AI, found that rigid policies caused 42% of models to be rejected for non-critical issues, wasting over 11,000 engineering hours in Q1 2025 alone. Policies are necessary, but they can’t be the whole system. They need to be living documents, updated weekly, not quarterly. And they need to be paired with tools that enforce them automatically, not just people checking boxes.

Accountability Models: Who’s Really in Charge?

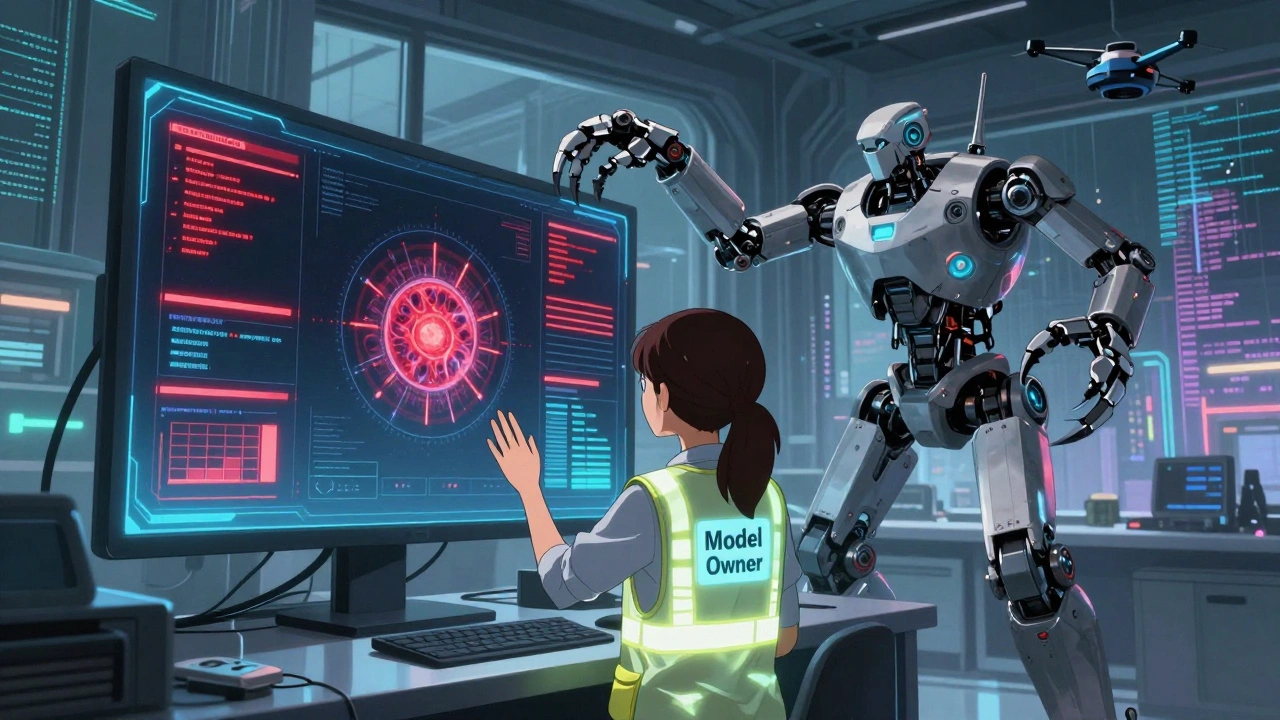

The most effective models in 2025 don’t rely on committees or thick policy manuals. They rely on accountability. That means assigning clear ownership: Who built it? Who monitors it? Who answers when it fails? Nineteen percent of advanced organizations have adopted this model. And they’re seeing results: 33% faster deployment cycles, 55% fewer AI-related incidents, and 28% higher business value from AI projects. How? They embed governance into the workflow. Every AI model has a designated owner, typically a data scientist or product manager, who signs off on risk assessments before launch. They use automated tools to track performance in real time. If a model starts generating biased responses, the system flags it. If it violates a privacy rule, it’s automatically paused. No committee needed. This isn’t about removing oversight. It’s about making it real-time, not retrospective. Accountability means a person’s name is on the line, not a department’s.
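As a rough illustration of the ownership-plus-automation pattern described above, the sketch below models a registry entry with a named owner, a pre-launch sign-off, and an automatic pause on privacy violations. The class, field, and event names (`GovernedModel`, `risk_signed_off`, `"privacy_violation"`) are hypothetical and not taken from any specific governance product.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List


class Status(Enum):
    ACTIVE = "active"
    PAUSED = "paused"


@dataclass
class GovernedModel:
    name: str
    owner: str                      # a named person, not a department
    risk_signed_off: bool = False   # owner's pre-launch risk assessment
    status: Status = Status.PAUSED  # models stay paused until launched
    flags: List[str] = field(default_factory=list)

    def launch(self) -> None:
        """Activate the model only after the owner has signed off."""
        if not self.risk_signed_off:
            raise PermissionError(f"{self.owner} has not signed off on {self.name}")
        self.status = Status.ACTIVE

    def report(self, event: str) -> None:
        """Record a monitoring event; privacy violations pause the model."""
        self.flags.append(event)
        if event == "privacy_violation":
            self.status = Status.PAUSED


model = GovernedModel(name="support-chatbot", owner="J. Rivera")
model.risk_signed_off = True
model.launch()
model.report("biased_response")    # flagged, still running
model.report("privacy_violation")  # flagged and paused
print(model.status.value, model.flags)
```

In a real deployment the events would come from a monitoring system rather than manual calls, but the design point is the same: every flag and every pause is attached to a model with one named owner.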

The Five Pillars of Real AI Governance

Effective governance isn’t one model. It’s five working parts:

- Policy and Compliance: Align with the EU AI Act, the U.S. Blueprint for an AI Bill of Rights, and ISO/IEC 42001:2023. Don’t guess; know what’s required.

- Transparency and Explainability: Can you explain why the AI said what it said? In healthcare, 87% of organizations require full execution graphs. In finance, explainability matters less than audit trails.

- Security and Risk Management: Red teaming isn’t optional. Financial institutions require it for 92% of AI systems. Use automated tools to simulate attacks on your models.

- Ethical Considerations: Bias isn’t a bug; it’s a design flaw. Test for demographic disparities in outputs. Use diverse training data. Audit for fairness, not just accuracy.

- Continuous Monitoring and Auditing: Deployments aren’t the end. Monitor outputs daily. Track drift. Measure impact. Tools like Essert’s dashboard reduced compliance review time from 14 days to 8 hours for one client.
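One simple way to test for demographic disparities in outputs is to compare the rate of positive outcomes across groups. The sketch below does this for a handful of made-up loan decisions; the group labels, the data, and the `MAX_GAP` threshold are illustrative assumptions, not a legal or regulatory standard.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of positive outcomes per group.

    `records` is an iterable of (group, approved) pairs.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, approved in records:
        totals[group] += 1
        positives[group] += int(approved)
    return {g: positives[g] / totals[g] for g in totals}


def parity_gap(records):
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(records)
    return max(rates.values()) - min(rates.values())


# Hypothetical outcomes: (group label, was the applicant approved?)
outcomes = [
    ("A", True), ("A", True), ("A", True), ("A", False),
    ("B", True), ("B", False), ("B", False), ("B", False),
]

MAX_GAP = 0.2  # illustrative threshold only
gap = parity_gap(outcomes)
print(f"gap={gap:.2f}", "FLAG" if gap > MAX_GAP else "ok")  # prints "gap=0.50 FLAG"
```

A gap like this does not prove bias on its own, but it is the kind of signal an audit for fairness (rather than accuracy alone) would surface for review.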

Industry Differences: One Size Doesn’t Fit All

Governance isn’t universal. It’s contextual.

- Healthcare: Needs explainability above all. If an AI recommends a treatment, doctors must understand why. Models that can’t show their reasoning get blocked.

- Finance: Security and fraud detection come first. Red teaming, encryption, and access controls are non-negotiable. Teams also track model drift obsessively, because small changes in loan approval rates can signal bias.

- Retail: Speed matters. Retailers use lightweight governance: automated content filters, brand safety checks, and real-time sentiment monitoring. No committees. No delays.

- Manufacturing: Focus on operational safety. If an AI controls a robotic arm, it can’t hallucinate. Manufacturers use real-time monitoring and fail-safes.

Pick the right focus for your industry. Don’t apply healthcare rules to a marketing chatbot.

The "Bring Your Own AI" Problem

Here’s the dirty secret: your employees are already using AI. They’re using ChatGPT to write emails. Midjourney to design logos. Claude to summarize reports. And they’re not telling you. Microsoft found that 75% of employees use AI at work, and that 78% of those users bring their own tools. That’s not rebellion. It’s efficiency. But it’s also a massive governance gap. Organizations that try to ban it fail. The solution? Sandboxing. Create approved, secure environments where employees can use AI safely. One company rolled out a corporate version of ChatGPT with built-in compliance filters. Within six months, shadow AI usage dropped from 78% to 11%. Compliance jumped from 31% to 89%. Stop fighting shadow AI. Manage it.

What Mature Governance Looks Like

Mature governance doesn’t feel like bureaucracy. It feels like infrastructure. Think of it like cybersecurity. No one asks for permission to install antivirus software. It’s just there. Same with AI governance in top-performing companies:

- AI tools are pre-approved and pre-audited.

- Models are monitored automatically for drift, bias, and compliance.

- Every model has an owner, a risk score, and a kill switch.

- Training is built into onboarding, so every engineer knows how to flag a risky model.

- Leadership reviews governance metrics monthly, not quarterly.
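Automatic drift monitoring can be as simple as comparing the distribution of a model's recent outputs against a baseline. The sketch below uses the Population Stability Index (PSI), a measure commonly used in credit modeling; the bin values are made up, and the 0.2 cutoff is a widely quoted rule of thumb rather than a formal standard.

```python
import math


def psi(expected, actual, eps=1e-6):
    """Population Stability Index between two binned distributions.

    Both arguments are lists of bin proportions that each sum to 1.
    """
    if len(expected) != len(actual):
        raise ValueError("distributions must have the same number of bins")
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) on empty bins
        total += (a - e) * math.log(a / e)
    return total


# Hypothetical share of model scores falling into four score bands.
baseline = [0.25, 0.25, 0.25, 0.25]   # at launch
this_week = [0.10, 0.20, 0.30, 0.40]  # recent production traffic

DRIFT_THRESHOLD = 0.2  # rule-of-thumb cutoff, an assumption for this sketch
score = psi(baseline, this_week)
needs_review = score > DRIFT_THRESHOLD
print(f"PSI={score:.3f}", "review required" if needs_review else "stable")
```

A result above the threshold would be routed to the model's owner, who decides whether to retrain, adjust, or use the kill switch.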

The Biggest Mistake: Governance Theater

Dr. Marcus Wong from the TechPolicy Institute calls it "governance theater." That’s when companies create the appearance of control without real substance. A fancy AI ethics committee. A 50-page policy no one reads. A dashboard no one checks. These organizations check the box. Then they get burned. Real governance isn’t about having a policy. It’s about having consequences. If a model causes harm, someone loses their bonus. If a team ignores a red flag, they get retrained. If a tool is unsafe, it’s blocked, no exceptions. Ask yourself: If your AI made a mistake tomorrow, would anyone be held accountable? If the answer is no, you don’t have governance. You have theater.

Where We’re Headed: Dynamic Guardrails

The next big shift? From static rules to dynamic guardrails. Agentic AI (systems that don’t just generate text, but make plans, book meetings, and place orders) is coming fast. By Q4 2025, 45% of large enterprises will use guardrails that adjust in real time based on risk levels. Imagine this: A marketing AI wants to post a promotional message. The system checks: Is the audience’s time zone active? Is the message within brand tone? Is the company free of a current PR crisis? If the answer to any of these is no, the post is blocked. If all are yes, it’s approved, no human needed. This is the future. Governance must be fast, automated, and embedded, not a bottleneck.

How to Start

You don’t need a perfect system. You need a starting point.

1. Map your AI use cases. List every project in production or planning. Don’t skip the small ones.

2. Assign ownership. Who’s responsible for each? Name them. Make it official.

3. Pick one risk to fix. Bias? Security? Compliance? Start there.

4. Use automated tools. Don’t rely on spreadsheets. Tools like ModelOp, Essert, or IBM Watson can monitor outputs, detect drift, and flag violations.

5. Train your team. Three hours of training won’t cut it. Expect 25-35 hours of instruction for full adoption.

6. Measure progress. Track bias incidents, deployment speed, and compliance violations. If the numbers aren’t improving, your governance model isn’t working.

Final Thought: Governance Is a Growth Engine

The best companies don’t see governance as a cost. They see it as a catalyst. It’s not about saying no. It’s about saying "yes, safely." Organizations with mature governance deploy AI faster, scale it wider, and earn more trust. They don’t fear the future. They shape it. If you’re still waiting for the perfect framework, you’re already behind. Start small. Be accountable. Build continuously. The goal isn’t to control AI. It’s to make it work for you without breaking anything.

What’s the difference between AI governance and AI ethics?

AI ethics is about values: fairness, transparency, human dignity. AI governance is about systems: policies, ownership, monitoring, enforcement. Ethics tells you what’s right. Governance ensures you do it.

Do small businesses need AI governance?

Yes, even if you’re using AI for simple tasks like email drafting or customer replies. One biased response can damage your brand. One data leak can cost you customers. Start with ownership: Who’s using AI? What’s the risk? Then add one automated check, like a content filter or a bias detector. You don’t need a committee. You need awareness.
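For a small team, "one automated check" can be very small indeed. The sketch below is one possible content filter that masks email addresses before text is sent to an external AI tool; the regular expression is deliberately simple and will not catch every address format, and the function name `redact_emails` is made up for this example.

```python
import re

# Deliberately simple pattern: good enough for a first-pass filter,
# not a complete implementation of the email address grammar.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def redact_emails(text: str) -> str:
    """Replace anything that looks like an email address with a placeholder."""
    return EMAIL.sub("[REDACTED EMAIL]", text)


prompt = "Draft a reply to jane.doe@example.com about her refund."
print(redact_emails(prompt))
# prints: Draft a reply to [REDACTED EMAIL] about her refund.
```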

Can AI governance be automated?

Yes, and it should be. Automated testing, real-time monitoring, and dynamic guardrails reduce human error and speed up deployment. Tools can detect bias, flag privacy violations, and pause models when performance drops. But automation doesn’t replace accountability; it enables it. Someone still needs to own the system.
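To make the idea of a dynamic guardrail concrete, here is a minimal sketch based on the marketing-post scenario from earlier in the article: a post is blocked when any check fails, and the tone threshold tightens when the risk level is high. The `Context` fields, the `TONE_FLOOR` values, the posting window, and the idea of a brand-tone score from some classifier are all assumptions made for illustration.

```python
from dataclasses import dataclass
from datetime import time

# Minimum acceptable brand-tone score per risk level (illustrative values).
TONE_FLOOR = {"normal": 0.80, "high": 0.95}
ACTIVE_HOURS = (time(8, 0), time(20, 0))  # assumed posting window


@dataclass
class Context:
    local_time: time         # current time in the audience's time zone
    tone_score: float        # 0..1 from a hypothetical brand-tone classifier
    pr_crisis_active: bool   # set by the communications team
    risk_level: str = "normal"


def check_post(ctx: Context):
    """Return (approved, reasons). Any failed check blocks the post."""
    reasons = []
    if not (ACTIVE_HOURS[0] <= ctx.local_time <= ACTIVE_HOURS[1]):
        reasons.append("outside active hours")
    if ctx.tone_score < TONE_FLOOR[ctx.risk_level]:
        reasons.append("off brand tone")
    if ctx.pr_crisis_active:
        reasons.append("PR crisis in progress")
    return (not reasons, reasons)


print(check_post(Context(time(10, 30), 0.92, False)))
# prints: (True, [])
print(check_post(Context(time(10, 30), 0.92, False, risk_level="high")))
# prints: (False, ['off brand tone'])
```

Returning the reasons alongside the decision keeps the guardrail auditable: the owner can see why a post was blocked without rerunning the checks.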

What happens if I ignore AI governance?

You’ll face three things: regulatory fines (especially under the EU AI Act), reputational damage from biased or harmful outputs, and shadow AI that spirals out of control. Companies that banned AI saw shadow usage jump from 22% to 67% in six months. Ignoring governance doesn’t stop AI. It just makes it riskier.

How long does it take to implement AI governance?

You can set up a basic framework in 4-6 weeks: assign owners, pick one tool, define one policy. Full maturity takes 6-12 months, depending on scale. The key is starting now, not waiting for perfection. Progress beats perfection in AI governance.
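The first weeks of that basic framework mostly come down to an inventory: every use case, its owner, and the one risk being tackled first. The sketch below shows one way to keep that inventory in code and list the gaps; the `UseCase` fields and the sample entries are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class UseCase:
    name: str
    stage: str                 # "production" or "planning"
    owner: Optional[str]       # a named person; None means unassigned
    first_risk: Optional[str]  # the one risk to fix first, e.g. "bias"


def gaps(inventory: List[UseCase]) -> List[str]:
    """List use cases that still lack an owner or a chosen first risk."""
    problems = []
    for uc in inventory:
        if not uc.owner:
            problems.append(f"{uc.name}: no owner")
        if not uc.first_risk:
            problems.append(f"{uc.name}: no priority risk")
    return problems


inventory = [
    UseCase("loan screening", "production", "A. Chen", "bias"),
    UseCase("product descriptions", "production", None, "compliance"),
    UseCase("email drafting", "planning", "R. Patel", None),
]

for line in gaps(inventory):
    print(line)
# prints:
# product descriptions: no owner
# email drafting: no priority risk
```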

Is there a free AI governance template I can use?

Yes. The NIST AI Risk Management Framework (AI RMF) is free and publicly available. It’s not a checklist, but a structure built around four core functions: Govern, Map, Measure, and Manage. Many companies use it as a foundation. Pair it with a simple ownership chart and one automated monitoring tool, and you’re ahead of 80% of organizations.

Victoria Kingsbury

Love how this breaks down governance beyond the usual buzzword bingo. Too many orgs think a fancy ethics committee = compliance. Reality? It’s just theater until someone’s name is on the line for a bad output.

Real governance isn’t a doc you file away. It’s a muscle you build daily. Automated monitoring, clear owners, kill switches. Done right, it’s invisible. And that’s the point.

Tonya Trottman

‘Governance theater’: finally someone said it. I’ve seen companies spend $2M on AI ethics posters and a PowerPoint titled ‘Our Values’ while their chatbot spits out racist customer replies. The only thing more pathetic than the policy is the HR email that says ‘We’re committed to ethical AI’ signed by someone who doesn’t know what a transformer is.

sampa Karjee

It is not merely a matter of technical implementation, but of ontological responsibility. The very notion of delegating decision-making to an algorithmic entity, without a transcendent moral agent to bear consequence, constitutes a metaphysical failure of Western epistemology. Governance without accountability is not governance; it is nihilism dressed in compliance robes.

Kieran Danagher

Shadow AI isn’t rebellion. It’s just people being smart. If your governance is slower than your coffee machine, don’t blame the employees. Fix the system. I’ve seen teams use GPT-4 to write legal docs because the internal tool took 3 weeks to approve a simple prompt template. That’s not innovation. That’s failure.

OONAGH Ffrench

Accountability is the only thing that sticks. No committee. No policy manual. Just one person who gets called out when it breaks. That’s it. Everything else is decoration. The tools exist. The frameworks exist. What’s missing is the courage to assign ownership and enforce it.

poonam upadhyay

OMG I’ve seen this so many times, like, my boss let a junior use Midjourney to design the whole Q3 campaign and then got sued because the AI generated a logo that looked like a hate symbol??!! And then they blamed the ‘tool’?? Nooo, it’s YOU who didn’t set filters, didn’t review outputs, didn’t even CARE until the client screamed!! This isn’t tech failure, it’s leadership failure!!

Shivam Mogha

Start with one model. Assign one owner. Add one filter. That’s enough to begin.

Rahul Borole

It is imperative to recognize that AI governance is not a discretionary initiative but a foundational pillar of corporate integrity in the digital age. Organizations that fail to institutionalize dynamic guardrails, real-time auditing, and unequivocal accountability mechanisms are not merely at risk; they are actively endangering stakeholder trust and regulatory compliance on a systemic level. The time for incrementalism has expired.

ujjwal fouzdar

Think about it… what if the AI that’s writing your marketing copy… also writes your obituary? What if the model that recommends your loan… also decides your fate? We’re not talking about bugs anymore. We’re talking about souls being shaped by code that no one understands, owned by no one, and accountable to nobody. That’s not innovation. That’s a ghost in the machine… and it’s hungry.