Tag: LLM training infrastructure

16 Feb

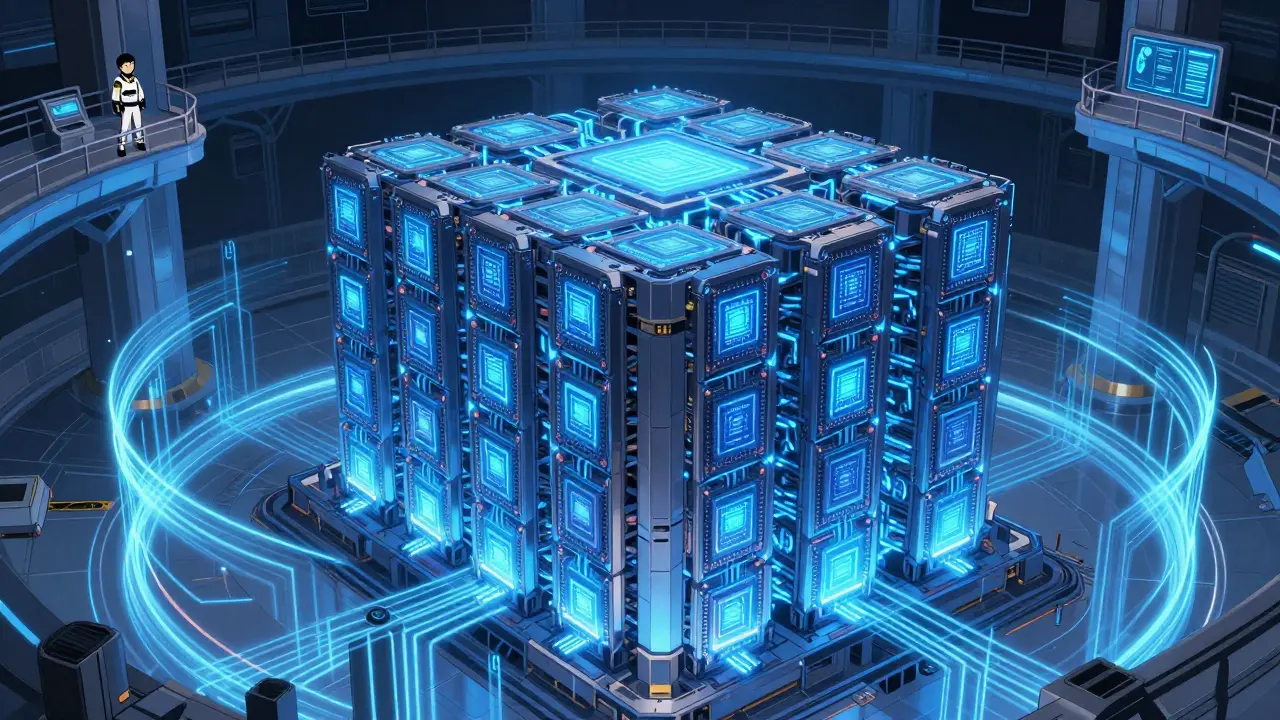

Compute Infrastructure for Generative AI: GPUs vs TPUs and Distributed Training Explained

GPUs and TPUs both power generative AI, but they work differently. Learn how each handles training, cost, and scaling, and why most organizations use both.