Traditional metrics like BLEU and ROUGE used to be the gold standard for judging how well a language model performed. They counted matching words and n-grams (and, for ROUGE-L, the longest common subsequence) between the model’s output and a reference answer. Simple. Fast. Reliable. But today, they’re misleading. If you still rely on them to evaluate modern LLMs, you’re not measuring quality. You’re measuring luck.

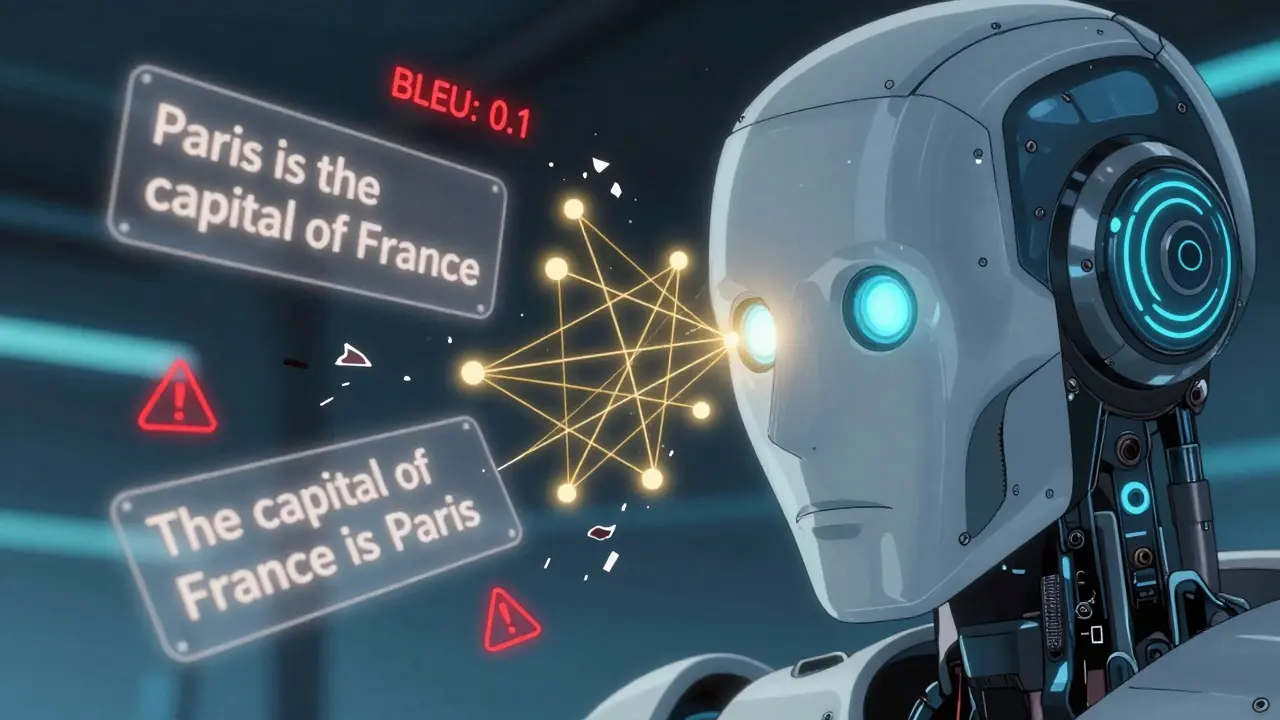

Here’s the problem: modern LLMs don’t just repeat phrases. They rephrase, expand, simplify, and restructure answers in ways that make perfect sense to humans but look completely wrong to BLEU. Imagine a model answers, "The capital of France is Paris," and your reference says, "Paris is the capital city of France." Unsmoothed sentence-level BLEU gives that answer a score of zero. Why? Because the word sequences don’t line up: every word in the answer appears in the reference, but only two of its five bigrams and none of its longer n-grams do. Any human would say that’s a perfect answer. That’s not a flaw in the model. It’s a flaw in the metric.
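To make the Paris example concrete, here is a minimal sketch of sentence-level BLEU with a single reference, uniform weights, and no smoothing. The tokenizer and function names are illustrative assumptions; production toolkits tokenize differently and usually apply smoothing, so their numbers will not match exactly.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase and keep only alphanumeric word tokens (a simplifying assumption)."""
    return re.findall(r"[a-z0-9]+", text.lower())


def ngrams(tokens, n):
    """Count every n-gram (as a tuple) in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def modified_precision(candidate, reference, n):
    """Clipped n-gram precision of the candidate against one reference."""
    cand, ref = ngrams(candidate, n), ngrams(reference, n)
    total = sum(cand.values())
    if total == 0:
        return 0.0
    clipped = sum(min(count, ref[gram]) for gram, count in cand.items())
    return clipped / total


def sentence_bleu(candidate, reference, max_n=4):
    """Unsmoothed sentence-level BLEU with uniform n-gram weights."""
    precisions = [modified_precision(candidate, reference, n) for n in range(1, max_n + 1)]
    if min(precisions) == 0:
        return 0.0  # a single zero precision zeroes the geometric mean
    if len(candidate) >= len(reference):
        brevity = 1.0
    else:
        brevity = math.exp(1 - len(reference) / len(candidate))
    return brevity * math.exp(sum(math.log(p) for p in precisions) / max_n)


candidate = tokenize("The capital of France is Paris")
reference = tokenize("Paris is the capital city of France.")
precisions = [modified_precision(candidate, reference, n) for n in range(1, 5)]
bleu = sentence_bleu(candidate, reference)
print(precisions, bleu)  # [1.0, 0.4, 0.0, 0.0] 0.0
```

The unigram precision is perfect, which is exactly why the zero is so misleading: the score collapses only because no three-word sequence survives the rewording.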

Why BLEU and ROUGE Fail Modern LLMs

BLEU was created in 2002 for machine translation. ROUGE came in 2004 for summarization. Both were built for systems that produced rigid, formulaic outputs. Back then, if a model changed a word order or used a synonym, it was considered an error. Today’s LLMs do that by design. They’re not trying to copy. They’re trying to understand.

Wandb’s 2023 analysis showed that BLEU and ROUGE only correlate with human judgment at 0.35-0.45. At that level, a ranking based on these scores can easily put the wrong model on top. Meanwhile, human evaluators agree on what’s good 80-90% of the time. The gap isn’t small. It’s catastrophic.
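A correlation figure like the ones quoted above comes from comparing metric scores with human ratings over the same set of outputs. The source does not say which coefficient was used, so the sketch below assumes Pearson correlation, and all of the data is invented for illustration.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x, mean_y = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical data: one human rating (1-5) and one metric score per output.
human_ratings = [5, 4, 2, 5, 1, 3]
overlap_metric = [0.10, 0.55, 0.40, 0.30, 0.20, 0.60]
semantic_metric = [0.93, 0.88, 0.61, 0.95, 0.42, 0.74]

r_overlap = pearson(overlap_metric, human_ratings)
r_semantic = pearson(semantic_metric, human_ratings)
print(f"overlap: {r_overlap:.2f}  semantic: {r_semantic:.2f}")
```

Running the same calculation on your own rated outputs is a quick way to check how far any metric can be trusted for your task.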

Even METEOR, which added synonym matching via WordNet, still fails. It improves slightly, about 15% better than BLEU, but it’s still counting words, not meaning. It doesn’t know that "the dog chased the cat" and "a feline was pursued by a canine" mean the same thing. It sees different words and penalizes the output. That’s not intelligence. That’s rigidity.

What Semantic Metrics Actually Measure

Semantic metrics don’t care about word order. They don’t count tokens. They understand context. They turn sentences into dense vectors (mathematical representations of meaning), then compare how close those vectors are. If two answers say the same thing in different words, they’ll get a high score.
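The "how close" comparison is usually cosine similarity. The sketch below uses tiny hand-written vectors as stand-ins for real embeddings; the numbers are invented for illustration and a real embedding model would produce vectors with hundreds of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


# Hypothetical 4-dimensional sentence embeddings, not output from any model.
paris_a = [0.9, 0.1, 0.3, 0.0]  # "The capital of France is Paris"
paris_b = [0.8, 0.2, 0.4, 0.1]  # "Paris is the capital city of France"
weather = [0.0, 0.9, 0.1, 0.7]  # "It will rain in Lyon tomorrow"

same_meaning = cosine_similarity(paris_a, paris_b)
different_meaning = cosine_similarity(paris_a, weather)
print(f"paraphrase: {same_meaning:.2f}  unrelated: {different_meaning:.2f}")
```

Because only the direction of the vectors matters, two sentences with no shared wording can still score close to 1.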

There are three main types of semantic metrics in use today:

- BERTScore: Uses BERT or RoBERTa to generate contextual embeddings for every token in both the model output and the reference. Then it greedily matches each token to its most similar counterpart in the other text by cosine similarity and aggregates those matches into precision, recall, and F1. Wandb found it correlates with human judgment at 82-83%. It’s open-source and fast enough for batch testing.

- BLEURT: Developed by Google, this metric is trained directly on human ratings. Instead of learning from text patterns, it learns what humans think is good. In head-to-head tests, BLEURT outperforms BERTScore by 5-7% in aligning with human preferences.

- Embedding similarity: Uses sentence-transformers models like all-MiniLM-L6-v2 to convert full sentences into vectors. These are especially good for long-form answers where you don’t need word-level alignment. Cosine similarity scores here often hit 0.85-0.92 for semantically identical outputs.
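The token-level matching behind BERTScore can be sketched in a few lines. This is a simplified illustration, not the reference implementation: it omits IDF weighting and baseline rescaling, and the 2-dimensional token vectors are invented stand-ins for real contextual embeddings.

```python
import math


def cosine(a, b):
    """Cosine similarity; assumes non-zero vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def greedy_match_f1(candidate_vecs, reference_vecs):
    """BERTScore-style precision, recall, and F1 from token embeddings.

    Precision: each candidate token takes its best match in the reference.
    Recall: each reference token takes its best match in the candidate.
    """
    precision = sum(
        max(cosine(c, r) for r in reference_vecs) for c in candidate_vecs
    ) / len(candidate_vecs)
    recall = sum(
        max(cosine(r, c) for c in candidate_vecs) for r in reference_vecs
    ) / len(reference_vecs)
    total = precision + recall
    f1 = 2 * precision * recall / total if total > 0 else 0.0
    return precision, recall, f1


# Hypothetical token embeddings, invented for illustration.
candidate_vecs = [[1.0, 0.1], [0.1, 1.0]]              # "dog", "chased"
reference_vecs = [[0.9, 0.2], [0.2, 0.9], [0.6, 0.6]]  # "canine", "pursued", "quickly"

precision, recall, f1 = greedy_match_f1(candidate_vecs, reference_vecs)
print(f"P={precision:.3f} R={recall:.3f} F1={f1:.3f}")
```

Recall comes out lower than precision here because the reference contains a token ("quickly") that nothing in the candidate covers well.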

Then there’s GPTScore, a newer approach (often described as LLM-as-a-judge) where you use a powerful LLM (like GPT-4) to judge another LLM’s output. You give it a prompt: "Rate how similar this response is to the reference, on a scale of 0 to 100, based on meaning, not wording." It’s not perfect, but it’s the closest we’ve gotten to simulating human evaluation.
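A judge setup of this kind needs two pieces: a prompt builder and a parser that refuses anything outside the 0-100 scale. The sketch below is one possible shape. `call_model` is a hypothetical callable (prompt string in, reply string out) standing in for whichever API client you use, and `fake_model` exists only so the example runs offline.

```python
import re

JUDGE_TEMPLATE = (
    "Rate how similar this response is to the reference, on a scale of 0 to 100, "
    "based on meaning, not wording. Reply with the number only.\n\n"
    "Reference: {reference}\n"
    "Response: {response}"
)


def build_judge_prompt(reference, response):
    """Fill the judging prompt with one reference/response pair."""
    return JUDGE_TEMPLATE.format(reference=reference, response=response)


def parse_score(reply):
    """Return the first integer in the reply if it lies in 0-100, else None."""
    match = re.search(r"-?\d+", reply)
    if match is None:
        return None
    score = int(match.group())
    return score if 0 <= score <= 100 else None


def judge(reference, response, call_model):
    """Score one pair; call_model is a hypothetical prompt -> reply callable."""
    return parse_score(call_model(build_judge_prompt(reference, response)))


def fake_model(prompt):
    # Stand-in for a real API call so the sketch runs without network access.
    return "Score: 92"


score = judge(
    "Paris is the capital city of France.",
    "The capital of France is Paris",
    fake_model,
)
print(score)  # 92
```

Returning `None` for unparseable replies keeps bad judge output from silently turning into a score.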

How Much Slower and More Expensive Are Semantic Metrics?

Yes, they cost more. Yes, they take longer. BERTScore takes 15-20 seconds per evaluation. BLEU takes 0.02 seconds. That’s roughly 750-1000x slower. Cloud costs for semantic metrics are 10-15 times higher, according to Evidently AI’s 2024 benchmarks. You need GPUs. You need memory. You need patience.
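Taking the timings quoted above at face value, the slowdown and the wall-clock cost of a batch can be worked out directly. The sketch assumes the figures are per output and that outputs are scored one after another with no batching, which the source does not specify.

```python
BLEU_SECONDS = 0.02
BERTSCORE_SECONDS = (15.0, 20.0)  # low and high end of the quoted range

# Ratio of BERTScore time to BLEU time at each end of the range.
slowdown = tuple(round(t / BLEU_SECONDS) for t in BERTSCORE_SECONDS)


def batch_minutes(n_outputs, seconds_each):
    """Wall-clock minutes to score n_outputs sequentially."""
    return n_outputs * seconds_each / 60


print(slowdown)                  # (750, 1000)
print(batch_minutes(200, 15.0))  # 50.0
```

At these rates a 200-output sample is a coffee-break job rather than an overnight one, which is why sampling is the usual compromise.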

But here’s the thing: you’re not paying for speed. You’re paying for accuracy. If you’re evaluating a customer-facing chatbot, a medical summary tool, or a legal document generator, getting the wrong model because you used BLEU could cost you users, trust, or even liability.

Think of it like choosing between a bicycle and a car. The bicycle is cheaper and you can hop on right away. But if you need to cross a city, it’s not practical. Semantic metrics are the car. BLEU is the bicycle. Use the bicycle for quick smoke tests. Use the car for real decisions.

Real-World Examples: What Semantic Metrics Reveal

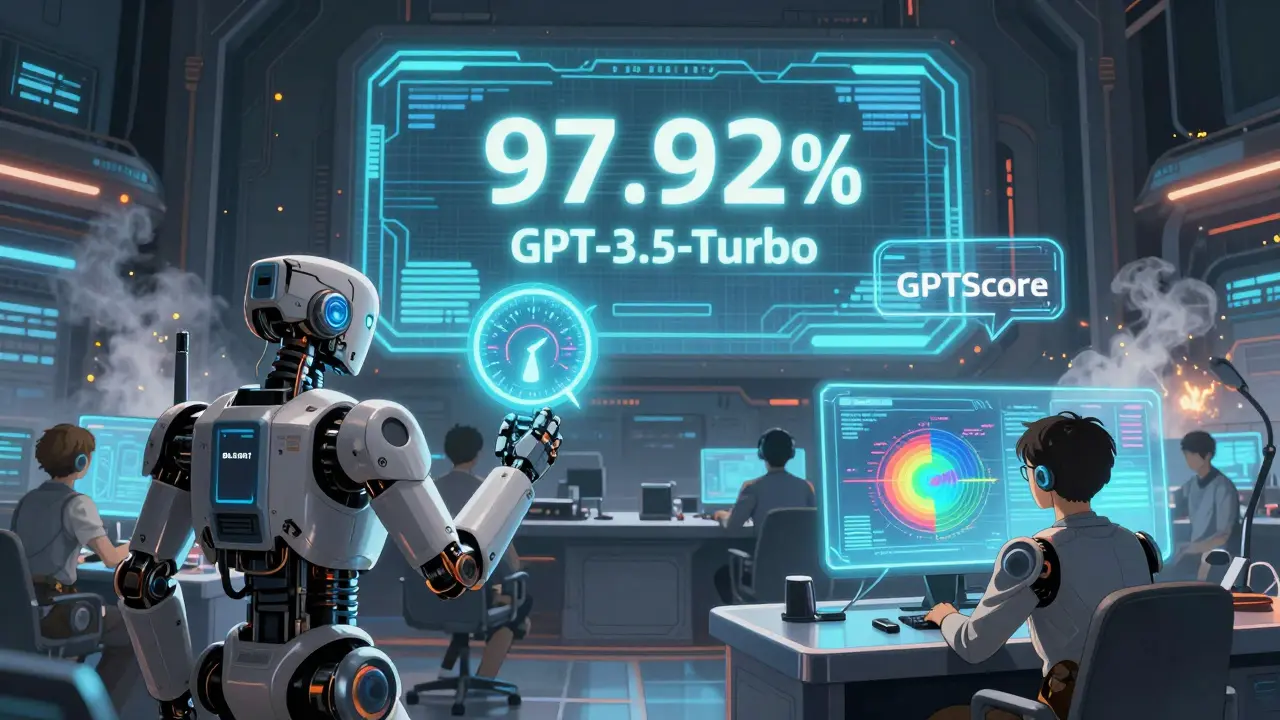

Let’s say you’re comparing GPT-3.5-turbo and GPT-4o-mini. With BLEU, GPT-4o-mini might score higher because it’s more precise. But with BERTScore, GPT-3.5-turbo might win because it paraphrases better. With BLEURT, GPT-4o-mini might dominate because it better matches human preferences. With GPTScore, you might find GPT-3.5-turbo is more helpful in open-ended questions, even if it’s less accurate.

That’s the power of semantic metrics: they reveal different strengths. BLEU hides them. You think you’re measuring quality. You’re really just measuring vocabulary overlap.

Wandb tested both models on a set of 500 real-world prompts. GPT-3.5-turbo scored 97.92% on semantic helpfulness. GPT-4o-mini scored 95.83%. But for factual accuracy, GPT-4o-mini was at 99.99%. GPT-3.5-turbo was at 89.4%. So which one is better? It depends on your goal. If you need help writing a cover letter, go with GPT-3.5-turbo. If you need a medical diagnosis summary, go with GPT-4o-mini.

Without semantic metrics, you’d never see that distinction.

How to Implement Semantic Metrics in Practice

You don’t need to replace BLEU. You need to layer it.

Here’s what top teams are doing:

- Start with BLEU/ROUGE for quick regression checks. If your new model scores worse than the old one on BLEU, investigate. But don’t stop there.

- Run BERTScore on a sample of 100-200 outputs. This tells you if your model is generating semantically valid responses.

- Use BLEURT if you care about human-like preferences. It’s the best choice for chatbots, customer service, or content generation.

- Test with LLM-as-a-judge for complex rubrics. For example: "Is this response clear, accurate, and helpful?" Use GPT-4 or Claude 3 for this.

- Run multiple iterations. LLMs are stochastic. If your temperature is above 0, run each prompt 5-10 times. Semantic similarity scores vary. You need to see the distribution, not just one result.
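The last step, looking at the distribution rather than a single result, can be sketched as follows. The prompt ids, scores, and the 0.05 spread cutoff are all hypothetical; choose a cutoff that fits your own metric and task.

```python
import statistics


def summarize_runs(scores):
    """Summarize semantic-similarity scores from repeated runs of one prompt."""
    if len(scores) < 2:
        raise ValueError("need at least two runs to estimate spread")
    return {
        "mean": statistics.mean(scores),
        "stdev": statistics.stdev(scores),
        "min": min(scores),
        "max": max(scores),
    }


def unstable_prompts(runs_by_prompt, max_stdev=0.05):
    """Return prompt ids whose scores vary more than max_stdev across runs."""
    return [
        prompt_id
        for prompt_id, scores in runs_by_prompt.items()
        if summarize_runs(scores)["stdev"] > max_stdev
    ]


# Hypothetical scores from five runs of each prompt at temperature > 0.
runs_by_prompt = {
    "refund-policy": [0.91, 0.90, 0.92, 0.89, 0.91],
    "dosage-question": [0.93, 0.62, 0.88, 0.55, 0.90],
}

flagged = unstable_prompts(runs_by_prompt)
print(flagged)  # ['dosage-question']
```

A prompt with a healthy mean but a wide spread is often a bigger risk than one with a slightly lower, stable score.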

Vellum.ai recommends using sentence-embedding models like all-MiniLM-L6-v2 (a bi-encoder, not a cross-encoder) for most use cases. They’re fast, accurate, and open-source. For production systems, tools like UpTrain or ZenML let you monitor these metrics automatically, so you catch degradation before users do.

The Future: Beyond Single Metrics

The next wave isn’t about one better metric. It’s about combining them.

Leading frameworks now use three pillars:

- Semantic similarity (BERTScore, BLEURT)

- Comprehensive benchmarks (MMLU, HELM, BIG-bench) that test reasoning, knowledge, and safety across hundreds of tasks

- LLM-as-a-judge with natural language rubrics

And new benchmarks are emerging. MRCR (Multi-Round Co-reference Resolution) tests whether models can retrieve and rephrase specific facts planted in long documents. It’s more objective than traditional QA. The arXiv study from May 2025 showed that SimpleQA, a benchmark with 94.4% expert agreement, is far more reliable than GPQA, which only had 74%.

What’s still missing? Efficiency. Few of these benchmarks measure how fast a model responds. Or how much it costs to run. Or whether users actually save time using it. That’s the next frontier.

Final Thought: Stop Evaluating Like It’s 2010

BLEU and ROUGE were tools for a different era. They were built for systems that couldn’t understand language. Today’s LLMs don’t just generate text. They generate meaning. If you’re still judging them by word overlap, you’re evaluating a ghost.

Semantic metrics aren’t just better. They’re necessary. They’re slower. They cost more. But they’re the only way to know if your model actually works for real people.

Use BLEU for quick checks. Use semantic metrics for real decisions. And always, always, when in doubt, ask a human.

Why can't BLEU and ROUGE detect paraphrased answers?

BLEU and ROUGE count exact word matches or n-gram overlaps. If a model rewords a sentence, even perfectly, they see fewer matching sequences and give lower scores. For example, "The sky is blue" and "Blue is the color of the sky" share their key words but only one two-word sequence ("the sky") and nothing longer. BLEU treats this as a failure, even though it’s a perfect paraphrase. These metrics were designed for machine translation, where literal accuracy mattered more than natural expression.

Is BERTScore better than BLEURT?

It depends on your goal. BERTScore is better at detecting semantic equivalence, so it’s great for measuring if two answers mean the same thing. BLEURT is better at matching human preferences. If you want to know which answer people would prefer, BLEURT wins by 5-7% in head-to-head tests. Use BERTScore for technical accuracy, BLEURT for user satisfaction.

Can I use semantic metrics in production?

Yes, but you need the right setup. Semantic metrics require GPU power and take seconds per evaluation. For production, use lightweight models like all-MiniLM-L6-v2 and batch evaluations. Tools like UpTrain or Evidently AI let you monitor semantic similarity continuously without slowing down your app. Don’t run it on every user request. Run it on a sample of requests and alert when scores drop.
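One way to structure the sample-and-alert approach is a sampling gate plus a rolling average. This is a minimal sketch and not how UpTrain or Evidently AI work internally; the class name, window size, and 0.80 floor are hypothetical defaults.

```python
import random
from collections import deque


def should_sample(rate, rng=random):
    """Decide whether to score this request, given a sampling rate in [0, 1]."""
    return rng.random() < rate


class RollingAlert:
    """Track a rolling mean of scores and flag when it drops below a floor."""

    def __init__(self, window=50, floor=0.80):
        self.scores = deque(maxlen=window)
        self.floor = floor

    def add(self, score):
        """Record a score; return True once the full window averages below the floor."""
        self.scores.append(score)
        window_full = len(self.scores) == self.scores.maxlen
        return window_full and sum(self.scores) / len(self.scores) < self.floor


alert = RollingAlert(window=3, floor=0.80)
fired = [alert.add(s) for s in [0.90, 0.90, 0.90, 0.50]]
print(fired)  # [False, False, False, True]
```

Waiting for a full window before alerting avoids paging someone over the first unlucky sample.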

Do I still need human evaluation?

Absolutely. No metric, not even GPTScore, replaces human judgment. Semantic metrics reduce the number of human reviews you need, but they don’t eliminate them. Use them to triage: flag low-scoring outputs for human review. Use humans to calibrate your metrics. Human feedback is how you know your BERTScore threshold is set right.
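Calibration and triage can both be sketched with a handful of human-labelled outputs. The scores and labels below are invented, and picking the threshold by simple agreement is just one reasonable choice; you may prefer to weight missed failures more heavily.

```python
def agreement(threshold, scores, human_ok):
    """Fraction of outputs where 'score >= threshold' matches the human label."""
    hits = sum((s >= threshold) == ok for s, ok in zip(scores, human_ok))
    return hits / len(scores)


def calibrate_threshold(scores, human_ok):
    """Pick the observed score that agrees most often with human labels."""
    candidates = sorted(set(scores))
    return max(candidates, key=lambda t: agreement(t, scores, human_ok))


def needs_review(scores, threshold):
    """Indices of outputs scoring below the threshold, for human triage."""
    return [i for i, s in enumerate(scores) if s < threshold]


# Hypothetical semantic scores with a human accept/reject label for each.
scores = [0.95, 0.91, 0.88, 0.84, 0.79, 0.70]
human_ok = [True, True, True, False, False, False]

threshold = calibrate_threshold(scores, human_ok)
review_queue = needs_review(scores, threshold)
print(threshold, review_queue)  # 0.88 [3, 4, 5]
```

Re-running the calibration whenever you collect new human labels keeps the threshold from drifting away from what reviewers actually accept.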

What’s the cheapest way to start using semantic metrics?

Start with BERTScore using the open-source Hugging Face library. It’s free, requires no API keys, and works on CPU for small batches. Run it on 100 sample outputs from your model. Compare the scores to your BLEU scores. If they tell very different stories, you’ve found your problem. That’s all you need to start shifting your evaluation strategy.

Meghan O'Connor

BLEU is garbage. I’ve seen models with 0.1 BLEU score that were flawless, and ones with 0.9 that were outright nonsense. Why are we still using this relic? It’s like judging a symphony by how many notes match the sheet music. If the pianist improvises beautifully, you don’t penalize them-you applaud. But no, BLEU just screams ‘ERROR’ because ‘Paris is the capital’ ≠ ‘The capital is Paris.’

Mark Nitka

Finally, someone said it. I’ve been pushing my team to ditch BLEU for months. We switched to BERTScore last quarter and our model rankings flipped entirely. Turned out our ‘best’ model was just good at copying phrases. The real winner? The one that reworded everything but got the meaning dead-on. Human evaluators agreed 90% of the time. BLEU? 40%. It’s not even close.

Kelley Nelson

While I concede that semantic metrics offer a more nuanced evaluation paradigm, one must not overlook the computational overhead inherent in such approaches. The assertion that BERTScore or BLEURT constitutes a ‘car’ versus BLEU’s ‘bicycle’ is, in my view, a reductive metaphor. One cannot deploy these metrics at scale without significant infrastructural investment, and for many industrial applications-particularly those requiring real-time feedback-this remains impractical. A hybrid approach, as suggested, is prudent; however, the claim that BLEU is ‘catastrophically flawed’ ignores its utility in controlled, high-throughput environments.

Aryan Gupta

They’re all lying. You think these ‘semantic metrics’ are objective? Nope. They’re trained on data from OpenAI, Anthropic, Google-same companies selling the models. Of course BERTScore favors GPT-4o-mini. It’s rigged. And don’t get me started on GPTScore-that’s just a fancy way of asking the same AI to grade itself. It’s like letting a fox judge the chicken coop. The whole thing’s a corporate scam to sell more GPUs and cloud credits. BLEU’s boring, sure-but at least it’s honest.

Fredda Freyer

What’s interesting isn’t just that BLEU fails-it’s what that failure reveals about our assumptions. We built these metrics on the idea that language is a code to be cracked, that meaning is a fixed set of tokens. But human communication is fluid, contextual, and often messy. A model that paraphrases perfectly isn’t cheating-it’s demonstrating understanding. Semantic metrics finally treat language like what it is: a living system, not a spreadsheet. And yes, they’re slower. But so is therapy. And we don’t skip it just because it takes time. We use it because it works. The real question isn’t ‘Can we afford semantic metrics?’ It’s ‘Can we afford not to?’

Gareth Hobbs

BLEU? LOL. I’ve been using it since 2015 and it’s been useless since 2020. The only reason people still use it is because they’re too lazy to set up GPU clusters. Also, why do all these ‘experts’ keep saying ‘human judgment’ like it’s some holy grail? Humans are biased, inconsistent, and emotionally attached to their favorite models. I’ve seen judges give 10/10 to a response that was factually wrong because it ‘sounded smart.’ Semantic metrics? Still just math. But at least math doesn’t have an agenda. And no, I don’t care if you think I’m paranoid. I’ve seen the data.

Zelda Breach

Let me just say this: if you're still using BLEU in 2025, you're either incompetent or being paid to lie. The fact that this is even a debate is embarrassing. I've seen models fail safety tests because BLEU gave them a high score. I've seen customers churn because the chatbot sounded robotic. You don't get to call yourself an AI engineer if you're still clinging to 20-year-old metrics. Fix your damn pipeline.