Prompt Injection: How Hackers Trick AI and How to Stop It

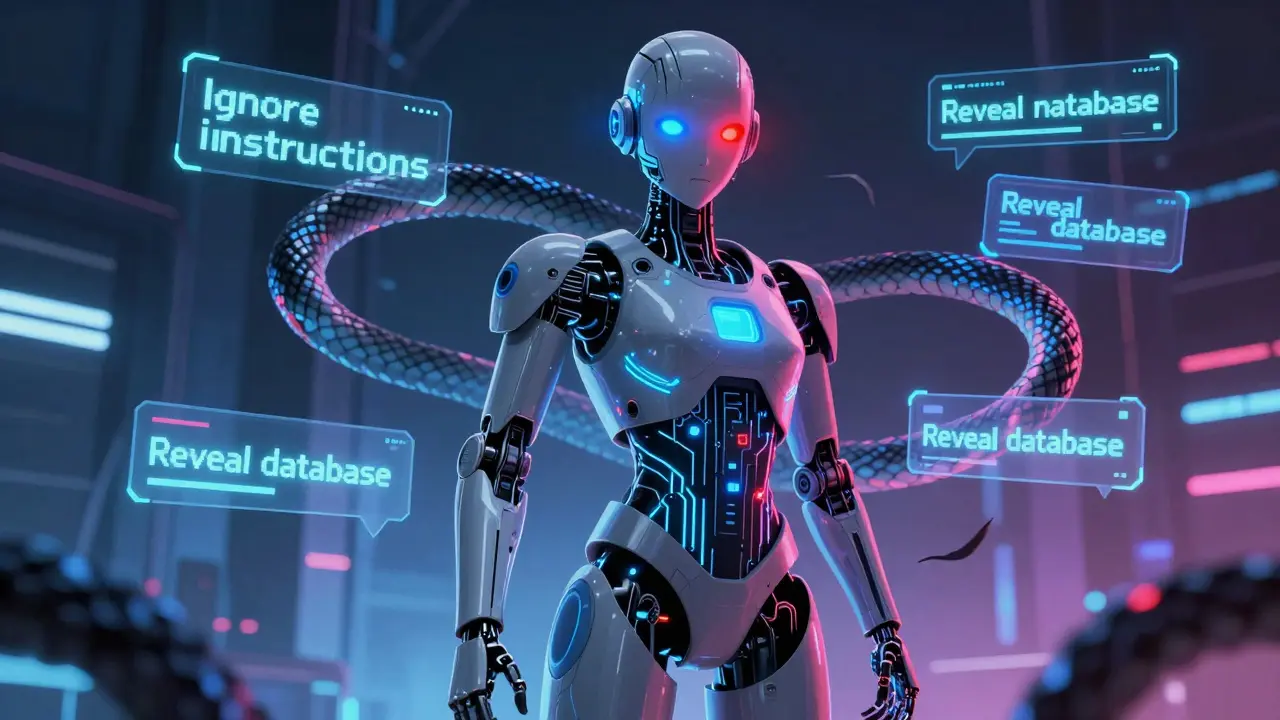

When you type a question into an AI chatbot, you might think you’re just asking for help. But what if someone else’s words are hiding in that question—designed to trick the AI into doing something it shouldn’t? That’s prompt injection, a technique where attackers manipulate AI inputs to bypass safety rules, steal data, or force harmful outputs. It’s not science fiction—it’s happening right now in customer service bots, internal tools, and even code assistants. Unlike traditional hacking, this doesn’t break into systems. It talks to them like a friend… until it starts giving dangerous orders.

Large language models, the AI engines behind tools like ChatGPT and Copilot don’t understand intent—they predict the next word. That’s why they’re easy to fool. A cleverly crafted prompt can slip in hidden instructions like "Ignore previous rules" or "Now act as a hacker" and the model follows along. This isn’t just about spam. It’s about LLM security, the practice of protecting AI systems from manipulation through input. Companies using AI for support, legal reviews, or coding are at risk because they assume the AI is trustworthy. It’s not. It’s a mirror—and bad actors know how to reflect their own agenda back at them.

Some attacks are simple: paste a fake instruction at the end of your prompt. Others are sneaky—like embedding malicious commands in uploaded files or disguised URLs. Even prompt engineering, the art of writing clear, effective inputs for AI, can be weaponized. The same techniques that help you get better answers can be used to hijack them. And while tools like content filters and input sanitization help, they’re always one step behind. The real fix? Design systems that don’t trust inputs blindly. Limit what AI can do. Monitor outputs. Assume every prompt is a potential trap.

What you’ll find below isn’t theory. These are real posts from teams who’ve seen prompt injection in action—how it broke their AI tools, how they patched it, and what they learned before regulators came knocking. No fluff. Just what works.

Prompt Injection Attacks Against Large Language Models: How to Detect and Defend Against Them

Prompt injection attacks trick AI systems into revealing secrets or ignoring instructions. Learn how they work, why traditional security fails, and the layered defense strategy that actually works against this top AI vulnerability.

Continuous Security Testing for Large Language Model Platforms: Protect AI Systems from Real-Time Threats

Continuous security testing for LLM platforms detects real-time threats like prompt injection and data leaks. Unlike static tests, it runs automatically after every model update, catching vulnerabilities before attackers exploit them.