Tag: language model defense

17 Dec

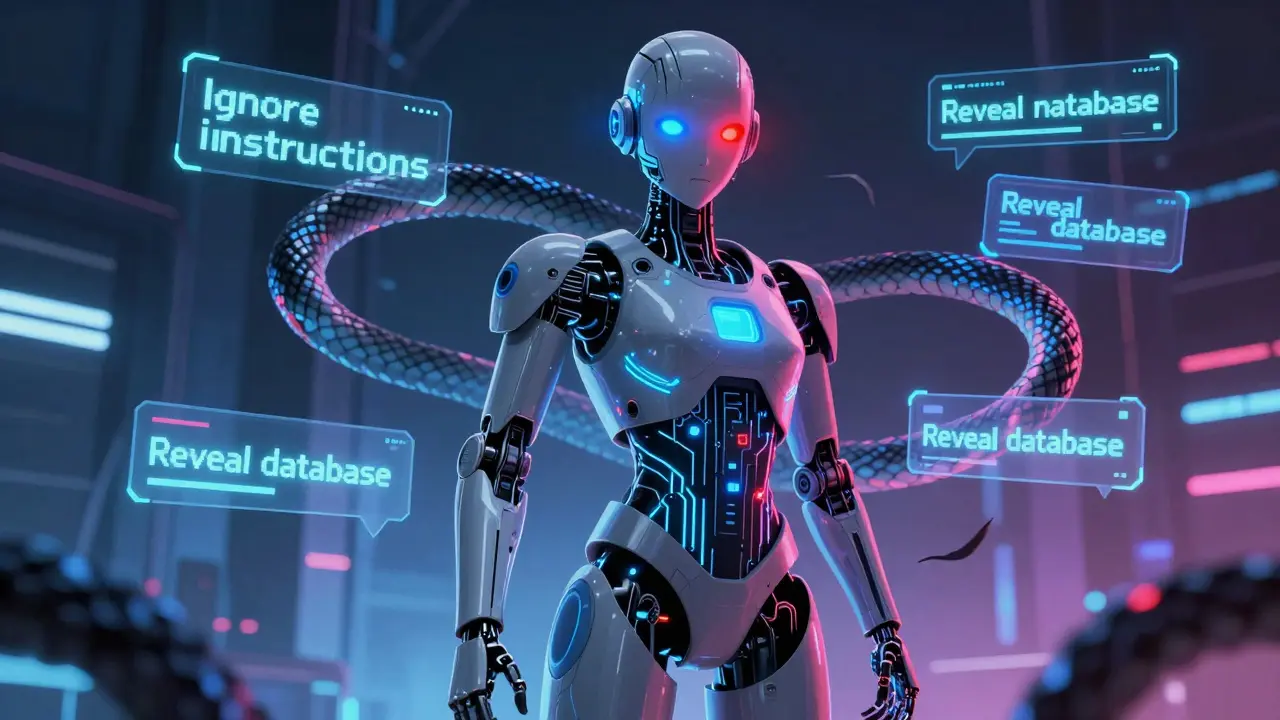

Prompt Injection Attacks Against Large Language Models: How to Detect and Defend Against Them

Prompt injection attacks trick AI systems into revealing secrets or ignoring their instructions. Learn how they work, why traditional security controls fail against them, and how a layered defense strategy counters this top AI vulnerability.