Tag: KV caching LLM

31 Jan

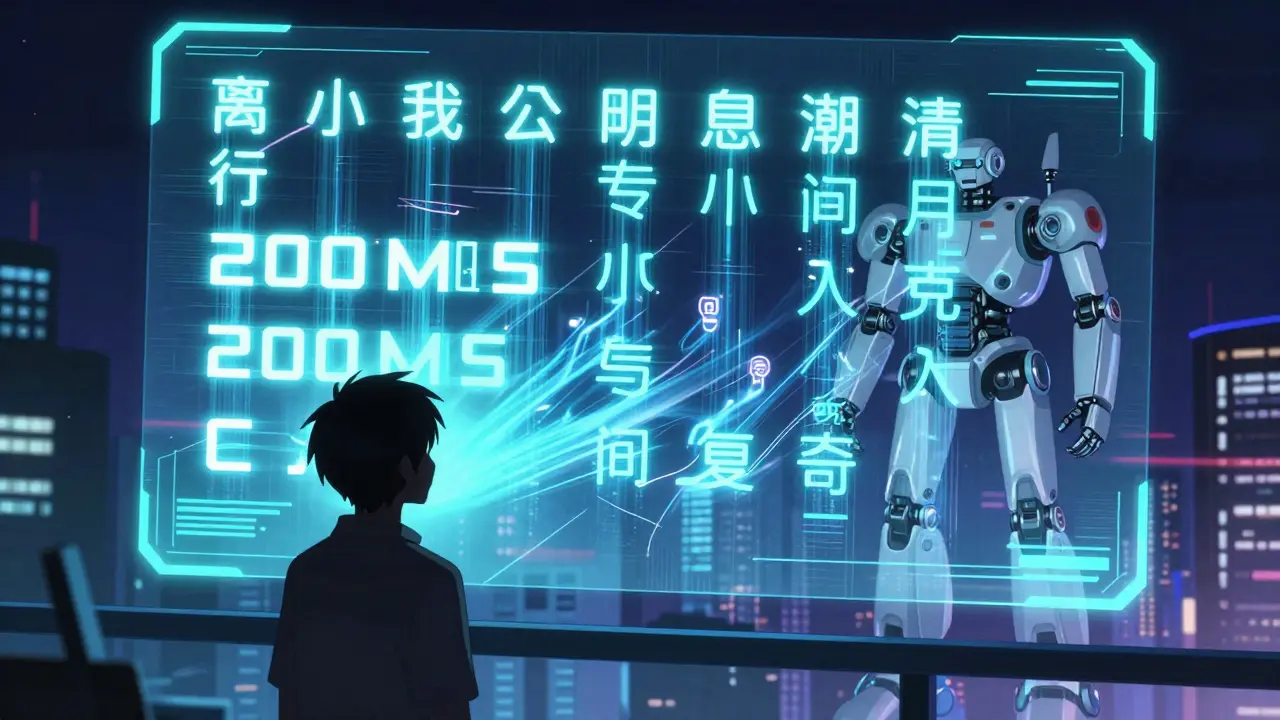

Latency Optimization for Large Language Models: Streaming, Batching, and Caching

Learn how streaming, batching, and caching can slash LLM response times by up to 70%. Real-world benchmarks, hardware tips, and step-by-step optimization for chatbots and APIs.